RussianSensation

Elite Member

- Sep 5, 2003

> Better solution for bottlenecking is to upgrade to a higher resolution. At 1440p you also end up with better image quality. Seeing 980 Ti owners still on cheap 1080p displays just rubs me the wrong way, but sadly there are still many of them.

> RS, any 1440p benches from that site?

I agree. It's ludicrous how some are sticking to 22-24" 1080p 60Hz monitors and eyeing a GTX 1070/1080 upgrade. These PC gamers have completely lost sight of what these cards are for.

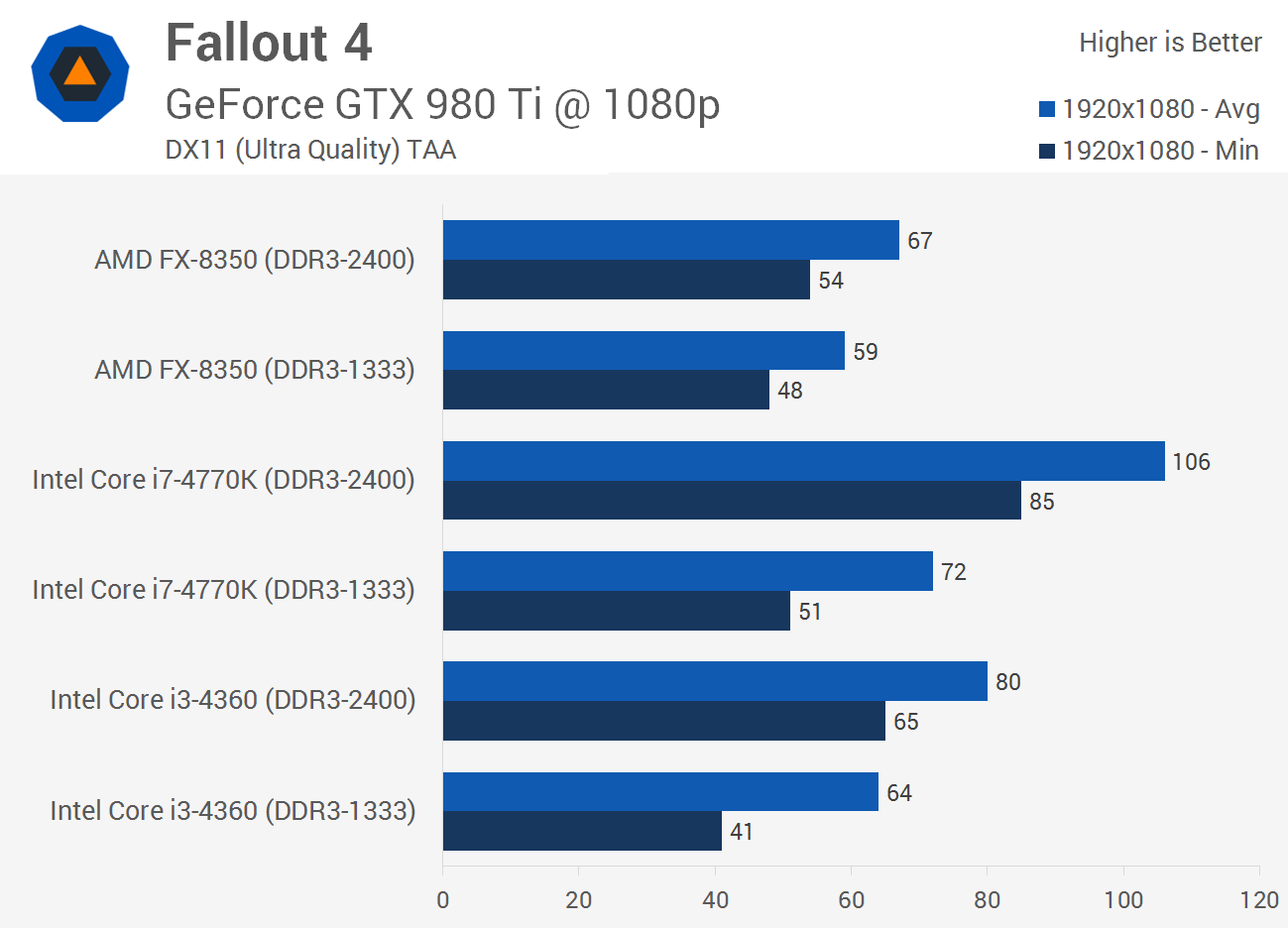

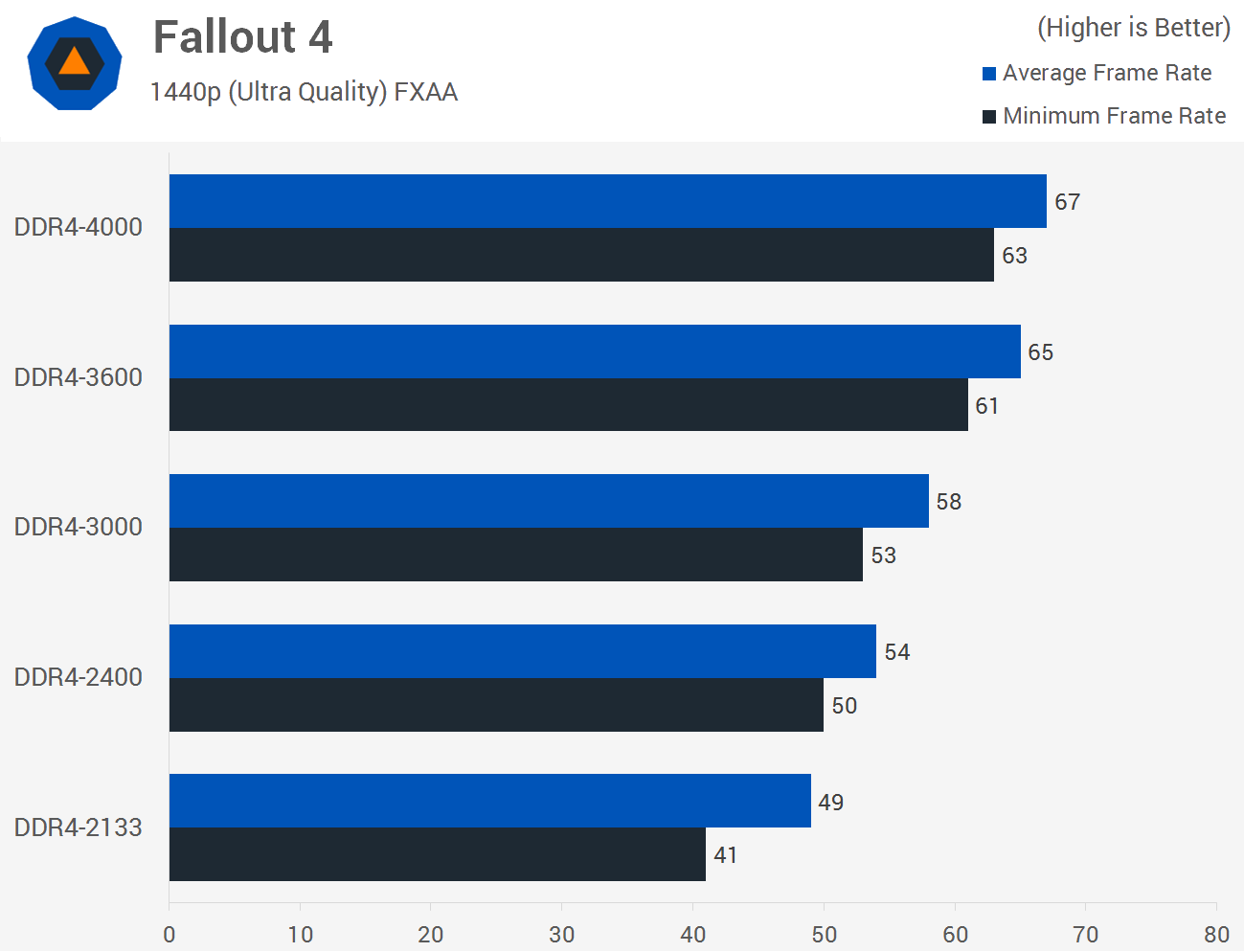

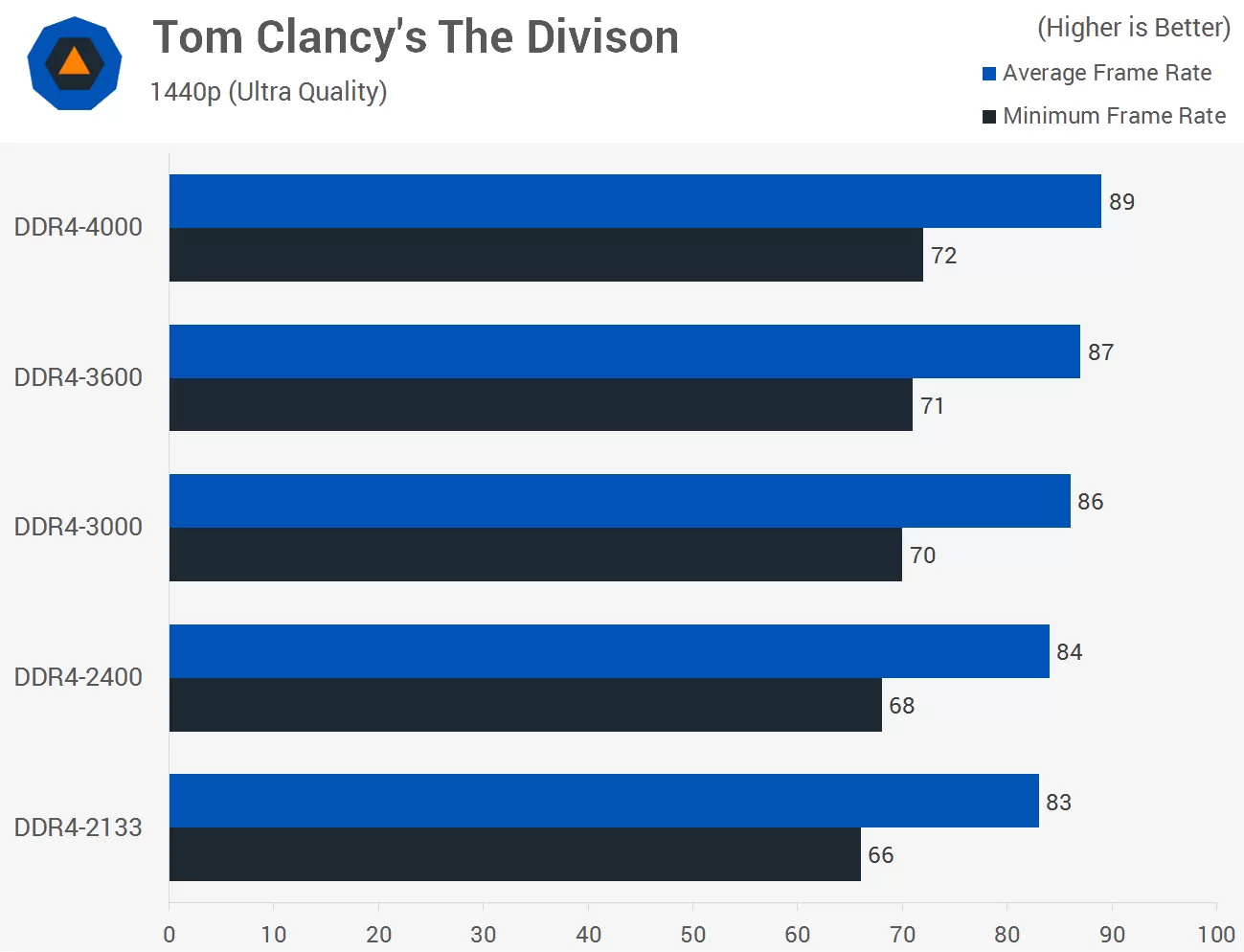

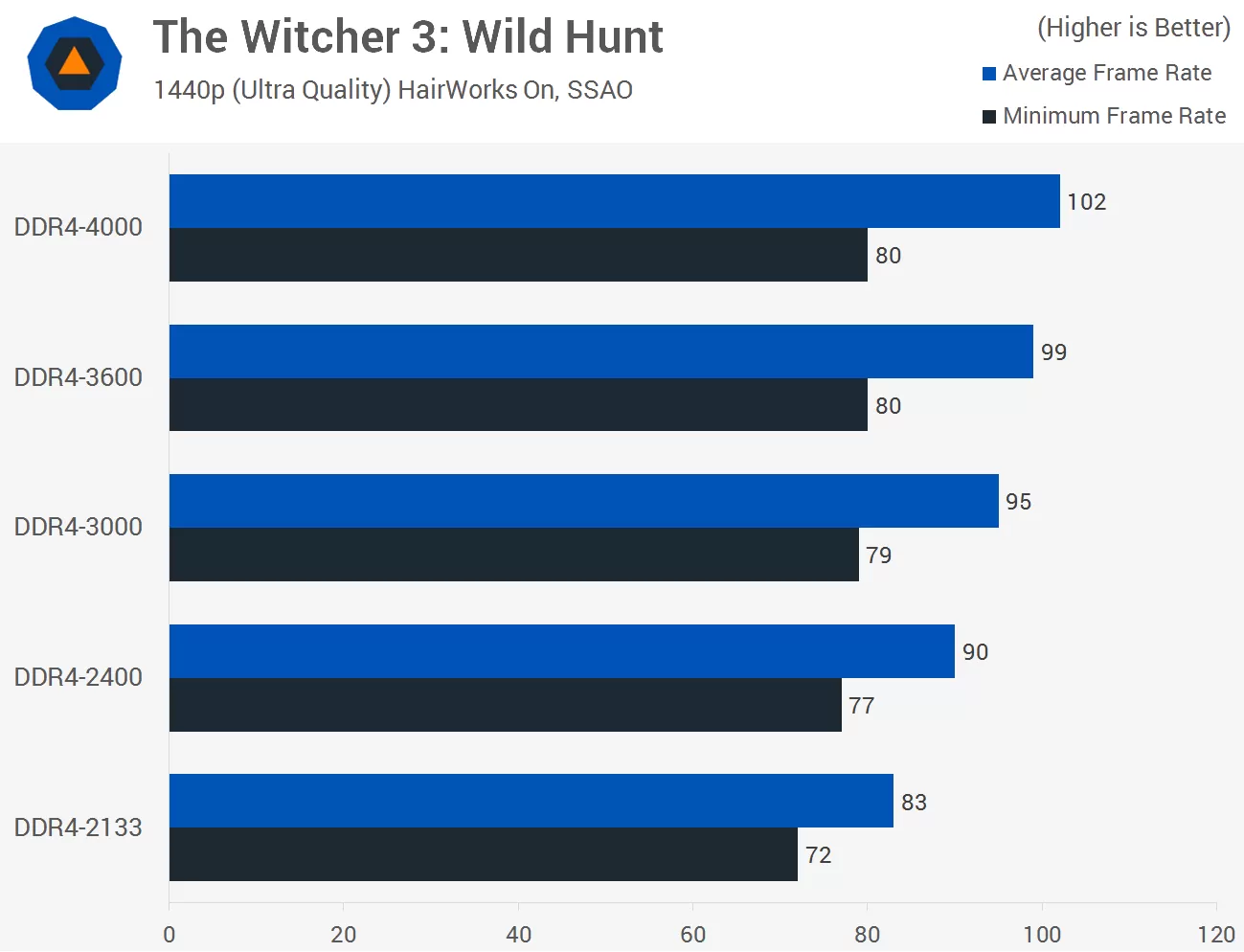

Not from that site, but TechSpot just did a review of 980 Ti SLI, and it shows major bottlenecks at 1440p on a Skylake i7 6700K @ 4.5GHz paired with slower DDR4 memory. We know that a Skylake i7 6700K @ 4.5GHz with DDR4-2133 is still faster than any CPU other than an i7 4790K with DDR3-2400. If even that CPU holds back 980 Ti SLI at 1440p, then every slower CPU is bottlenecking 1070/1080-level cards at 1080p, where the GPU has even less work to do per frame.

Test System Specs:

- Intel Core i7-6700K Skylake @ 4.50GHz
- Asrock Z170M OC Formula
- G.Skill TridentZ 8GB (2x4GB) DDR4-4000
- 2x GeForce GTX 980 Ti SLI
- Samsung SSD 950 Pro 512GB
- Silverstone Strider Series ST1000-G Evolution 1000W
- Windows 10 Pro 64-bit
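
For context on the memory speeds discussed here, theoretical peak DRAM bandwidth scales linearly with the transfer rate: each DDR4 channel is 64 bits (8 bytes) wide, and mainstream Skylake desktop platforms run two channels, as with the 2x4GB kit above. A minimal Python sketch, assuming dual-channel operation and ignoring memory latency (which also affects minimum frame rates):

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, channels: int = 2, bus_width_bits: int = 64) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10^9 bytes per second).

    transfer_rate_mts: data rate in megatransfers per second (the "4000" in DDR4-4000).
    channels: populated memory channels (2 assumed here for a Skylake desktop).
    bus_width_bits: width of one channel; 64 bits for standard DDR4 DIMMs.
    """
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


# Compare the memory speeds mentioned in this thread.
for rate in (2133, 3000, 3600, 4000):
    print(f"DDR4-{rate}: {peak_bandwidth_gbs(rate):.1f} GB/s")
```

These are theoretical ceilings (about 34 GB/s for DDR4-2133 versus 64 GB/s for DDR4-4000 in dual channel); games never reach them, and real-world gains also depend on latency, so frame rates will not track these numbers one-to-one.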

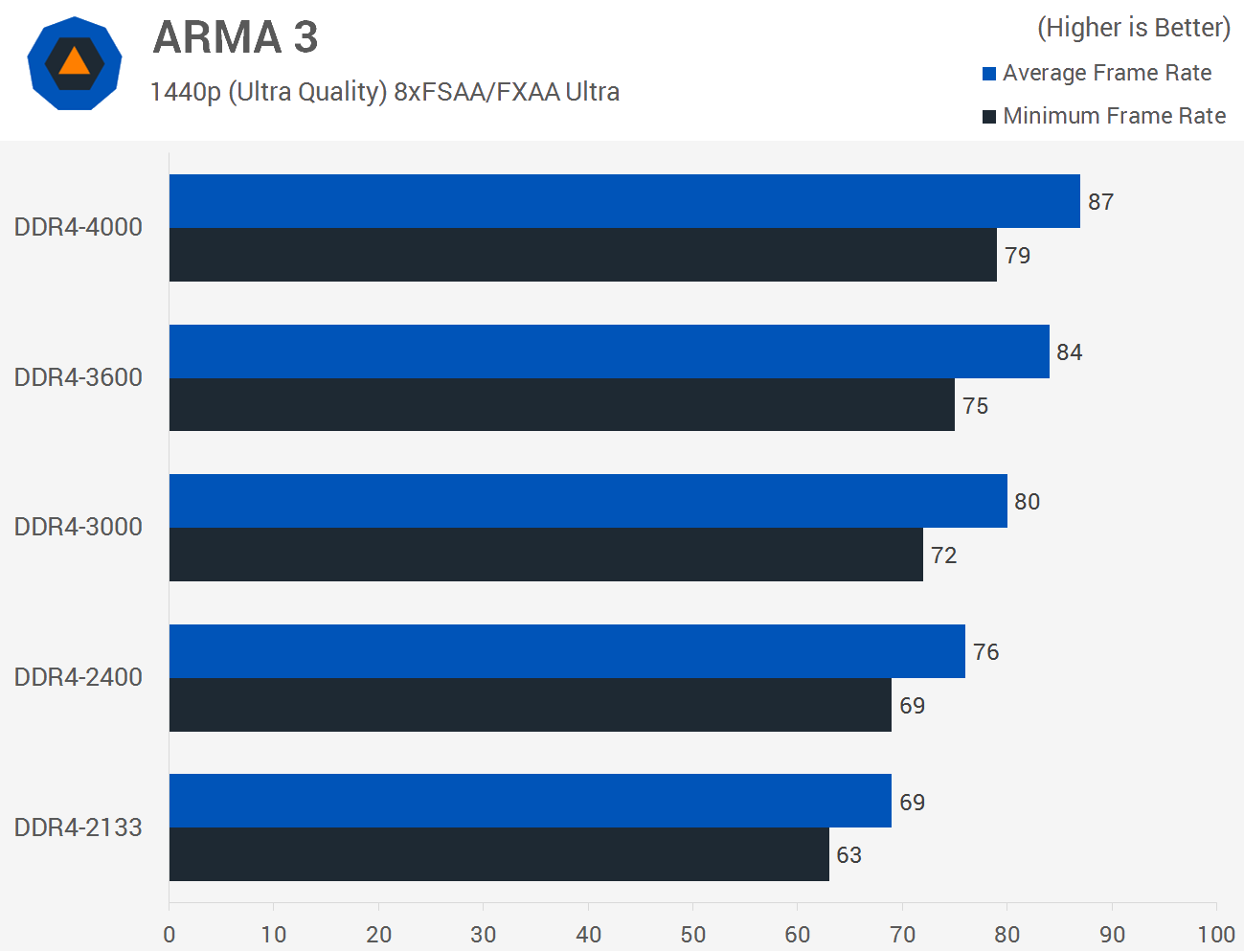

ARMA 3 sees a 10% increase in minimum frame rates when going from DDR4-3000 to DDR4-4000.

Although DDR4-4000 wasn’t a huge step forward over the 3600MT/s memory, it was a whopping great step from 3000MT/s, delivering a 19% greater minimum frame rate.
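
To put a percentage like this in frame-time terms, the sketch below computes the relative gain and the matching time per frame. The 50 → 59.5 fps pair is purely hypothetical, chosen only to show what a 19% uplift looks like; it is not TechSpot's data.

```python
def percent_gain(baseline_fps: float, new_fps: float) -> float:
    """Relative improvement of new_fps over baseline_fps, in percent."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (new_fps - baseline_fps) / baseline_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Hypothetical minimum frame rates (NOT taken from the TechSpot review).
slow_ram_min_fps = 50.0   # e.g. with DDR4-3000
fast_ram_min_fps = 59.5   # e.g. with DDR4-4000

gain = percent_gain(slow_ram_min_fps, fast_ram_min_fps)
print(f"Minimum fps gain: {gain:.1f}%")
print(f"Frame time at the minimum: {frame_time_ms(slow_ram_min_fps):.2f} ms -> "
      f"{frame_time_ms(fast_ram_min_fps):.2f} ms")
```

A 19% higher frame rate means each of those slowest frames takes about 16% less time (1 - 1/1.19).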

GTX 1070/1080 and overclocked 980 Ti cards are all CPU-limited at 1080p. These are 1440p cards. Right now, a Skylake i7 6700K @ 4.5GHz doesn't bottleneck a single 980 Ti-level card at 1440p.
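
This reasoning can be captured with a simple min() model: the frame rate you actually get is roughly capped by whichever of the CPU (with its memory) or the GPU is slower, and raising the resolution mostly lowers the GPU-side ceiling while leaving the CPU-side ceiling about the same. A minimal Python sketch of that model; all fps figures are hypothetical placeholders rather than benchmark results, and real games rarely split this cleanly.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    label: str
    cpu_limited_fps: float  # frames/s the CPU + RAM can prepare (assumed roughly resolution-independent)
    gpu_limited_fps: float  # frames/s the GPU can render at this resolution and settings


def delivered_fps(s: Scenario) -> float:
    """Frame rate under a simple bottleneck model: the slower side sets the pace."""
    return min(s.cpu_limited_fps, s.gpu_limited_fps)


def limiter(s: Scenario) -> str:
    """Which component sets the pace in this scenario."""
    if s.cpu_limited_fps < s.gpu_limited_fps:
        return "CPU"
    if s.gpu_limited_fps < s.cpu_limited_fps:
        return "GPU"
    return "balanced"


# Hypothetical numbers for illustration only.
CPU_CAP = 120.0
scenarios = [
    Scenario("1080p, fast GPU", CPU_CAP, 160.0),
    Scenario("1440p, fast GPU", CPU_CAP, 105.0),
    Scenario("1440p, next-gen GPU", CPU_CAP, 170.0),
]

for s in scenarios:
    print(f"{s.label:<22} {delivered_fps(s):6.1f} fps  ({limiter(s)}-limited)")
```

In this toy example the same GPU is CPU-limited at 1080p but becomes the limiter at 1440p, and a much faster future GPU pushes even 1440p back into CPU-limited territory.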

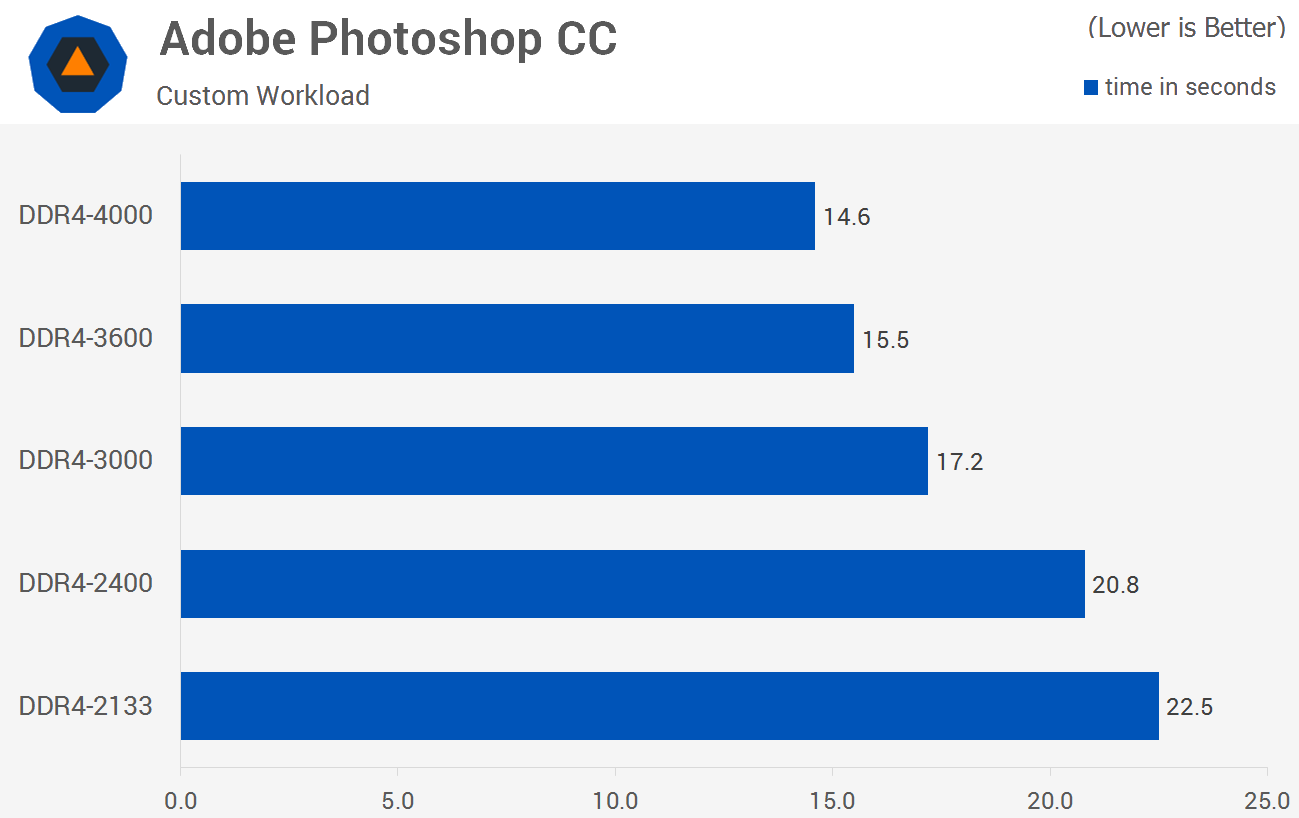

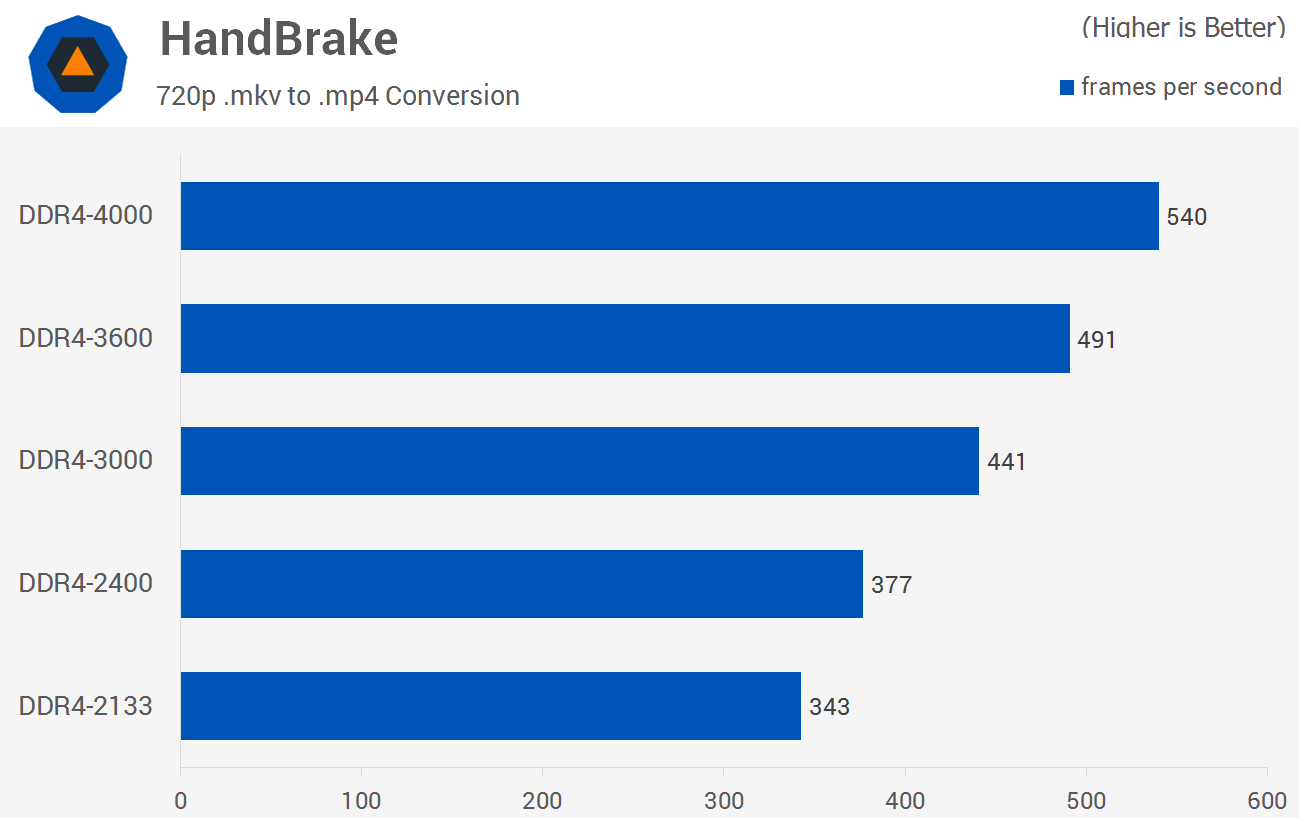

As GPUs get even more powerful (think 2017's Big Pascal/Vega), the CPU and DDR4 bottlenecks will become even greater. People buying an i5 6500 with DDR4-2133 or still using Sandy/Ivy Bridge i5/i7 CPUs are not accepting reality if they think their CPUs aren't limiting the full potential of GTX 1070 and faster cards at 1080p.

http://www.techspot.com/article/1171-ddr4-4000-mhz-performance/page4.html

This is the first time in 5 years or so that CPU and memory bottlenecking has become a very real issue that needs to be discussed, yet almost everyone is avoiding it because they are too busy discussing perf/watt and the launch of Pascal GPUs.

If one argues that they are using a 1080p 120-144Hz monitor, they will need the fastest CPU and DDR4 memory possible to get the most out of that monitor. OTOH, on a 1080p 60Hz monitor, this level of GPU power is simply wasted.
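
The refresh rate sets a per-frame time budget of 1000 / Hz milliseconds, and both the CPU and the GPU have to fit their share of each frame inside it. The sketch below assumes CPU and GPU work is pipelined (so the slower stage sets the pace) and that the frame rate is capped at the refresh rate; it ignores V-Sync queuing and variable-refresh details. The 8 ms CPU and 5 ms GPU frame times are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame to keep up with a given refresh rate."""
    return 1000.0 / refresh_hz


def frame_interval_ms(cpu_frame_ms: float, gpu_frame_ms: float, refresh_hz: float) -> float:
    """Time between frames, assuming pipelined CPU/GPU work and a frame cap at the refresh rate."""
    return max(frame_budget_ms(refresh_hz), cpu_frame_ms, gpu_frame_ms)


# Hypothetical per-frame costs: a fast GPU (5 ms) paired with a CPU that needs 8 ms per frame.
cpu_ms, gpu_ms = 8.0, 5.0

for hz in (60, 120, 144):
    interval = frame_interval_ms(cpu_ms, gpu_ms, hz)
    fps = 1000.0 / interval
    gpu_busy = gpu_ms / interval * 100.0  # share of each frame interval the GPU spends working
    print(f"{hz:>3} Hz: budget {frame_budget_ms(hz):5.2f} ms, "
          f"{fps:5.1f} fps, GPU busy {gpu_busy:4.1f}%")
```

With these made-up numbers, the GPU sits idle about 70% of the time at 60Hz, while at 144Hz the 8 ms CPU frame time, not the GPU, holds output to 125 fps, below the monitor's refresh rate.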

AMD needs to deliver the message that Polaris 10 is the perfect 1080p/60Hz card, while 1070- and Vega-level cards are for 1080p at 120-144Hz and for 1440p and above. But their marketing department isn't that competent...
