Info AMD CDNA Compute GPU architecture

- Thread starter soresu

- Start date

I think this is a smart move for AMD now that they have the money to support it.

Krteq

Golden Member

- May 22, 2015

- 1,010

- 730

- 136

ao_ika_red

Golden Member

- Aug 11, 2016

- 1,679

- 715

- 136

For slide deck: https://videocardz.com/newz/amd-promises-rdna-2-navi-2x-late-2020-confirms-rdna-3-navi-3x

Thanks to the cryptomining boom, we now see both companies moving back to a pre-GPGPU concept.

Not quite. RDNA still has general compute capabilities; it's just not focused on them. So there will likely never be a DP FP RDNA part, and it will lack the tensor acceleration of CDNA too, so ML will not run nearly as well or as efficiently on RDNA.

DisEnchantment

Golden Member

- Mar 3, 2017

- 1,779

- 6,798

- 136

I can imagine the first thing they will do is nerf Navi10's FP64 capabilities and remove lots of IF function blocks. Navi10 has a lot more FP64 throughput than Turing.

GodisanAtheist

Diamond Member

- Nov 16, 2006

- 8,549

- 9,983

- 136

I guess for now CDNA is just a label put on GCN (Vega). The true departure will happen somewhere down the road, my guess is with CDNA3.

-AMD seems to draw a distinction in their own slides and there are some serious changes under the hood as well.

They're ripping out all the rasterization HW used for pumping out graphics and replacing it with Tensor cores and other compute focused stuff.

CDNA launch video, kinda came outta nowhere seems like. Haven't seen very many people report on it/mention it

leoneazzurro

Golden Member

- Jul 26, 2016

- 1,114

- 1,867

- 136

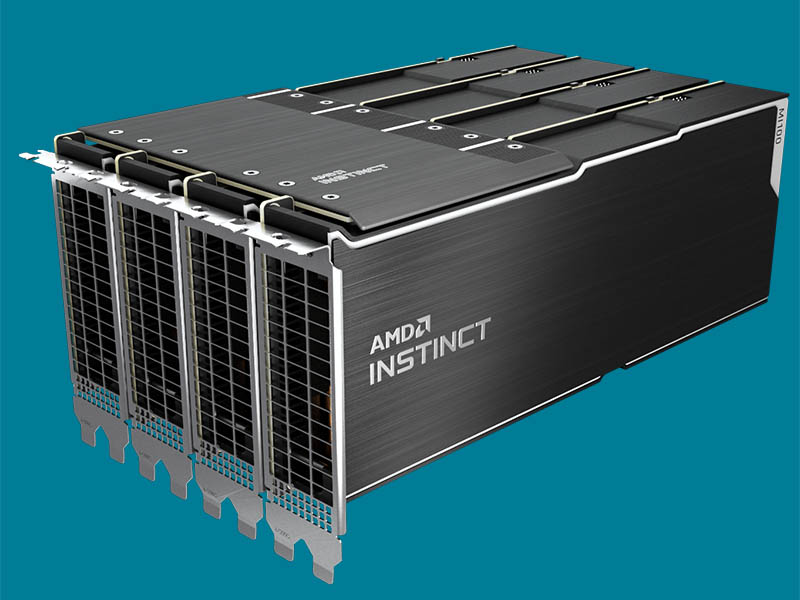

A look at Gigabyte/AMD HPC solutions featuring the Instinct MI100

NTMBK

Lifer

- Nov 14, 2011

- 10,526

- 6,051

- 136

Is there a write up of this anywhere?

Short write-up here:

AMD Instinct MI100 32GB CDNA GPU Launched

The AMD Instinct MI100 32GB GPU utilizes the company's CDNA architecture to achieve 11.5 TFLOPS and can form 4x GPU "hives" to scale

It's faster than A100 in 'traditional' fp32 and fp64 but slower in pure matrix/bfloat calculations. In mixed workloads, MI100 may have the advantage as well. A100 has more VRAM but MI100 should be considerably cheaper unless Nvidia adjusts price in response.
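
As a rough check on the headline number, here is a minimal sketch of how a peak vector throughput figure is usually derived. The 11.5 TFLOPS value comes from the write-up above; the 120 compute units, 64 lanes per CU, and 1.502 GHz peak clock are assumptions taken from AMD's published MI100 specifications rather than from this thread, and the half-rate FP64 ratio is likewise an assumption.

```python
def peak_tflops(compute_units, lanes_per_cu, clock_ghz, flops_per_lane_per_clock=2):
    """Theoretical peak in TFLOPS; an FMA counts as 2 FLOPs per lane per clock."""
    gflops = compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_ghz
    return gflops / 1000.0


# Assumed MI100 figures (not stated in the thread): 120 CUs, 64 lanes, 1.502 GHz.
fp32_tflops = peak_tflops(compute_units=120, lanes_per_cu=64, clock_ghz=1.502)

# Assumption: vector FP64 runs at half the FP32 rate on this part.
fp64_tflops = fp32_tflops / 2

print(f"FP32 peak: {fp32_tflops:.1f} TFLOPS")
print(f"FP64 peak: {fp64_tflops:.1f} TFLOPS")
```

With those assumed inputs the FP64 result rounds to the 11.5 TFLOPS quoted in the write-up. This is a theoretical ceiling only and says nothing about matrix/bfloat throughput.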

itsmydamnation

Diamond Member

- Feb 6, 2011

- 3,150

- 4,044

- 136

I did think I'd seen a few people here say that the A100 could somehow dual-purpose its tensor cores to give it a bunch more effective FP performance?

Yes, and they are wrong. I kept asking them to prove it and got crickets.

If you understand how a tensor core actually works, it's easy to understand why.

But the thing to also remember is memory/register pressure: the execution is largely the easy part; it's the data movement that costs. So A100, MI100, etc. will largely be designed so that they have the same or more execution resources than the bandwidth/register/cache read/write can feed, because execution resources are cheap and easy and data movement is expensive and hard, so they just aren't going to leave that performance on the table.

If you could rewrite your FMA code to be GEMM then of course that is a different situation.
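
The data-movement point can be sketched with a simple roofline estimate: attainable throughput is the smaller of the compute peak and bandwidth times arithmetic intensity. The 10 TFLOPS peak and 1 TB/s bandwidth below are round illustrative numbers, not specs of any card discussed here, and the GEMM traffic model assumes ideal reuse (each matrix touched once).

```python
def attainable_tflops(peak_tflops, bandwidth_tbs, flops_per_byte):
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_tflops, bandwidth_tbs * flops_per_byte)


def axpy_intensity(bytes_per_element=8):
    # y = a*x + y: one FMA (2 FLOPs) per element; read x, read y, write y.
    return 2 / (3 * bytes_per_element)


def gemm_intensity(n, bytes_per_element=8):
    # C = A @ B for n x n matrices: 2*n**3 FLOPs.
    # Idealized traffic: read A and B once, write C once.
    return (2 * n**3) / (3 * n**2 * bytes_per_element)


PEAK = 10.0      # TFLOPS, illustrative assumption
BANDWIDTH = 1.0  # TB/s, illustrative assumption

streaming = attainable_tflops(PEAK, BANDWIDTH, axpy_intensity())
dense = attainable_tflops(PEAK, BANDWIDTH, gemm_intensity(4096))

print(f"streaming FMA (axpy): {streaming:.3f} TFLOPS attainable")
print(f"GEMM, n=4096:         {dense:.3f} TFLOPS attainable")
```

Under these assumptions the streaming FMA kernel is bandwidth-bound at a small fraction of peak, while the GEMM is compute-bound, which is why extra execution resources only help code that can be restructured as GEMM.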

On a similar node, it must be at least twice as big as Vega 20. Would this be the closest AMD has ever come to a reticle limit chip?

They seem to be winning some big deals, but I guess if you're building a very expensive supercomputer you also have the money to hand-tune the software to run well on the machine. Where this will suffer is in selling to cloud vendors who rent them out, since their clients will still prefer CUDA to HIP.

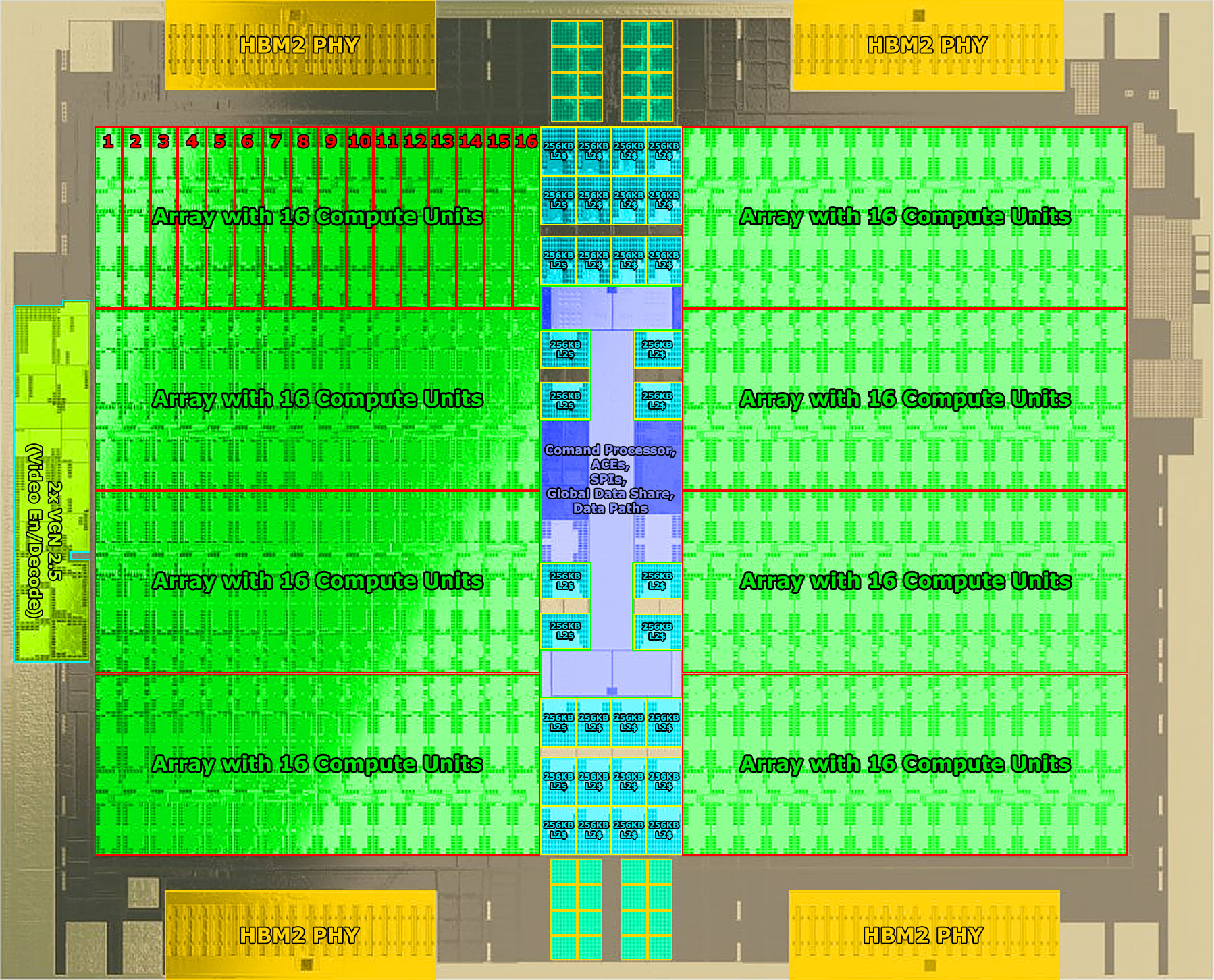

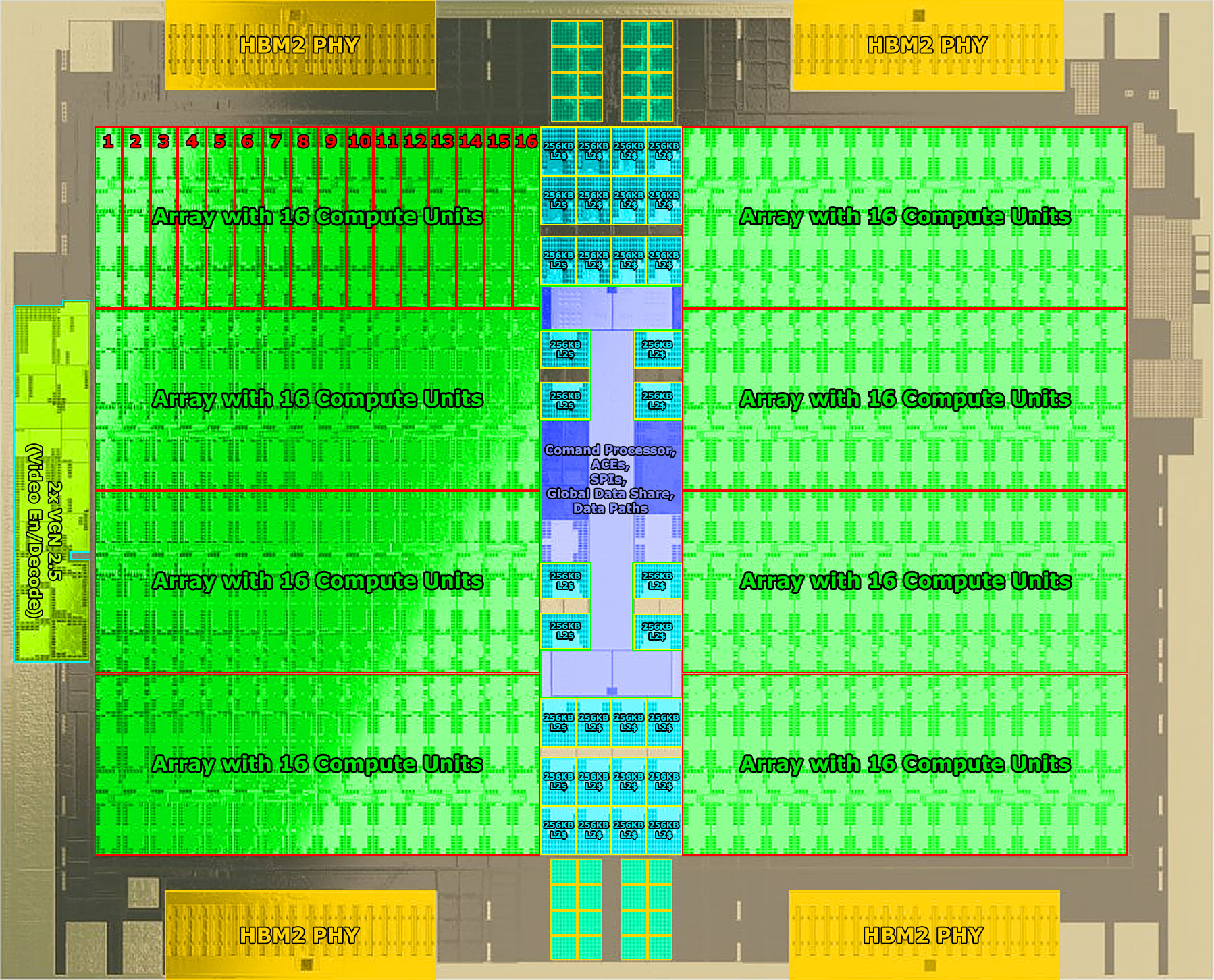

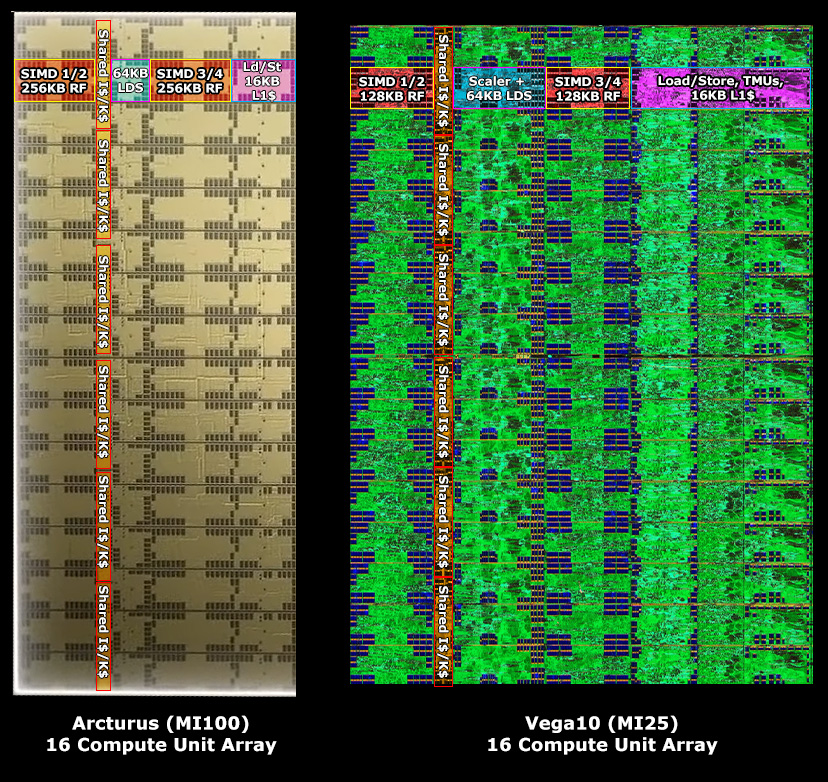

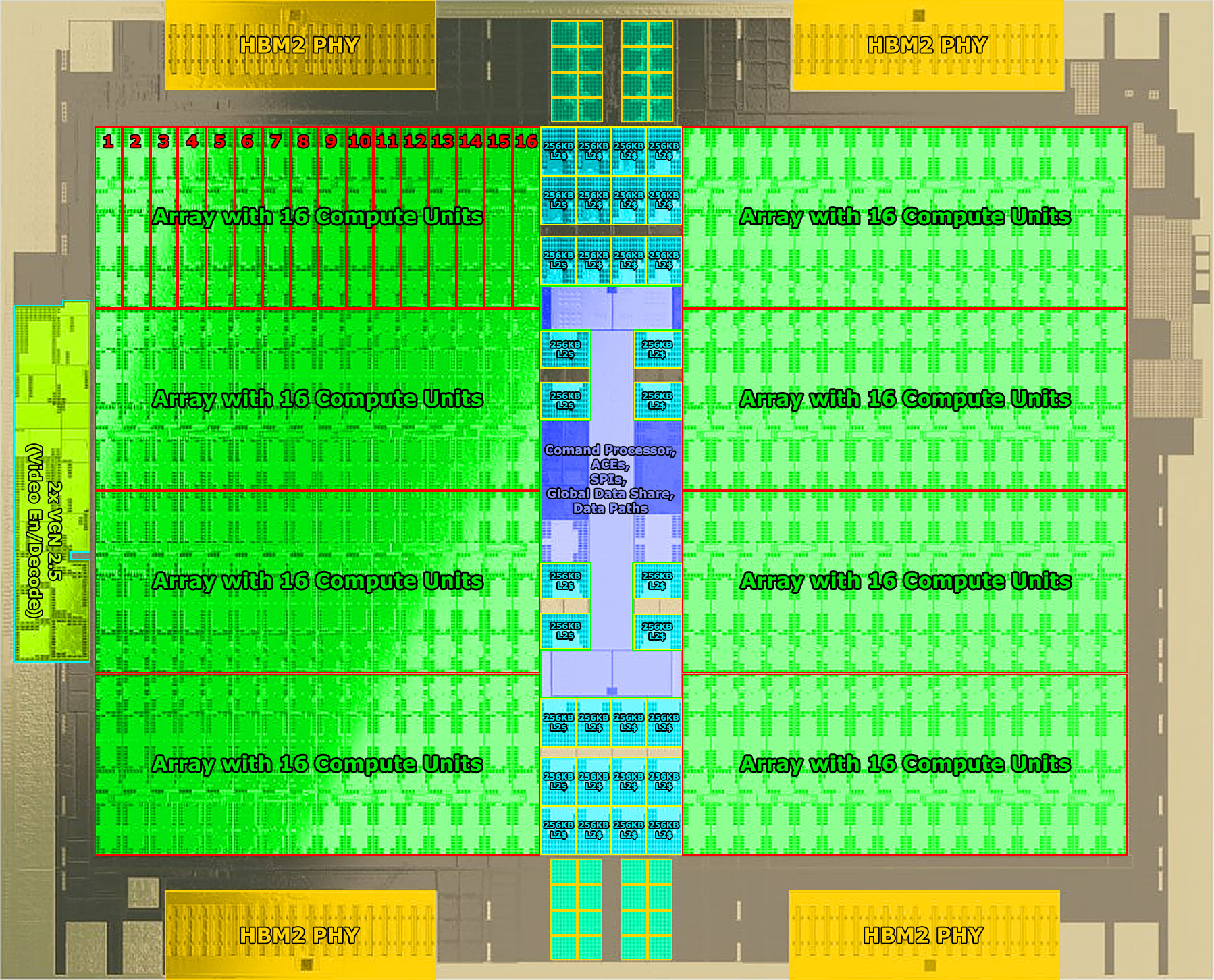

Arcturus/MI100 high level die shot annotations

This thing must be *massive*

-----------------------------------

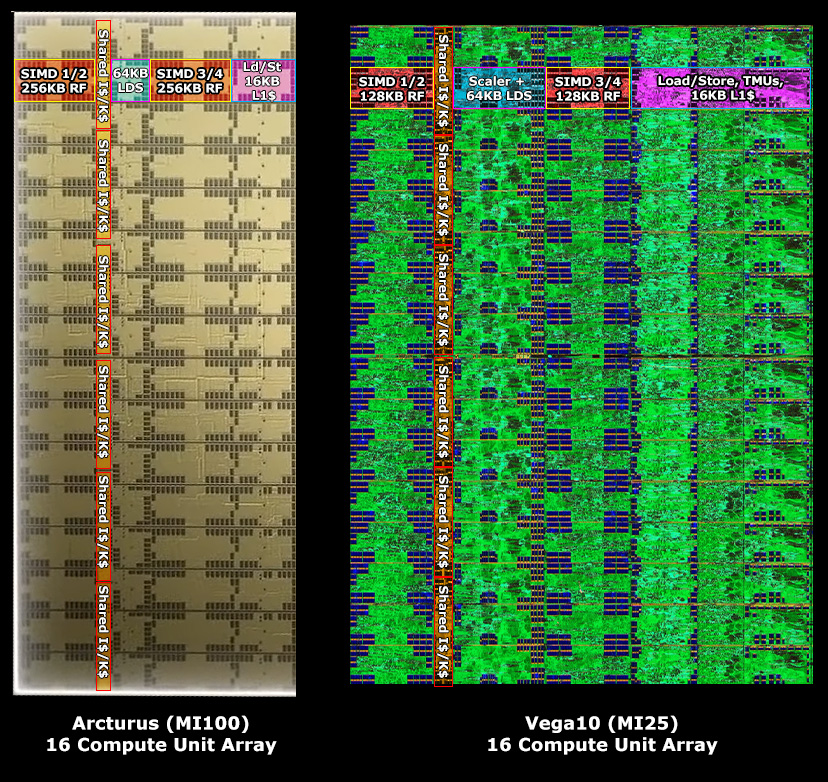

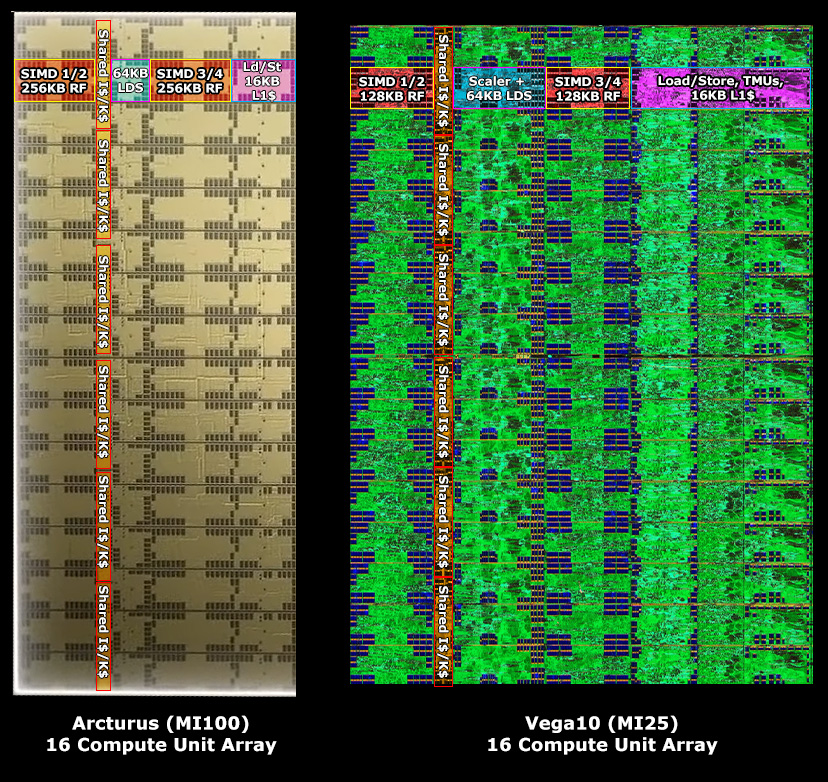

Compute Array comparison between Arcturus/CDNA/MI100 and Vega10/GCN5/MI25

Pure compute, no graphics capability

The entire point of HIP is portability from CUDA.

Not just to AMD hardware, but also back to CUDA from HIP if you wish, so you can keep a dual-hardware codebase if you don't mind it lagging the CUDA state of the art a bit.

Why is there supposedly VCN still included in these? Are they expected to be used for video decode/encode at all? Seems strange when all graphics capability has been stripped out.

Have you tried using it? There are many corner cases where HIPify doesn't actually work and you'll have to dig through and fix it manually. And as you say, you have to restrict yourself to CUDA 8, which hasn't been a big deal but might be a step back for some people.

It's basically their only shot to get Nvidia customers over to their side but they need people to use it. But no one wants to use it because Nvidia hardware isn't prohibitively expensive and the code we have written works as-is.
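
To illustrate the kind of source-to-source renaming being discussed, here is a toy sketch. It is not the real hipify tooling: `toy_hipify` and its small rename table are hypothetical, and only cover a handful of runtime calls whose HIP counterparts share the same name with a `hip` prefix. Anything starting with `cuda` that is missing from this toy table is reported back for manual attention, which mimics the "dig through and fix it manually" step.

```python
import re

# Toy subset of CUDA -> HIP renames; the real hipify tools cover far more.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Matches identifiers beginning with "cuda", plus an optional ".h" for headers.
TOKEN = re.compile(r"\bcuda\w*(?:\.h)?")


def toy_hipify(source):
    """Return (translated_source, names_left_for_manual_porting)."""
    leftovers = []

    def rename(match):
        name = match.group(0)
        if name in CUDA_TO_HIP:
            return CUDA_TO_HIP[name]
        leftovers.append(name)  # not in the toy table: leave untouched
        return name

    return TOKEN.sub(rename, source), leftovers


cuda_src = (
    "#include <cuda_runtime.h>\n"
    "cudaMalloc(&d_buf, n);\n"
    "cudaMemcpy(d_buf, h_buf, n, cudaMemcpyHostToDevice);\n"
    "cudaGraphLaunch(graph_exec, stream);\n"
)
hip_src, todo = toy_hipify(cuda_src)
print(hip_src)
print("needs manual porting:", todo)
```

The last call in the sample is deliberately absent from the toy table, so it survives unchanged and shows up in the manual-porting list.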

For machine learning applications which need to decode video, per the overview:

the AMD CDNA family retains dedicated logic for HEVC, H.264, and VP9 decoding that is sometimes used for compute workloads that operate on multimedia data, such as machine learning for object detection

Ok, that makes sense, thanks.

Saylick

Diamond Member

- Sep 10, 2012

- 4,154

- 9,696

- 136

I think people were already estimating it to be in the low to mid-700 mm² range for die size based on the size of the HBM PHYs, which seems kind of large to be honest. It's got 50% more CUs than Big Navi, which is estimated to be in the low 500 mm² range with a huge 128 MB LLC, yet it has all of the graphics pipeline stripped out (no TMUs, ROPs, geometry engines, etc.). Are the tensor cores and doubled register files really that space hungry? I wouldn't imagine so.

Stuka87

Diamond Member

- Dec 10, 2010

- 6,240

- 2,559

- 136

Vega20 still had rasterization hardware in it. It was a full blown GPU.

CDNA cards aren't video cards. They don't have the ability to output video. So yes, it will be larger than Vega20, but it is unlikely to be double the size, as it had lots of stuff removed that Vega20 had.
