Discussion Ada/'Lovelace'? Next gen Nvidia gaming architecture speculation


What do you mean "easily"? When all of TSMC's major clients are cutting chip orders? Nvidia is in a different situation in that they were late to the game and had to pre-commit billions of dollars in 5nm orders, apparently under contractual conditions that the others were not subject to?

We don't really know how much allocation is needed for consumer GPUs vs their HPC/cloud efforts though, do we?

And it's not like they couldn't easily find someone needing that allocation if they would be allowed to resell/release it if they found a partner.

I can think of several ways nvidia could be fine with this, so long as they have healthy cashflow.

There has been no mention whatsoever in the tech media in the last few weeks or months that anyone has decided to take on Nvidia's hoped-for cuts in chip orders.

NVIDIA is one of the companies that made prepayments to TSMC for their 5nm wafers. Unfortunately for NVIDIA, TSMC is not willing to make concessions. At best NVIDIA can count on wafer shipment delay for up to two quarters, but it’s NVIDIA’s problem to find customers for TSMC's vacated production capacity, which may be very hard given how demand has dropped for the whole sector.

Heartbreaker

Diamond Member


DLSS 3 is exclusive to rtx 4000, does this mean nvidia is no longer paying developers to put dlss 2 in games?

No reason to support old version of technology and all that.

I'd bet that all DLSS 3 games support DLSS 2 as a fallback, since the inputs are almost certainly the same for the overlapping functionality.

HurleyBird

Platinum Member


The first three games on the slide below are NOT on the DLSS 3 game list, so they can't be using frame interpolation to boost FPS:

Right, thanks. Doesn't make my brain any less confused though, because then the DLSS 3 FPS improvement looks too small in Darktide and MSFS vs Cyberpunk which was showing >1.5x. Assuming that Nvidia, being Nvidia, isn't going to show outliers where they perform poorly (only ones where they perform well) the question becomes: is Cyberpunk an outlier for DLSS 3 performance, or are RE:V, AC:V, and Division 2 outliers for non-DLSS 3 performance? When charts are this confusing, it usually means a company is trying to hide something.

4090 is about 1.5X 3090 Ti at rasterized performance without frame interpolation.

Almost certainly better than 1.5X with Ray Tracing

All of the titles in Nvidia's charts are Raytraced though. It looks like it's a bit over 1.5x with RT (or about 1.5x if you assume Nvidia is showing somewhat better than average titles), only the "next generation" RT titles show a huge improvement. So pure raster performance is almost certainly under 50% unless there's some really weird bottleneck going on with RT.

Edit: Faulty analysis. Betrayed by Google. See correction below.
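The argument above is really about summarizing a handful of per-title speedups while keeping frame-generation titles out of the native rendering comparison. A minimal Python sketch of one way to do that: a geometric mean of new/old FPS ratios that skips frame-generation titles by default. The function name and the sample numbers are hypothetical placeholders, not Nvidia's figures.

```python
import math

def geomean_speedup(results, exclude_frame_gen=True):
    """Geometric mean of new/old FPS ratios.

    results: iterable of (new_fps, old_fps, uses_frame_gen) tuples.
    Titles flagged as using frame generation are skipped by default,
    so they don't inflate a "native" rendering comparison.
    """
    ratios = [
        new / old
        for new, old, uses_fg in results
        if not (exclude_frame_gen and uses_fg)
    ]
    if not ratios:
        raise ValueError("no titles left to average")
    # Averaging logs and exponentiating gives the geometric mean,
    # which treats a 2x gain and a 0.5x loss symmetrically.
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

# Hypothetical (new_fps, old_fps, uses_frame_gen) entries for illustration only.
sample = [(150.0, 100.0, False), (90.0, 60.0, False), (200.0, 50.0, True)]
print(geomean_speedup(sample))                           # about 1.5
print(geomean_speedup(sample, exclude_frame_gen=False))  # about 2.08
```

With these made-up inputs, a single frame-generation outlier is enough to drag the combined figure from 1.5x to roughly 2.08x, which is the kind of distortion the posters are trying to tease out of Nvidia's charts.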


DisEnchantment

Golden Member


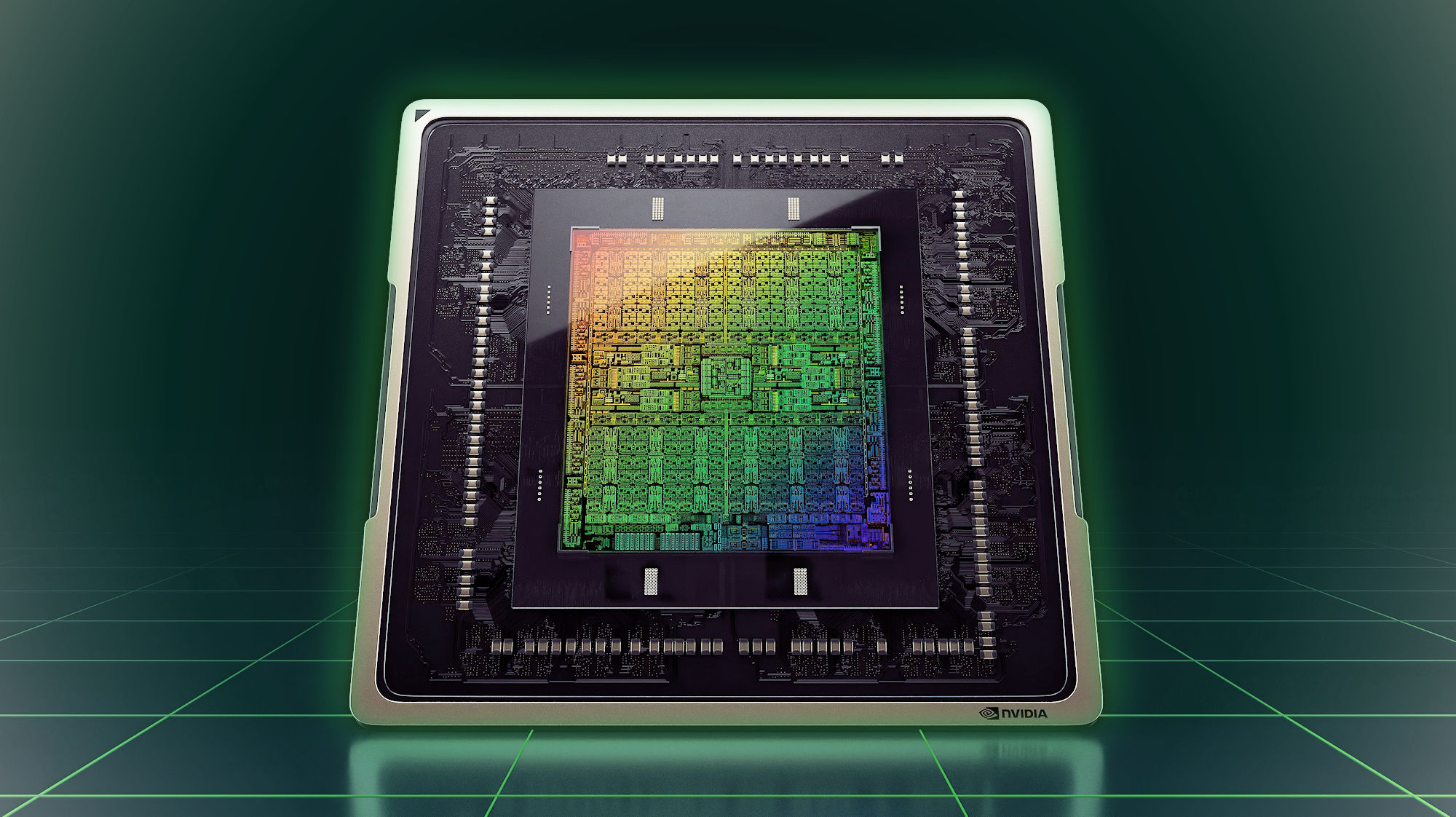

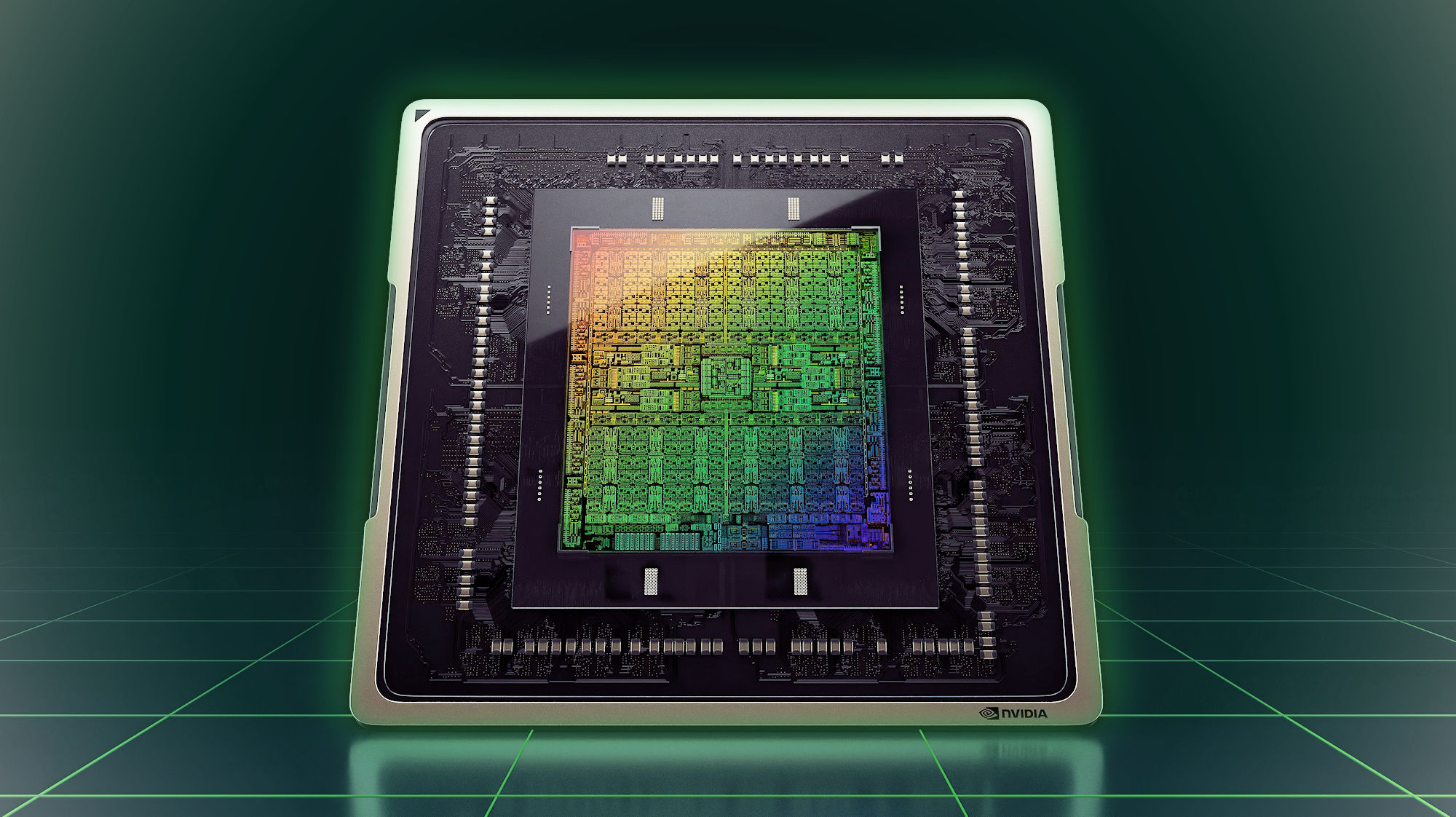

Your logic makes sense. We'll just have to wait until we get a teardown to confirm. Speaking of the die shot, I didn't see what should be an obvious 96 MB of L2 cache on the die shot provided by Nvidia. I know it's a render, but even the render didn't show an obvious bank of cache.

NVIDIA details AD102 GPU, up to 18432 CUDA cores, 76.3B transistors and 608 mm² - VideoCardz.com

NVIDIA AD102 GPU has 76.3B transistors The most transistor-packed consumer GPU yet. Not everything was revealed by NVIDIA today, but luckily for us, some board partners were quick to share the missing details. Today, NVIDIA introduced its Ada Lovelace architecture for next-gen gaming...

With that density, though, the clocks would be more restrictive. Interesting choices.
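For a sense of scale, the headline figures turn into a density number with simple arithmetic. A small Python sketch; the 144 SM × 128 FP32-lane breakdown of the 18432 CUDA cores comes from Nvidia's Ada material rather than the quoted article, and the density is a die-wide average that by itself says nothing about clocks or where the cache sits.

```python
def density_mtr_per_mm2(transistors, die_area_mm2):
    """Transistor density in millions of transistors per mm^2."""
    return transistors / die_area_mm2 / 1e6

def fp32_lanes(sm_count, lanes_per_sm):
    """Total "CUDA cores" = streaming multiprocessors x FP32 lanes per SM."""
    return sm_count * lanes_per_sm

# Figures from the VideoCardz summary quoted above.
AD102_TRANSISTORS = 76.3e9
AD102_DIE_MM2 = 608.0

print(f"{density_mtr_per_mm2(AD102_TRANSISTORS, AD102_DIE_MM2):.1f} MTr/mm^2")  # 125.5 MTr/mm^2
# Full AD102: 144 SMs with 128 FP32 lanes each.
print(fp32_lanes(144, 128))  # 18432
```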

Saylick

Diamond Member


Maybe AD102 doesn't have a large L2 at all... Maybe we have the same ol' 6 MB?

Seems the rumors are right.


Perhaps a blessing

Ohh!

DLSS 3.0 exclusive to RTX4000 series owners.

Heartbreaker

Diamond Member



There can be vastly different levels of Ray Tracing though. I expect CP2077 is using fairly extreme ray tracing, so multiplying big RT gains with DLSS 3 frame interpolation.

As far as MSFS, it's fairly CPU limited so almost all the gains might be coming from frame interpolation.
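Heartbreaker's two cases fit a toy model: the rendered frame rate is capped by whichever of the CPU or GPU is slower, and frame generation then doubles whatever gets rendered. A minimal Python sketch under those assumptions (one generated frame per rendered frame, generation treated as free); the function name and all the numbers are hypothetical.

```python
def displayed_fps(cpu_limit_fps, gpu_render_fps, frame_generation=False):
    """Toy model of displayed frame rate.

    Assumptions (hypothetical, for illustration):
    - the rendered frame rate is capped by whichever of CPU or GPU is slower;
    - frame generation inserts exactly one generated frame per rendered frame
      and costs nothing on either CPU or GPU.
    """
    rendered = min(cpu_limit_fps, gpu_render_fps)
    return rendered * 2 if frame_generation else rendered

# CPU-bound case (MSFS-like): a faster GPU alone changes nothing.
print(displayed_fps(60, 70), displayed_fps(60, 120))   # 60 60
print(displayed_fps(60, 120, frame_generation=True))   # 120
# GPU-bound case (heavy RT): GPU gains and frame generation multiply.
print(displayed_fps(100, 20), displayed_fps(100, 45, frame_generation=True))  # 20 90
```

In the CPU-bound case the entire uplift comes from frame generation; in the GPU-bound case the raw GPU gain and the frame-generation doubling stack, which matches the intuition about CP2077 above.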

Heartbreaker

Diamond Member



Yeah, I don't think I would shed any tears over the lack of inserted, interpolated frames.

HurleyBird

Platinum Member



OK, gotta correct myself on this one. Valhalla and Division 2 don't support ray tracing. A Google search for "Valhalla/Division 2 raytracing" makes it appear like they do, but in all of the top hits "ReShade" is hidden behind the ellipsis. D'oh.

Not interpolated, futurepolated: DLSS 3 is making up a frame that it thinks would be displayed if the GPU actually had that frame rate.
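To make the interpolation vs "futurepolation" distinction concrete, here is a Python sketch that tracks a single object's screen position (a hypothetical 1D stand-in for a whole frame). Which of the two DLSS 3 actually does is the point being argued in the thread; these functions are illustrative only, not Nvidia's method.

```python
def interpolate(pos_prev, pos_next, t=0.5):
    """Blend between two already-rendered frames.

    Needs the *next* frame, so the in-between frame can only be shown
    once that next frame has been rendered.
    """
    return pos_prev + (pos_next - pos_prev) * t

def extrapolate(pos_older, pos_prev, t=0.5):
    """Predict forward from past frames only (constant-velocity guess).

    No waiting on a future frame, but the guess is wrong whenever
    motion changes direction or speed.
    """
    return pos_prev + (pos_prev - pos_older) * t

# Object moving steadily: positions 0, 10, 20 in three rendered frames.
print(interpolate(10, 20), extrapolate(0, 10))   # 15.0 15.0
# Object bouncing back: positions 0, 10, 0.
print(interpolate(10, 0), extrapolate(0, 10))    # 5.0 15.0
```

The trade-off shows up immediately: for steady motion both agree, but when the object reverses, extrapolation confidently draws it in the wrong place, while interpolation is correct at the cost of waiting for the next real frame.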

SteveGrabowski

Diamond Member



Nice to know if I buy into an Nvidia feature it'll be replaced in a couple of years.

Frenetic Pony

Senior member



No idea why it's 4xxx only; typical Nvidia [redacted]. There's been work on frame in-betweening for years now. Fun fact: that's actually what started the whole TAA thing to begin with (make 30fps into 60!), and only later was the research converted to TAA after figuring out that was easier.

Anyway, there's nothing really particular about 4xxx to accelerate it. Way to royally [redacted] over your own customer base, Nvidia.

In real terms the 2x (ish, almost, kinda) performance boost was correct. And the customers won't be [redacted] for long: frame in-betweening isn't anything magic, and AMD and Intel will both have FSR 3 or whatever soon enough. Heck, Nvidia isn't even the first to introduce practical real-time optical flow modelling; some bored hobby hacker has had it published on GitHub for months (over a year?) now. It means you can add stuff like TAA and frame in-betweening even to games that don't have it.
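The temporal-accumulation idea at the heart of TAA is simple enough to sketch: each new frame's samples are blended into a running per-pixel history, so noise averages out over time. A minimal Python sketch; the function name and the `alpha` value are hypothetical choices, it is not any particular engine's TAA, and it says nothing either way about the origin story above.

```python
def accumulate(history, current, alpha=0.1):
    """Blend this frame's samples into the running per-pixel history.

    Exponential moving average: new = history + alpha * (current - history).
    Real TAA also reprojects the history using motion vectors and clamps it
    against the current frame's neighbourhood; both are omitted here.
    """
    return [h + alpha * (c - h) for h, c in zip(history, current)]

# A single pixel whose true value is 1.0, starting from an empty history.
history = [0.0]
for _ in range(20):
    history = accumulate(history, [1.0], alpha=0.2)
print(round(history[0], 3))  # 0.988
```

A smaller `alpha` averages over more frames (smoother, but slower to react and prone to ghosting); a larger one reacts faster but filters less, which is why real implementations add reprojection and history clamping.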

Profanity isn't allowed in the tech forums.

AT Mod Usandthem


A bit late to the party - but I love the naming scheme.

I hope Nvidia goes all in with their new naming scheme and does a 4080 10GB, 4080 8GB and 4080 6GB to complete the lineup instead of the regular 4070/60/tis.


moonbogg

Lifer


Maybe Nvidia will give us a 4060 for $700 that performs like a 3070. Here's to hoping.

Saylick

Diamond Member


An Nvidia employee who worked on DLSS sheds some light on how it works, along with any concerns about latency.

I think he's using some fancy words there, but I think the gist is what we've already discussed. The interpolated frames do NOT utilize the rendering pipeline at all and so there is no added input lag to generate the interpolated frame. I mean, this makes sense because all interpolation needs is just the key frames, which are already rendered. Zero additional input lag makes sense because, again, the interpolated frame is generated based off of rendered key frames. He then says that the higher fps has zero latency cost, which is a roundabout way of saying the input lag is just based off of the key frames only. At the end of the day, DLSS 3.0 is fancy motion smoothening, not unlike what you got on your TV from years back. Biggest improvement is that it's for a video game and the interpolated frame is comparable in quality to the key frames.

Looks like the OFA (Optical Flow Accelerator) has been part of the architecture since Turing, but only in Ada is it fast enough to enable DLSS 3.0...
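Optical flow just means estimating where each part of the image moved between two frames; once that is known, an in-between frame can be synthesized from the two key frames without running the renderer again, which is the gist of the post above. A deliberately tiny Python sketch on a single 1D scanline, using one global motion vector found by block matching. The names (`estimate_shift`, `warp`, `midpoint_frame`) are hypothetical, and this is not how the OFA hardware or DLSS 3 work; real implementations estimate per-pixel or per-block flow and have to deal with occluded and newly revealed pixels.

```python
import math

def estimate_shift(prev, nxt, max_shift=4):
    """Find one global motion vector for a 1D scanline by block matching.

    Tries every shift in [-max_shift, max_shift] and keeps the one with the
    lowest mean absolute difference over the overlapping pixels.
    """
    n = len(prev)
    best_shift, best_cost = 0, math.inf
    for s in range(-max_shift, max_shift + 1):
        diffs = [abs(prev[i] - nxt[i + s]) for i in range(n) if 0 <= i + s < n]
        if diffs:
            cost = sum(diffs) / len(diffs)
            if cost < best_cost:
                best_cost, best_shift = cost, s
    return best_shift

def warp(frame, shift):
    """Move a scanline by a (possibly fractional) shift, clamping at the edges."""
    n = len(frame)
    out = []
    for i in range(n):
        x = min(max(i - shift, 0), n - 1)  # source position to sample
        lo = math.floor(x)
        hi = min(lo + 1, n - 1)
        frac = x - lo
        out.append(frame[lo] * (1 - frac) + frame[hi] * frac)  # linear resample
    return out

def midpoint_frame(prev, nxt, max_shift=4):
    """Synthesize the in-between scanline by moving prev halfway along the flow."""
    return warp(prev, estimate_shift(prev, nxt, max_shift) / 2)

prev = [0, 0, 5, 9, 5, 0, 0, 0, 0, 0]   # bright blob centred at pixel 3
nxt  = [0, 0, 0, 0, 5, 9, 5, 0, 0, 0]   # same blob two pixels to the right
print(estimate_shift(prev, nxt))         # 2
print(midpoint_frame(prev, nxt))         # blob now centred at pixel 4
```

Only the two rendered scanlines are used as input, so the generated one adds frames on screen without any new input being sampled.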


Here's DLSS 3.0 in action. I think the fact that in both scenarios the user drives straight ahead likely means this is the best case scenario for image stability of the interpolated frames.

Wow, yes amazing. Its like so super faster. Like OMG dudes, we must totally get DLSS 3.0 now. The more you buy the more you game faster.

Because liek bro, you totally would run RT on with no DLSS 2.0. Its like gotta be the original frames bro. None of that like deep super sampling AI stuff maan. I want the real deal. But like 3.0 has like all them interpollations stuff n' junk so its legit. Its interpreting the real flows bro.

In2Photos

Golden Member


I still play at 1080p. Not everyone has the hardware to run 1440p. According to Steam, the GTX 1060 was still the most popular card until just recently. And there are many competitive Fortnite players who still run at 1080p.

I don’t know of a single gamer, competitive or otherwise, that still runs 1080p. Most run 1440p or some form of ultrawide monitor. I even know a pro Dota player, and he runs 1440p. Most “competitive” games have been playable at high framerates with resolutions up to 4K since the 1080 Ti.

Outside of that, this whole launch was “meh”. Sticking with my 3090 and waiting for AMD’s announcement.
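For the 1080p vs 1440p vs 4K argument, raw pixel counts give a rough sense of the extra shading work per frame. A quick Python sketch; treating pixel count as a proxy for GPU load is a simplifying assumption (CPU-bound competitive titles in particular won't scale this way).

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(name):
    width, height = RESOLUTIONS[name]
    return width * height

def relative_pixels(name, baseline="1080p"):
    """How many times more pixels `name` has than `baseline`."""
    return pixel_count(name) / pixel_count(baseline)

for name in RESOLUTIONS:
    print(name, pixel_count(name), round(relative_pixels(name), 2))
# 1080p 2073600 1.0
# 1440p 3686400 1.78
# 4K 8294400 4.0
```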

Welp, it looks like the high-end air and AIO-cooled 4090s from all the AIBs will be $1,800-2,000 and the 4080 16GB will be in the $1,300-1,500 range including tax (in the US). Obviously, the flagship at or under $1,000 is a thing of the past. Maybe these things are more expensive to engineer and produce, I don't know. Obviously, the power of these things is much greater. (I remember when I got the 580 3GB for $600 at launch and thought I had the most powerful GPU on the planet and bragging rights, lol)

But, if the performance numbers touted are true (will wait to see reviews) then these prices are not too bad, I guess. I've come to the conclusion that if you want the latest and greatest, it's going to cost you in the thousands. I really don't think AMD's new offerings are going to be cheaper either. Hell, we just spent in the thousands on a freaking refrigerator.

From now on I'm just going to hang back a generation. I just got a 3090 for $999 and a 6900 XT for $760 for our gaming computers. These were the prices I was willing to pay without any buyer's remorse. I don't buy used either. We wanted to play our games at 4K ultra and these GPUs do the trick, for the most part. We'll get some use out of these, and then I'll either hand these two GPUs down to my son and daughter or sell them, then wait until we have some money to blow and buy when I don't feel I'll have buyer's remorse.


I used to think it was silly to get so little "extra" for so much money. Now I can appreciate the mindset of buying on top and staying there, because why not?

If you've got some disposable income and want to make this a priority, well then by all means. It's still a relatively cheap hobby.

My thoughts too. People will buy it, because why not? I generally want a specific game or new feature to justify a new card though. I can use it for RT 4K and VR sims, but can't think of a specific game (that I haven't already played) where I would want to use it. Maybe the Cyberpunk DLC or Starfield. It would shine in VR but there is not much new PC VR content anymore. Also, the heat output is concerning to me regardless of price. My current PC already puts out too much heat for my liking.

Basically you'll have the latency of slower fps with the illusion of faster fps. I wonder if, afterwards, he realized what he actually said.


People will buy it, because why not?

"The MORE we buy, the MORE we save... "

Book of Jensen Verse 1 Chapter 1.

I probably won't get a launch 4090, as mining is dead.

I will wait for it to mature a bit, let the AIBs fine-tune the PCB, and then see what pretty waterblocks are available for whatever cards come out.

I really want a white PCB card as my next 4090 tho, or something like a Sakura Edition Colorful, since my PCs have always been given female names.

Thunder 57

Platinum Member


Clearly NVIDIA wants to be the new Apple. That way they can sell a $999 ~~monitor stand~~ video card stand that keeps the video card from falling off the motherboard.

Saylick

Diamond Member


Yeah, I was looking over his replies on Twitter to see if he was just giving the Nvidia marketing spiel or if he truly believes what he says, and it seemed to lean towards the former. Too much use of marketing terms for me to think otherwise.

Regardless, "illusion of higher fps" is an apt way to put it. All I know is this, moving forward: Not all fps is created equal. Will there be scenarios where it is preferable to have 40 fps without DLSS 3.0 over 80 fps with DLSS 3.0 at the same input lag? The selling point, if done right, is that there would be zero cost to enabling DLSS 3.0. We'll have to see in-depth testing to find out...
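The 40 fps vs 80 fps question can be framed with a toy model: frame generation multiplies what's displayed, but responsiveness is approximated by the *rendered* frame time. A minimal Python sketch; the function names and the `hold_frames` knob are hypothetical. The latency figure is only a stand-in, since real input-to-photon latency also includes the CPU, render queue and display, none of which is modeled.

```python
def frame_time_ms(fps):
    """Time between frames in milliseconds."""
    return 1000.0 / fps

def frame_gen_summary(rendered_fps, generated_per_rendered=1, hold_frames=0.0):
    """Toy model of frame generation: (displayed_fps, latency_proxy_ms).

    Assumptions (hypothetical):
    - input is only sampled for rendered frames, so responsiveness is
      approximated by the rendered frame time, not the displayed one;
    - hold_frames optionally adds extra buffering, in units of rendered frames
      (0.0 matches the "zero added latency" claim; raise it to test the skeptics).
    """
    displayed = rendered_fps * (1 + generated_per_rendered)
    latency_proxy = frame_time_ms(rendered_fps) * (1 + hold_frames)
    return displayed, latency_proxy

print(frame_gen_summary(40))                             # (80, 25.0)
print(frame_gen_summary(80, generated_per_rendered=0))   # (80, 12.5)
print(frame_gen_summary(40, hold_frames=0.5))            # (80, 37.5)
```

Both of the first two cases show 80 fps on screen, but in this model the frame-generated one still responds like 40 fps, which is the "not all fps is created equal" point.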

Thunder 57

Platinum Member


Basically, all AMD (or even Intel) has to do now is a repeat of this:


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.