And I need no more convincing at this point to believe that you simply don't know what averages are and how they work.

I know exactly how averages work. I just think it's counterproductive to introduce additional unnecessary variables into a test. Why use mainstream Broadwell, when the HEDT version has the exact same cache hierarchies as HEDT Haswell CPUs?

Even accounting for situations like these won't change the average results, which you've all but proven yourself incapable of understanding. I don't give much credence to Kraken, Google Octane, or any other web-based benchmark. This situation is exactly reminiscent of the CPU-Z benchmark before it was patched some time after Ryzen launched.

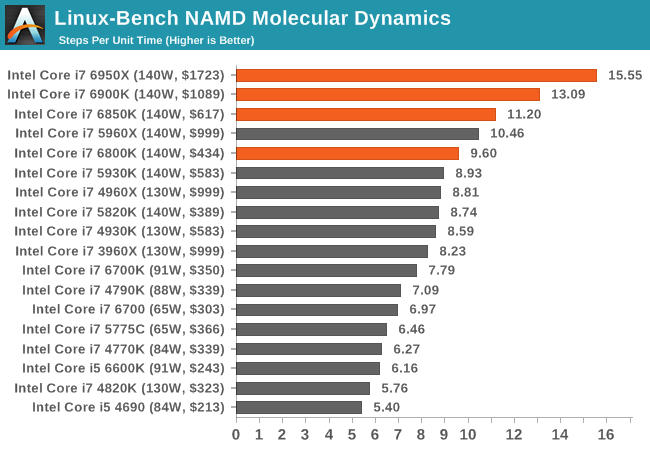

What you really mean to say is, I don't like the results and what they mean for my argument, therefore I'm going to try my best to undermine and ignore them. I have to wonder, what is your excuse for the NAMD molecular dynamics test, which is an extremely parallel and extremely scalable scientific application? This one benchmark destroys your entire narrative and supports mine, which is why you continually refuse to acknowledge it, thus making you an intellectually dishonest person.

And yet all you're doing is convincing yourself that those cumulative changes Intel talked about *must* be what is affecting the performance in this *one* benchmark. When actually you're in the same boat as me, since you and I both know diddly squat about what this benchmark is doing under the hood to explain the observed performance difference. The difference being that I have the objectivity to include this result in the average, while you've convinced yourself that this one result is *undeniably* due to the things Intel talked about, which really shows the difference in how we each interpret data. I'm simply saying that it does not change the overall outcome by that much, while with you it's only about reinforcing your confirmation bias.
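For anyone following along, the "one result barely moves the average" claim is easy to check for yourself. Below is a rough sketch, not anything taken from the actual review data: the per-benchmark ratios are made-up placeholders (new CPU score divided by old CPU score), `mean_shift` is a hypothetical helper name, and the geometric mean is assumed as the averaging method, since that is the usual choice for performance ratios.

```python
from statistics import geometric_mean


def mean_shift(ratios, outlier_index):
    """Return (mean of all ratios, mean with the outlier left out)."""
    with_all = geometric_mean(ratios)
    # Rebuild the list without the one benchmark under dispute.
    trimmed = [r for i, r in enumerate(ratios) if i != outlier_index]
    without = geometric_mean(trimmed)
    return with_all, without


# HYPOTHETICAL ratios, not measurements: five ordinary results and one outlier.
ratios = [1.03, 1.05, 1.04, 1.06, 1.02, 1.25]
with_all, without = mean_shift(ratios, outlier_index=5)
print(f"with outlier: {with_all:.3f}, without: {without:.3f}")
```

How far a single result pulls the mean depends on how many benchmarks are in the set and how extreme that result is, so plug in the real numbers before drawing conclusions either way.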

Unlike you, I am a rational man. If two benchmarks exhibit the same behavior pattern where a new architecture has a substantial lead over the older one, one can assume it's due to the following reasons:

1) Increased operating speed of the CPU

2) Benchmark's support for newer instructions

3) Micro-architectural tweaks and optimizations

We can rule out number 1 because, as I said in my previous post, even if you assume the best case scenario of perfect linear scaling with clock speed, the clock increase still doesn't account for the performance increase. For number 2, Broadwell and Haswell support the same instructions with the exception of TSX, which Broadwell-E supports. Obviously the TSX instructions aren't used in those benchmarks, as TSX is geared towards multithreaded applications and Kraken and Octane definitely don't appear to be multithreaded in any meaningful manner.
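To spell out the clock speed check, here is a minimal sketch under stated assumptions. The clocks and scores are placeholder values, not the figures from the benchmarks discussed, `unexplained_gain` is a hypothetical helper name, and scores are assumed to be higher-is-better (a time-based result such as Kraken's would need to be inverted first).

```python
def unexplained_gain(old_clock_ghz, new_clock_ghz, old_score, new_score):
    """Return the speedup left over after crediting perfect clock scaling.

    A result above 1.0 means the score rose by more than the clock
    increase alone could explain, even in the best case.
    """
    clock_ratio = new_clock_ghz / old_clock_ghz  # best-case linear scaling
    score_ratio = new_score / old_score          # observed speedup
    return score_ratio / clock_ratio


# HYPOTHETICAL inputs for illustration only.
residual = unexplained_gain(old_clock_ghz=3.5, new_clock_ghz=3.6,
                            old_score=100.0, new_score=120.0)
print(f"gain not explained by clock speed: {residual:.3f}x")
```

Dividing the two ratios is the generous version of the test, since real workloads rarely scale perfectly with frequency. Anything left above 1.0 has to come from somewhere other than clock speed.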

Which leaves number 3 as the only possible explanation. Feel free to contradict me with your emotional pleas about not trusting the benchmarks and blah blah blah, but you have not one leg to stand upon. The fact that you still continue to debate me on this is highly amusing, and just reinforces my already low opinion of you.