
The similarity is uncanny. Both employ former AnandTech writers. But I am still hopeful Qualcomm will talk about this chip at Hot Chips.


Snake oil and core truths...

This is a good naming scheme.

Take note AMD: generation means generation not release year.

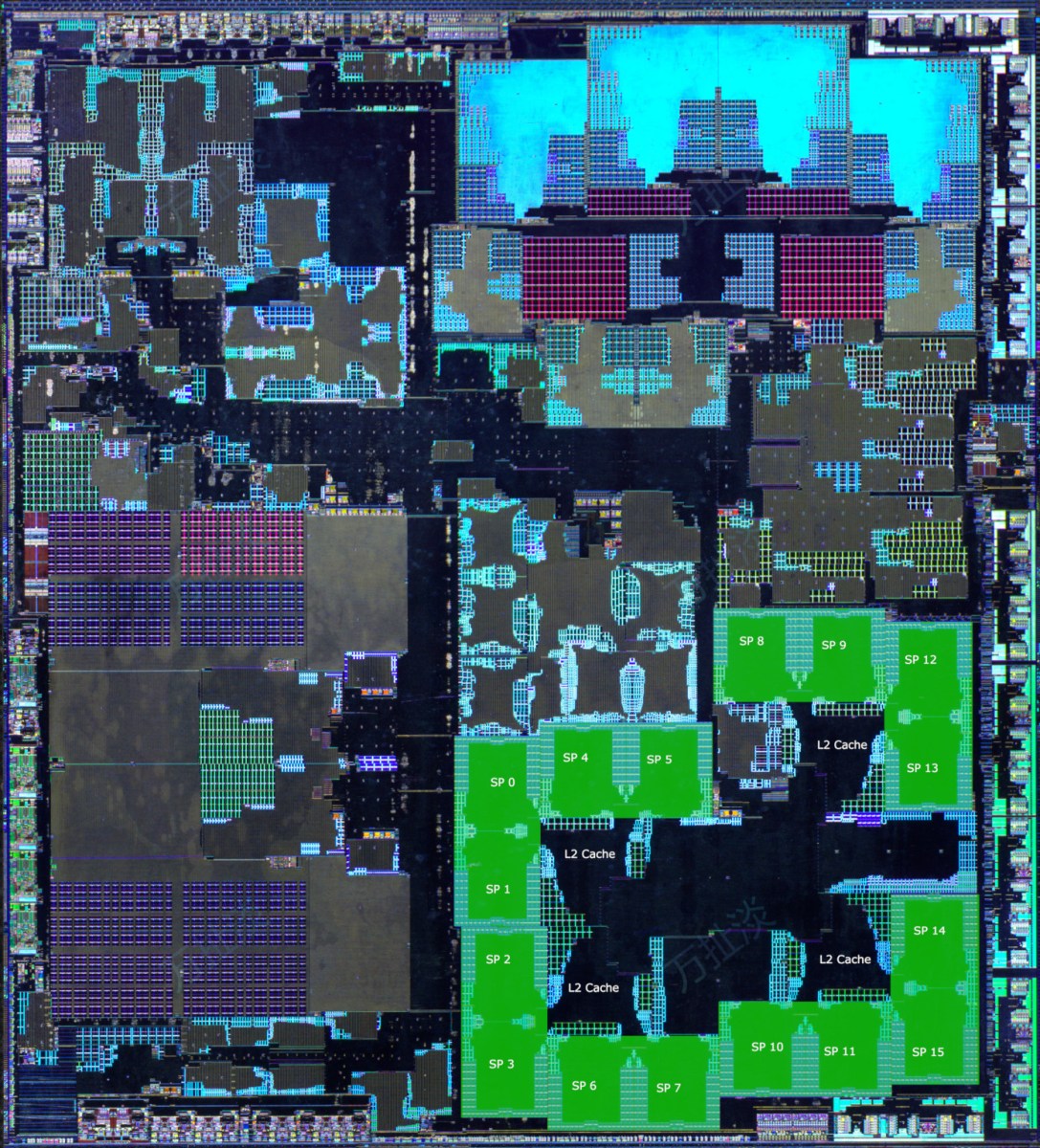

chipsandcheese.com


I am yet to confirm the authenticity of this slide, but it is showing the X Elite consuming almost 70W in Cinebench 2024 MT, which is far higher than the 40W in Geekbench 6 MT.

Compared to their October demos, however, there are a couple of important points to point out – areas where the chip specs have been downgraded. First and foremost, the peak dual core clockspeed on the chip (what Qualcomm calls Dual Core Boost) will only be 4.2GHz, instead of the 4.3GHz clockspeeds we saw in Qualcomm’s early demos. The LPDDR5X memory frequency has also taken an odd hit, with this chip topping out at LPDDR5X-8448, rather than the LPDDR5X-8533 data rate we saw last year. This is all of 85MHz or 1GB/second of memory bandwidth, but it’s an unexpected shift since 8448 isn’t a normal LPDDR5X speed grade.
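For anyone who wants to sanity-check the bandwidth figure in that quote, here is a rough sketch. It assumes a 128-bit memory bus, which is not stated anywhere in this thread, and the function and constant names are made up for illustration. The "85MHz" in the quote is treated as an 85 MT/s difference in data rate.

```python
# Peak theoretical bandwidth = transfers per second * bytes per transfer.
# ASSUMPTION: 128-bit LPDDR5X bus; the thread does not state the bus width.
BUS_WIDTH_BITS = 128


def peak_bandwidth_gbs(mega_transfers_per_s: int,
                       bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Return peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


october = peak_bandwidth_gbs(8533)  # data rate seen in the October demos
april = peak_bandwidth_gbs(8448)    # data rate in the updated specs
delta_gbs = october - april

print(f"LPDDR5X-8533: {october:.1f} GB/s")
print(f"LPDDR5X-8448: {april:.1f} GB/s")
print(f"Difference:   {delta_gbs:.2f} GB/s ({8533 - 8448} MT/s)")
```

Under that bus-width assumption the gap comes out to roughly 1.4 GB/s, or about 1% of peak bandwidth, which is in the same ballpark as the "1GB/second" in the quote.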

Clocks going up to follow node gains is fine, pushing further not so much.

And this is why I have been saying all along that X Elite G2 and its Pegasus core should not increase the clock speed, and should arguably decrease it. Pushing the clocks up so hard is antithetical to a philosophy of efficient computing. In such a philosophy, performance gains should come from IPC increases, not clock speed increases.

You’re reading too far into this without thinking through it. They didn’t, they’re just not showing it at those points in

Yeah, how did they reduce the power consumption by 10W...

October 2023

View attachment 97766

March 2024

View attachment 97768

April 2024

View attachment 97769

Yeah I agree. AMD’s is a mess.

This is a good naming scheme.

Take note AMD: generation means generation not release year.

This slide is causing some controversy in certain circles.

View attachment 97750

I am yet to confirm the authenticity of this slide, but it is showing the X Elite consuming almost 70W in Cinebench 2024 MT, which is far higher than the 40W in Geekbench 6 MT.

During a Cinebench 2024 test, the M3 Pro on normal power mode used a max of 29 W on the CPU and 17 W on the GPU, while the 16-core M3 Max used a max of 54 W on the CPU and 33 W on the GPU.

www.notebookcheck.net


So X Elite consumes more power than M3 Max in CB2024, while also delivering less performance.

The small M3 Pro with 11 CPU cores consumes around 24 watts (M2 Pro with 10 cores: around 27 watts); the M3 Pro with 12 CPU cores consumes about 27 watts (M2 Pro with 12 cores: around 34 watts). More performance with lower consumption naturally means significantly better efficiency, and the new M3 Pro is clearly the leader in this respect.

We have so far only been able to test the top-of-the-range version of the M3 Max with 16 CPU cores and its performance advantage over the M3 Pro and the old M2 Max is a whopping 54-56 %, meaning Apple has finally gotten rid of a major point of criticism that the old models had. Although its power consumption has also increased significantly (M3 Max up to 56 watts; M2 Max up to 36 watts), its efficiency is still slightly better than the M2 Pro/M2 Max — but the M3 Max didn't come close to the M3 Pro's efficiency in our multi-core tests.

Time to change out of the QC T-shirt and start wearing the Apple cheerleading costume

So X Elite consumes more power than M3 Max in CB2024, while also delivering less performance.

Unsure if I missed the discussion on this, but Qualcomm interestingly ever so slightly lowered their memory speeds for the X Elite.

The GB6.2 1T perf guidance has also been lowered, and Qualcomm is no longer mentioning their previous 3.2K runs. These are all presumably in the 80W device TDP config.

October 23, 2023:

QCOM says Oryon hits 3227 pts (100%) - ? clocks on ?

October 30, 2023:

QCOM says SXE hits 2939 to 2979 pts (91% to 92%) - 4.3 GHz boost on Windows

QCOM device shows SXE hitting 3236 (100%) - 4.3 GHz boost on Linux

April 24, 2024:

QCOM says SXE hits 2850 to 2900 (88% to 90%) - 4.2 GHz boost on ?
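The percentages in that timeline can be reproduced with a few lines, taking the October 23 figure of 3227 as the 100% baseline. This is just a sketch of the arithmetic; the labels and variable names are mine, not Qualcomm's.

```python
# GB6 1T scores from the timeline above, relative to the October 23 figure.
BASELINE = 3227

# (label, low score, high score); a single score is listed as low == high.
claims = [
    ("Oct 30, Windows", 2939, 2979),
    ("Oct 30, Linux", 3236, 3236),
    ("Apr 24", 2850, 2900),
]


def pct_of_baseline(score: int, baseline: int = BASELINE) -> float:
    """Score as a percentage of the baseline score."""
    return 100 * score / baseline


relative = {
    label: (round(pct_of_baseline(low)), round(pct_of_baseline(high)))
    for label, low, high in claims
}

for label, (low_pct, high_pct) in relative.items():
    print(f"{label}: {low_pct}% to {high_pct}% of {BASELINE}")
```

So the latest guidance sits roughly 10 to 12% below the number Qualcomm first promoted.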

I'm all for limiting boost clocks, but it's funny that Qualcomm promoted this 3,227 score as beating its competitors, when Qualcomm's final shipping SoC will either be barely faster or may well lose:

Maybe only matching M2 Max, actually.

Maybe actually quite a bit slower than a heavily boosted i9 Raptor Lake

Of course, the headline (much lower power at equal perf) is great, but that bottom chart is becoming more suspicious, particularly when, as the launch date moves closer, Qualcomm's internal benchmark scores keep getting lowered.

It’s the M3 Max. It has 4 more cores and is on N3.

Apple M3 Pro & M3 Max analysis - Apple has significantly upgraded its Max CPU

Notebookcheck performance and efficiency analysis of Apple's new SoCs, the M3 Pro and the M3 Max, compared with AMD, Intel and Qualcomm.

www.notebookcheck.net

So X Elite consumes more power than M3 Max in CB2024, while also delivering less performance.

Making claims when the product is in the lab is easy. It gets increasingly complicated as the launch window narrows and the chips must match the specs in the wild. This is the reason people begin raising eyebrows as QC continues its barrage of marketing slides that we're supposed to take at face value.

Of course, the headline (much lower power at equal perf) is great, but that bottom chart is becoming more suspicious, particularly when, as the launch date moves closer, Qualcomm's internal benchmark scores keep getting lowered.

Apple does pretty well in CB2024;

Also I would expect QC to do more poorly on CB24 power curves vs Intel and AMD than GB6, it makes sense. Cinebench is designed perfectly to benefit from HT.

Isn't GB5 deprecated?

GB5 MT would be more interesting.

If you would read, I addressed that. They have a CPU on a better node and with superior perf/W still, and even Qualcomm beats AMD on a per-core basis by a ton, so that’s not surprising. However HT still gives them a significant boost on this app. Not hard.

Apple does pretty well in CB2024;

The M3 Max (12P+4E) rivals the Ryzen 9 7945HX (16C/32T). Both do about 1600 points.

Yeah, but the way they do MT in GB5 is different.

Isn't GB5 deprecated?