- May 14, 2012

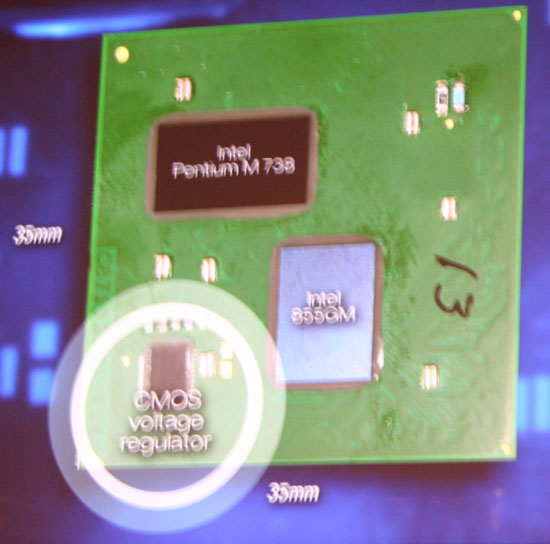

The advantages of integrating the memory controller, expansion interfaces and even the GPU are fairly self-evident. But I'm a bit puzzled as to Intel's thinking on the voltage regulators. I can see some obvious drawbacks but no obvious advantages.

Drawback #1: Converting voltages is never 100% efficient, so doing it on-chip means extra heat for the CPU to dissipate.

Drawback #2: Higher cost for Intel due to development effort (fixed cost) and die size (variable cost).

Drawback #3: Potentially greater risk of power-quality problems, since there is no longer an intermediate step between the power supply and the CPU.
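To put a rough number on drawback #1, here is a quick back-of-the-envelope sketch. The function name, the 90 W load and the 90% efficiency are all figures I made up for illustration; they are not Intel specs.

```python
def regulator_loss_watts(load_watts: float, efficiency: float) -> float:
    """Heat dissipated by a regulator that delivers load_watts at the given
    conversion efficiency (a fraction in the range (0, 1])."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    # Power drawn from the supply side to deliver load_watts to the load.
    input_watts = load_watts / efficiency
    # Whatever does not reach the load is lost as heat in the regulator.
    return input_watts - load_watts


# Hypothetical numbers for illustration only.
loss = regulator_loss_watts(load_watts=90.0, efficiency=0.90)
print(f"Roughly {loss:.0f} W lost as heat in the regulator")
```

The same loss exists with a motherboard regulator; the difference is only where the heat ends up, on the board or inside the CPU package.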

All of these are manageable, of course, but what's the upside? It's great news for motherboard manufacturers, but I don't see how it helps Intel much.

What do you think?
