- Mar 27, 2009

Both these CPUs go on sale for around the same money at Newegg:

$100 (FX-8300), normal price $115 with free shipping

$105 (Core i3 6100), normal price I believe is $115 with free shipping.

The FX-8300 also comes with a Deus Ex: Mankind Divided game code.

The platform cost of AM3+ with a 970 chipset and USB 3.0 added tends to be slightly higher than that of a low-end LGA 1151 board. There is also only one mATX board using the 970 chipset, in contrast to the many LGA 1151 boards available in mATX. Furthermore, idle (and total) power consumption on AM3+ is higher than on LGA 1151.

In addition, LGA 1151 offers a greater level of CPU upgradability.

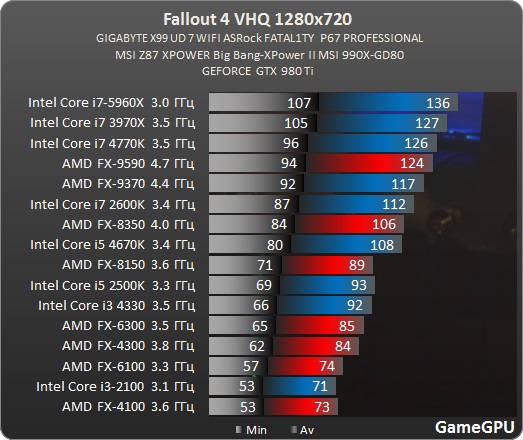

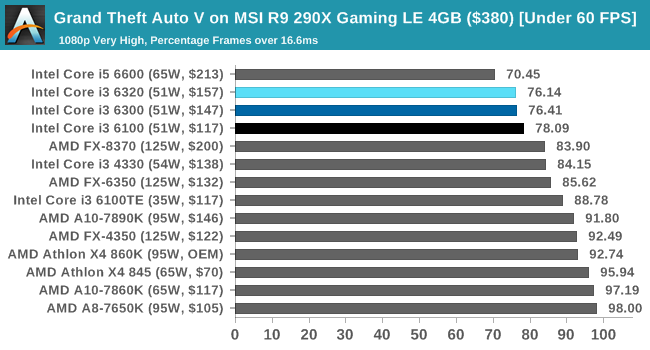

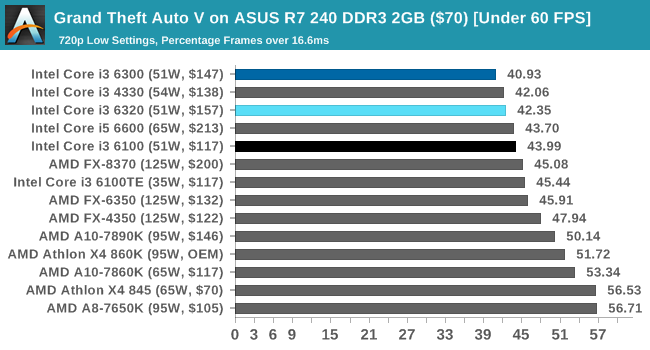

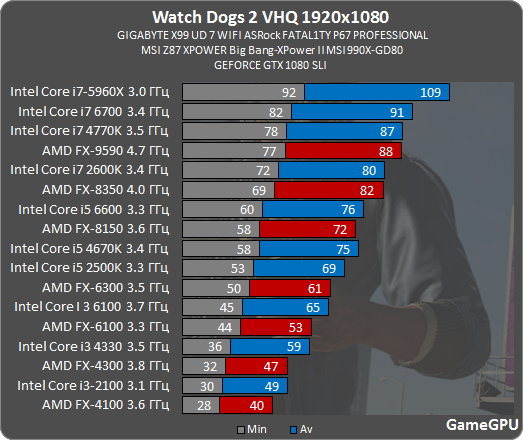

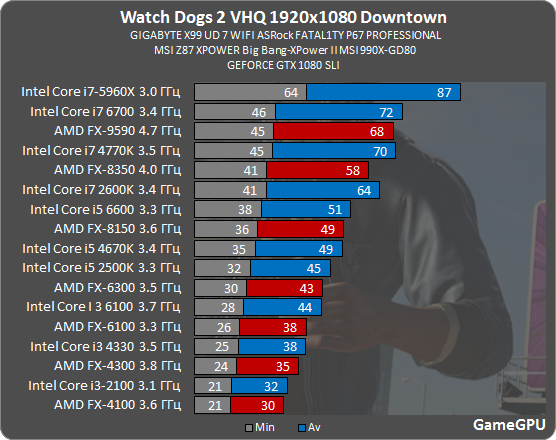

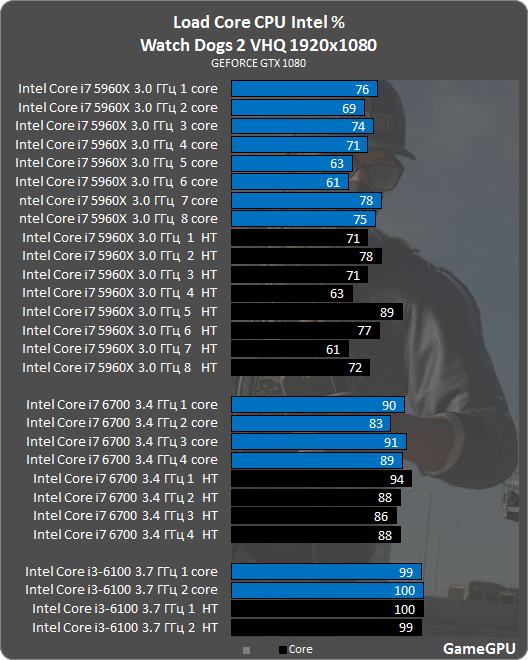

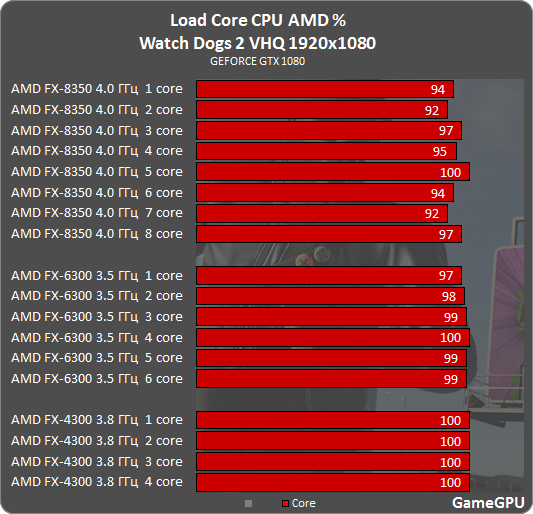

However, CPU upgradability aside, I think the FX-8300 with a 970 chipset motherboard could be a good CPU and platform for repurposing a midrange or high-end 28nm dGPU, based on AtenRa's testing here. (A stock FX-8300 is about equal to the stock FX-8150 used in AtenRa's testing in multi-threaded performance, and a bit faster in single-threaded performance due to its slightly higher IPC.)

In fact, with a 28nm Nvidia card under DX11, I think an FX-8300 would do even better. This is because Nvidia's DX11 graphics driver is multi-threaded (compared with AMD's single-threaded DX11 graphics driver), which reduces some of the single-thread demand on the CPU. (DX12 also fixes the driver issue.)

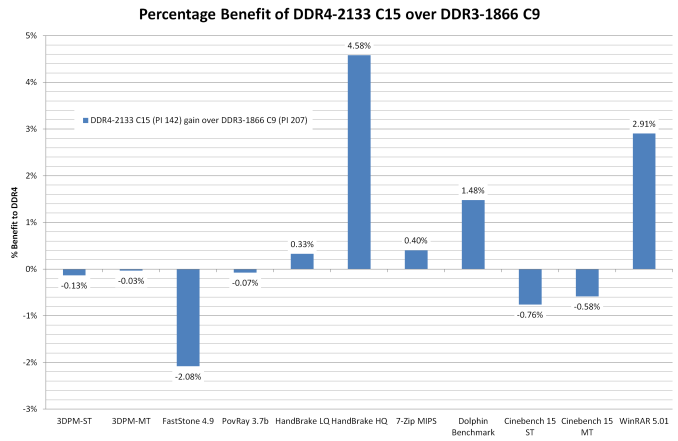

SIDE NOTE: AtenRa did use DDR3 1866 for this Core i3 6300 testing, but I don't think the performance difference compared to DDR4 2133 would be that large, since DDR3 1866 has tighter timings than DDR4 running at 1866. Also note that I am referring to a Core i3 6100 for the purposes of this thread, not the 100 MHz faster Core i3 6300.
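To put rough numbers on the timing point: first-word (CAS) latency in nanoseconds is CL × 2000 / data rate in MT/s, and peak bandwidth per 64-bit channel is data rate × 8 bytes. The sketch below is only an illustration; the CL values are assumed typical figures for each kit type, not the modules AtenRa actually used.

```python
def first_word_latency_ns(cas_latency: int, data_rate_mts: int) -> float:
    """Approximate CAS (first-word) latency in nanoseconds.

    DDR moves data twice per I/O clock, so the clock in MHz is data_rate / 2
    and one clock cycle lasts 1000 / (data_rate / 2) = 2000 / data_rate ns.
    """
    return cas_latency * 2000.0 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts: int, channels: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s (64-bit = 8-byte wide channels)."""
    return data_rate_mts * 8 * channels / 1000.0


# Illustrative timings only (assumed, not taken from AtenRa's test setup).
kits = {
    "DDR3-1866 CL9": (9, 1866),
    "DDR4-1866 CL13": (13, 1866),
    "DDR4-2133 CL15": (15, 2133),
}

for name, (cl, rate) in kits.items():
    latency = first_word_latency_ns(cl, rate)
    bandwidth = peak_bandwidth_gbs(rate, channels=2)  # dual channel
    print(f"{name}: {latency:.2f} ns, {bandwidth:.1f} GB/s dual channel")
```

Under these assumed timings, DDR3 1866 comes out with lower latency (about 9.6 ns vs. about 14.1 ns), while DDR4 2133 has roughly 14% more peak bandwidth, which is why the two can end up closer in practice than the raw speed numbers suggest.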