- Mar 3, 2017

- 1,779

- 6,798

- 136

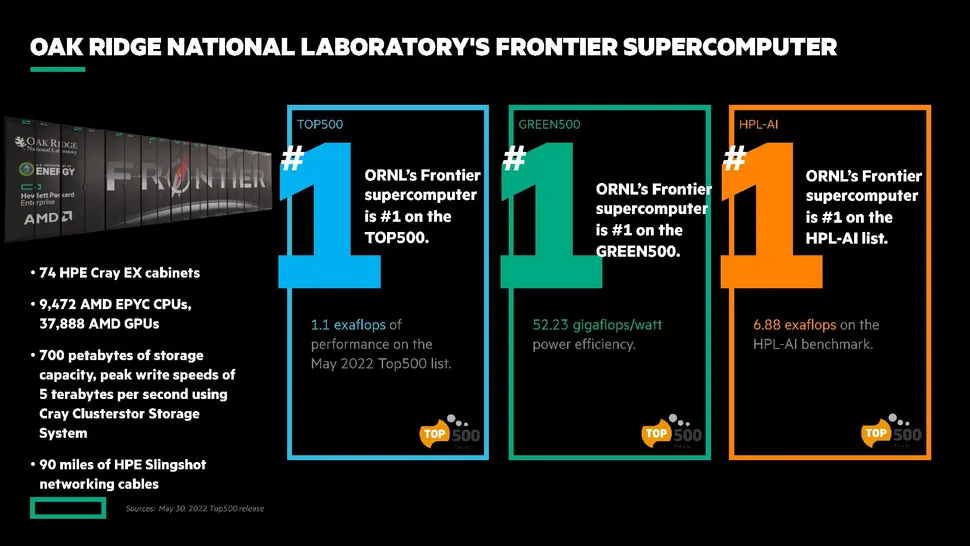

EPYC win for AMD for two exaflops exascale supercomputer.

EPYC Genoa + Future Radeon Instinct

Zen4 + DDR5 + Radeon Instinct

Big win for ROCm and users of open source SW.

www.tomshardware.com

www.tomshardware.com

I hope this gives a big boost to ROCm.

As a Linux user I am really looking forward to ROCm support upstreamed for most frameworks and the community can benefit without having to reverse engineer their ass out to find bugs and root causes if something is not working in the SW.

(Intel is also a big OSS contributor so I hope they win some too)

Update 1

AT Link

www.anandtech.com

www.anandtech.com

I hope it means what it sounds.

That we can write code which treat memory across the GPU as how we treat memory shared with another thread/core on the CPU? Sounds like a dream come true.

Best bit for me

www.hpcwire.com

www.hpcwire.com

Update 2

It is time to start a new Zen 4 thread

EPYC Genoa + Future Radeon Instinct

Zen4 + DDR5 + Radeon Instinct

Big win for ROCm and users of open source SW.

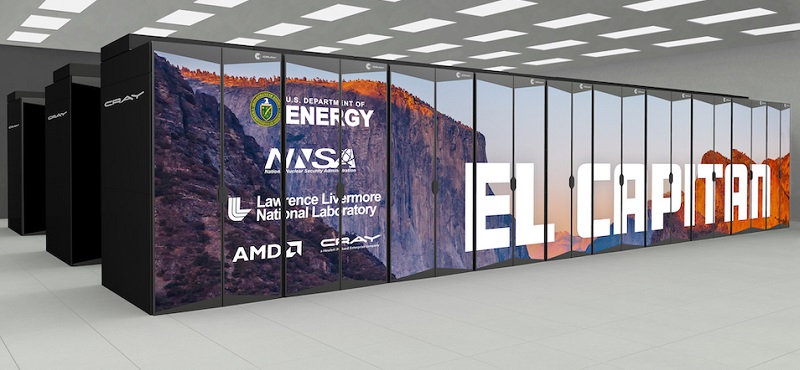

AMD Wins El Capitan: EPYC Genoa and Radeon Instinct to Power Two-Exaflop DOE Supercomputer

AMD hits another home run

I hope this gives a big boost to ROCm.

As a Linux user I am really looking forward to ROCm support upstreamed for most frameworks and the community can benefit without having to reverse engineer their ass out to find bugs and root causes if something is not working in the SW.

(Intel is also a big OSS contributor so I hope they win some too)

Update 1

AT Link

AnandTech Forums: Technology, Hardware, Software, and Deals

Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

However the most interesting claim is that these IF 3.0 device nodes will support unified memory across the CPU and GPU, which is something AMD doesn’t offer today.

I hope it means what it sounds.

That we can write code which treat memory across the GPU as how we treat memory shared with another thread/core on the CPU? Sounds like a dream come true.

Best bit for me

Scott said, “As part of this procurement, the Department of Energy has provided additional funds beyond the purchase of the machine to fund non-recurring engineering efforts and one major piece of that is to work closely with AMD on enhancing the programming environment for their new CPU-GPU architecture.” Work is ongoing by all three partners to take the critical applications and workloads forward and optimize them to get the best performance in the machine when El Capitan is delivered.

Exascale Watch: El Capitan Will Use AMD CPUs & GPUs to Reach 2 Exaflops - HPCwire

HPE and its collaborators reported today that El Capitan, the forthcoming exascale supercomputer to be sited at Lawrence Livermore National Laboratory and serve the National Nuclear Security Administration (NNSA), will use AMD’s next-gen ‘Genoa’ Epyc CPUs and Radeon Instinct GPUs and deliver 2...

Update 2

It is time to start a new Zen 4 thread

Last edited: