About 10 years ago I was pretty good at 3D modeling, but what killed it for me was the time it took to render a scene. I would set the resolution to something pathetic like 320x240, give it a day, and maybe end up with a 10-second clip.

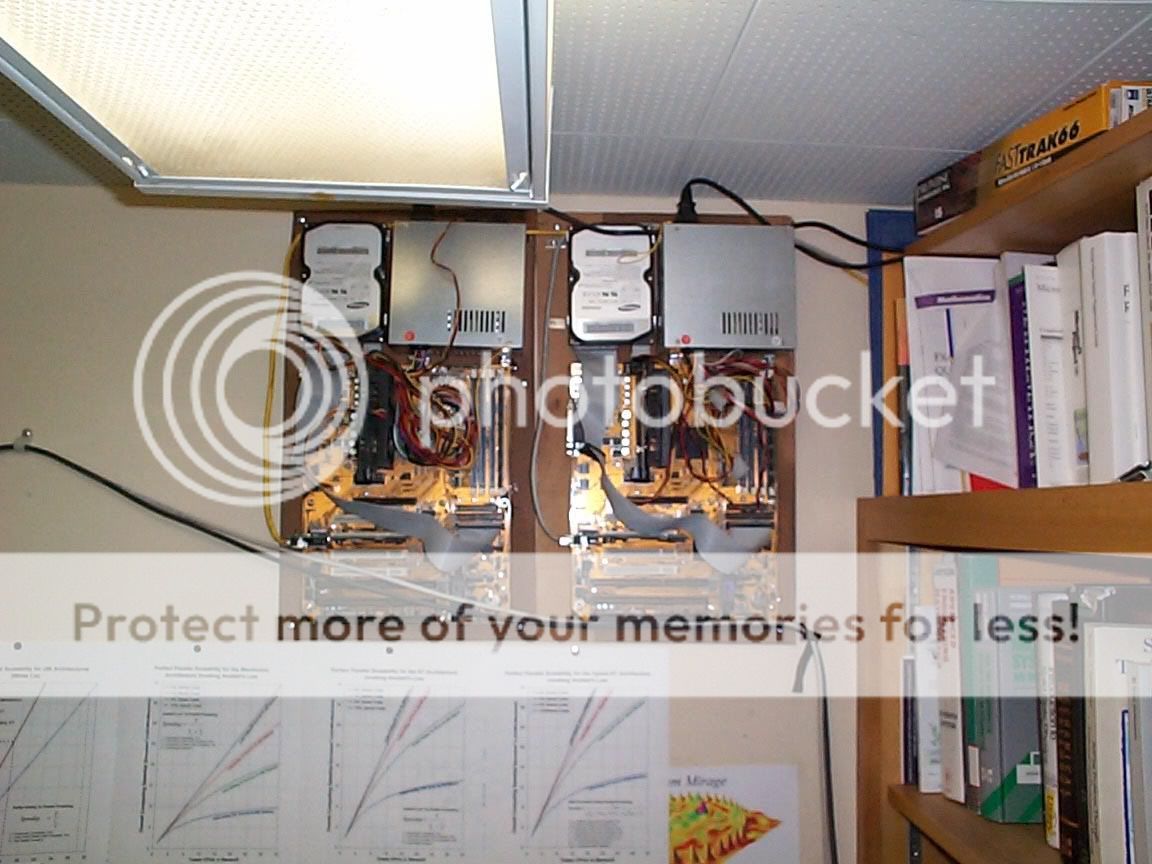

I was browsing through the hot deals and thought of something. You can purchase an AMD X4 840 (3.1 GHz stock) with a motherboard for $99. I have the 630 version of this chip and can hit 3.5 GHz without even trying. Throw in $50 for cheap RAM, a used HD and a used or cheap power supply and you have a functioning quad core for $150. No need for a case for the render farm; a 4x8 sheet of plywood will do! Now, buy 12 or so of these and for under $2000 you literally have 48 cores @ 3.5 GHz for network rendering or any other number crunching you may desire. That's 168 GHz combined (48 cores x 3.5 GHz).
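For anyone who wants to play with the numbers, here is a rough Python sketch of the same back-of-the-envelope math. The function and parameter names are made up for illustration, and the prices and clock speed are just the figures quoted above, not current pricing.

```python
def farm_totals(nodes, cost_per_node=150, cores_per_node=4, clock_ghz=3.5):
    """Add up cost, core count, and combined clock for a pile of identical boxes.

    Defaults are the figures from the post: $150 per quad-core node,
    overclocked to 3.5 GHz (assumed to hold for every node).
    """
    total_cores = nodes * cores_per_node
    return {
        "cost_usd": nodes * cost_per_node,
        "cores": total_cores,
        # Summed clock speed is only a rough yardstick, not a benchmark.
        "combined_ghz": total_cores * clock_ghz,
    }


if __name__ == "__main__":
    totals = farm_totals(12)
    print(totals)
```

Changing `nodes` or `cost_per_node` shows how far a given budget stretches. Keep in mind that adding up GHz ignores network overhead and how well the renderer splits the work, so treat the combined figure as a ballpark.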

I think I sank about $1500 into my PC 10 years ago (I think it was a 1.2 GHz Athlon), and what you can build for that kind of money now is just staggering, especially for rendering 3D.

That is all. I will now go to bed!
