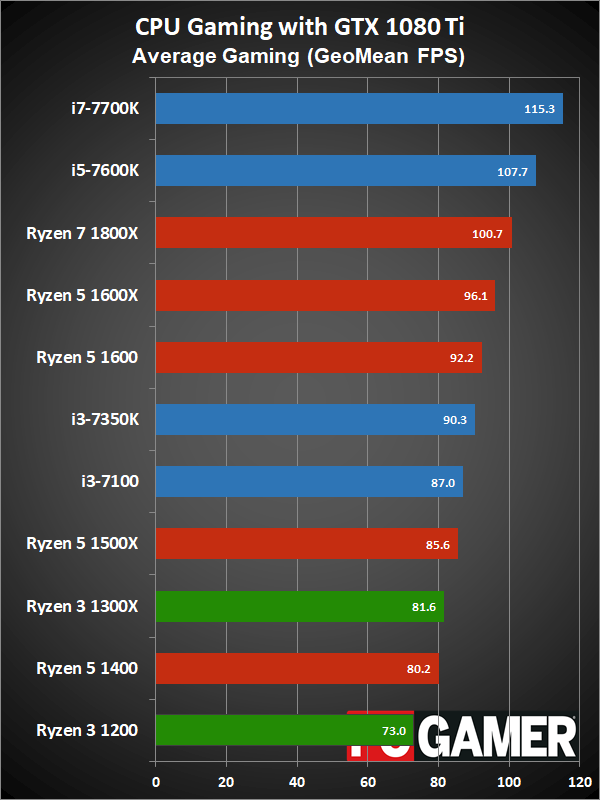

PC Gamer's setup is pretty bad, and shows how little they know about the AM4 platform.

When you use CL16 RAM with the awful "Auto" XMP settings on the Gigabyte Gaming 5 board that Jarred used, you get lousy performance, and maybe even system instability. Hell, Gigabyte couldn't even get Samsung B-die working consistently and stably via Auto-XMP on their flagship K7 board until a few months ago. I had to run manual memory timings on my K7, or else put up with Auto-XMP's terrible sub-timings and its occasional glitch down to 2133 memory speeds. Here's a quote from Jarred on his testing:

If he's crashing, he's doing something wrong or he's got bad hardware. I have five Ryzen rigs that crunch data @ 100% load - some since March. Zero instability, and zero invalid work (work is validated against other machines).

Techspot gets "good" numbers for Ryzen for two reasons: 1) they are using an X370 Taichi + DDR4-3200 CL14 (Samsung B-die) combination, which has worked via Auto-XMP settings since launch, and 2) the 30-game test suite averages out differences, despite the large outliers (because of the regular distribution of results).

Anyone who isn't incompetent can get exactly the results Techspot does with a good board + 3200 CL14 memory.
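To put rough numbers on why the CL14 vs. CL16 difference matters, here is a minimal sketch of the standard first-word latency arithmetic (CAS cycles times the clock period). The helper name `cas_latency_ns` is made up for illustration. The post doesn't say what speed the CL16 kit ran at, so DDR4-3200 is an assumption, and CL15 for the 2133 fallback is likewise an assumed typical timing, not something from PC Gamer's setup. It also only covers CAS latency, not the sub-timings.

```python
def cas_latency_ns(cl: int, data_rate_mts: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR transfers data twice per clock, so one clock cycle lasts
    2000 / data_rate_mts nanoseconds.
    """
    return cl * 2000 / data_rate_mts


# Kit speeds and timings marked "assumed" are illustrative, not from the post.
kits = [
    ("DDR4-3200 CL14", 14, 3200),
    ("DDR4-3200 CL16 (speed assumed)", 16, 3200),
    ("DDR4-2133 CL15 (assumed fallback timing)", 15, 2133),
]

for label, cl, rate in kits:
    print(f"{label}: {cas_latency_ns(cl, rate):.2f} ns")
```

Under those assumptions, CL14 at 3200 works out to 8.75 ns against 10 ns for CL16 at the same speed, and a drop to 2133 is worse again, before sub-timings even enter the picture.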

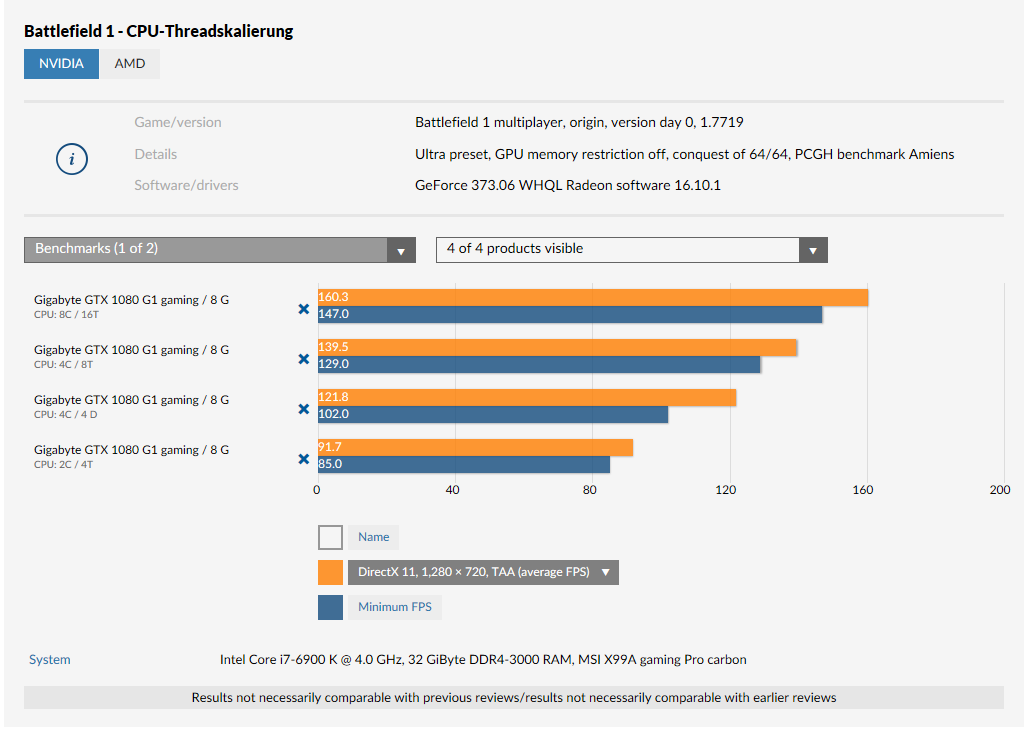

But that's actually only scratching the surface. Once you approach the gains that we enthusiasts get from tuning our memory timings...

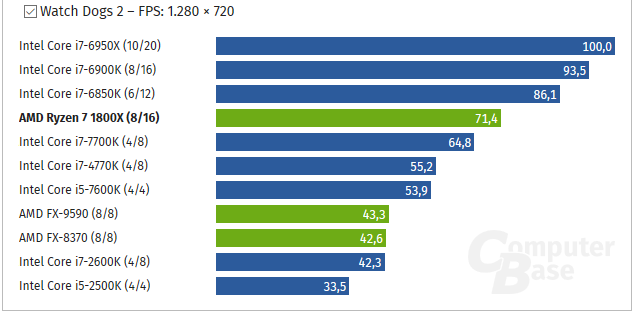

That's right, another double-digit FPS gain just from tuning memory settings from the default XMP 3200 CL14 settings. At 1080p with low-latency DDR4-3466, Ryzen gives up almost nothing to a 7700K, because the 7700K doesn't scale as well with memory as Ryzen does.

I can't wait for Zen 2, which should easily deliver +10% gains just by addressing low-hanging fruit.

P.S. I've got a week off in 2 weeks' time. I'll run benchmarks on my ASRock Taichi X370 w/1800X + Samsung B-die combo @ 4GHz with DDR4-3466 LL and a GTX 1080 Ti. I bet you I will demolish PC Gamer's results. Anyone willing to bet otherwise? Loser gets to eat cat food. Any takers?