There's a popular myth that extra threads/cores will result in significant performance gains for gaming at 1080p.

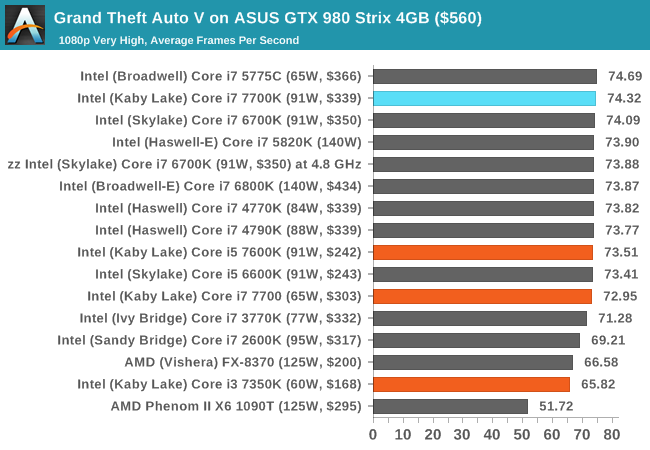

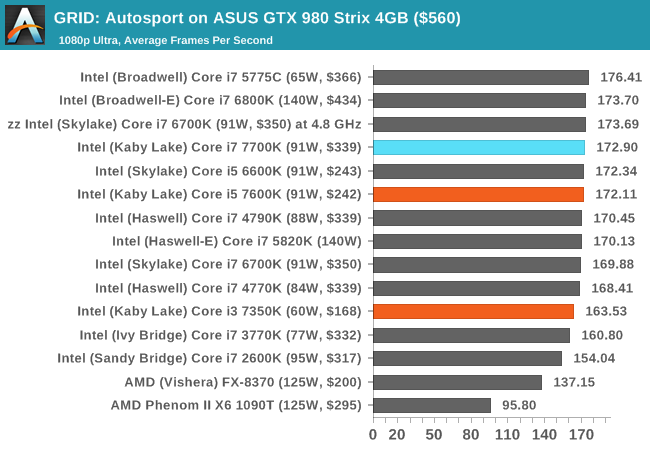

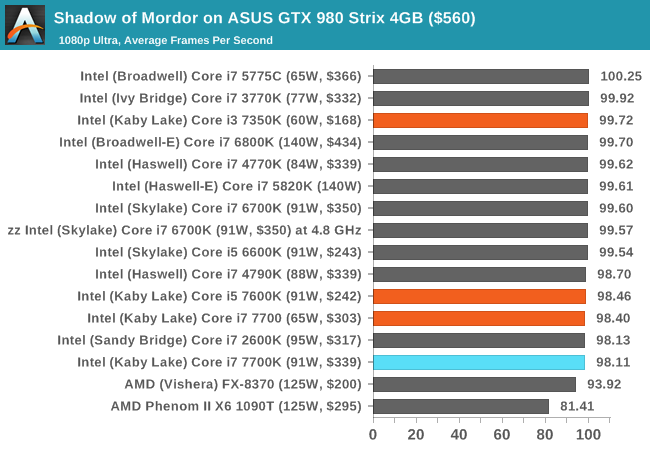

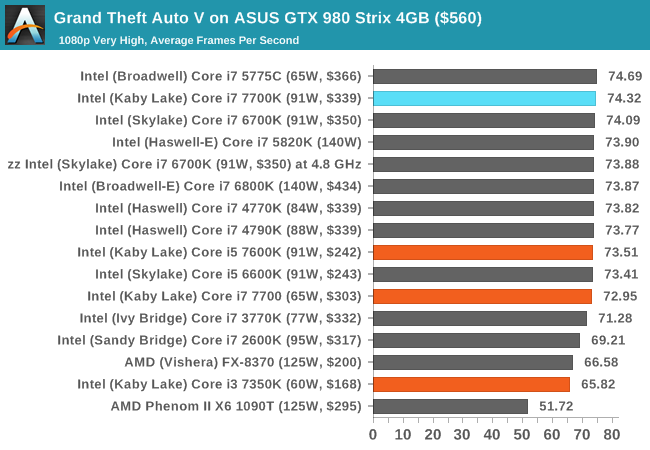

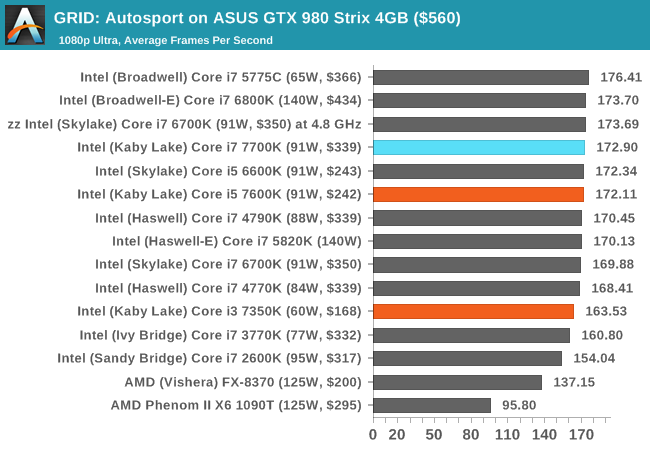

I present to you exhibit A:

Here we see, from none other than our own Ian Cutress, that across three games benchmarked at 1080p, four additional threads yield a performance gain of less than 2%.

Based on this data, it's ridiculous to recommend extra threads/cores for the average PC gamer. We, as a community, need to stop propagating this myth.

I look forward to your vigorous and data-based rebuttals.

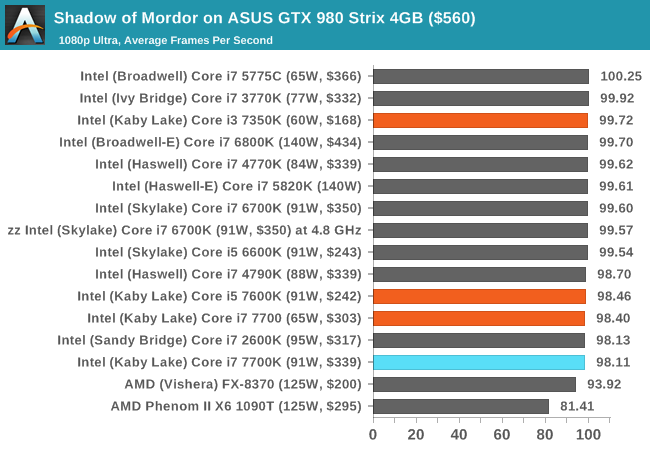
