First review of retail Intel Core i9-13900K “Raptor Lake” CPU emerges

Unlimited Core i9-13900K tested

Over at Bilibili, one can find a comprehensive review of a retail version of the Core i9-13900K processor, the flagship Raptor Lake desktop CPU that is supposedly coming out next month. The review is a collaboration between ECSM_Official and OneRaichu, and it is...

videocardz.com

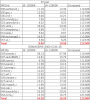

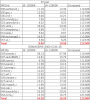

In Cinebench R23, the Core i9-13900K's Performance cores (Raptor Cove) are 13% faster than those of the 12900K (Golden Cove). Interestingly, the Efficient cores are also 14% faster, even though both chips use the same E-core architecture (Gracemont). Note that Raptor Lake raises not only clock frequencies but also the size of the L3 cache.

ECSM confirms that with power limits removed (350 W), the i9-13900K can break 40K points in Cinebench R23, which is 47% higher than the 12900K with uncapped power.
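As a quick sanity check, the two figures in that sentence imply a rough score for the uncapped 12900K. The sketch below assumes "40K" means exactly 40,000 points; the helper name `implied_baseline` is hypothetical.

```python
# Back-of-the-envelope check of the Cinebench R23 claim above.
# Assumption: "can break 40K" is treated as exactly 40,000 points, so the
# implied 12900K figure is an estimate, not a measured result.
def implied_baseline(new_score: float, uplift_pct: float) -> float:
    """Return the baseline score implied by new_score being uplift_pct % higher."""
    return new_score / (1 + uplift_pct / 100)


baseline = implied_baseline(40_000, 47)
print(f"Implied unlimited 12900K score: ~{baseline:,.0f} points")
```

Because the 13900K result is a lower bound, the implied 12900K score of roughly 27.2K points is approximate as well.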

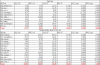

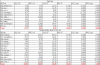

The reviewer concluded that the i9-13900K delivers 10% higher framerates than the 12900K in CPU-bound games (CSGO, Ashes of the Singularity), and also improves frame times for the slowest 0.1% of frames. Below is CSGO performance for the unlimited i9-13900K and i9-12900K running with DDR5 and DDR4 memory:

ECSM plans to publish more test results later: first with the default PL2 limit, and then on a Z790 motherboard. The conclusion is that the i9-13900K has 12% better single-threaded performance, while multi-threaded performance is ‘greatly improved to compete with AMD Zen 4’.
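On the 0.1% frame-time improvement mentioned above: the post does not say how ECSM computed its 0.1% lows. One common convention, assumed here, averages the slowest 0.1% of frame times and converts the result to FPS. The function names are hypothetical, and the frame times in the example are synthetic, not data from the review.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Average FPS = frames rendered / total time (frame times in milliseconds)."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def low_fps(frame_times_ms: list[float], pct: float = 0.1) -> float:
    """FPS over the slowest `pct` percent of frames (always at least one frame)."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = max(1, round(len(frame_times_ms) * pct / 100))
    slowest = sorted(frame_times_ms, reverse=True)[:n]
    return 1000.0 * n / sum(slowest)


# Synthetic trace: 999 frames at 2 ms plus a single 10 ms stutter.
trace = [2.0] * 999 + [10.0]
print(f"average: {average_fps(trace):.1f} FPS, 0.1% low: {low_fps(trace):.1f} FPS")
```

A single 10 ms hitch barely moves the average but dominates the 0.1% low, which is why reviewers report the two separately.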

*edit*

From the linked Bilibili post:

Testing platform:

- CPU1: Intel Core i9 13900K

- CPU2: Intel Core i9 12900KF

- DRAM: DDR5-6000 CL30-38-38-76, DDR4-3600 CL17-19-19-39, tREFI=262143, other parameters: Auto.

- Motherboard: Z690 Taichi Razer Edition and Z790****

- BIOS version: 12.01 and ****

- GPU: AMD Radeon RX 6900 XTXH OC 2700MHz

- Cooling: NZXT Kraken X73
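The two memory configurations can be compared in absolute terms using the standard conversion of CAS latency to nanoseconds: DDR transfers data twice per clock, so one clock period is 2000 / (data rate in MT/s) ns. This is a minimal sketch covering only CL; it ignores tRCD, tRP, tREFI and the other timings, so it is a rough comparison rather than a measure of full memory latency. The helper name is hypothetical.

```python
def cas_latency_ns(cl: int, data_rate_mts: int) -> float:
    """First-word CAS latency in ns: CL cycles x clock period (2000 / MT/s)."""
    return cl * 2000 / data_rate_mts


# Primary CL values and data rates from the test platform list.
configs = {"DDR5-6000 CL30": (30, 6000), "DDR4-3600 CL17": (17, 3600)}
for name, (cl, rate) in configs.items():
    print(f"{name}: {cas_latency_ns(cl, rate):.2f} ns")
```

By this measure the two kits are close (about 10.0 ns vs 9.4 ns), so the DDR5 setup mainly gains bandwidth rather than CAS latency.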


Two extra stops on the ring bus mean higher memory latency, by the looks of things. L3 bandwidth is much improved, though.
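Why extra stops tend to add latency can be illustrated with an idealized model: on a bidirectional ring, the average number of hops between two stops grows with the number of stops. This sketch assumes uniform traffic between all stops and takes the shorter direction around the ring; the stop counts in the example (10 vs 12) are hypothetical, since the post does not give the actual counts.

```python
def average_hops(stops: int) -> float:
    """Mean shortest-path hop count between distinct stops on a bidirectional ring."""
    if stops < 2:
        raise ValueError("a ring needs at least two stops")
    total = 0
    pairs = 0
    for a in range(stops):
        for b in range(stops):
            if a != b:
                d = abs(a - b)
                total += min(d, stops - d)  # traffic can travel either way round
                pairs += 1
    return total / pairs


# Hypothetical stop counts, chosen only to show the effect of two extra stops.
for n in (10, 12):
    print(f"{n} stops: {average_hops(n):.2f} hops on average")
```

Real ring latency also depends on the ring clock and on where each agent sits, so this only captures the hop-count part of the comment.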

Compared with the Intel Core i9-12900K, the redesigned ring bus no longer drops its frequency from 4700 MHz to 3600 MHz when the E-cores are under load. Instead, the ring clock now mainly drops from 5000 MHz to 4600 MHz, so ring bus latency is no longer a burden on core access latency. Combined with possible changes to the ring bus topology, this produces an interesting change in the core-to-core latency of the Core i9-13900K.
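The effect of the higher loaded ring clock can be put in numbers by converting each frequency into a cycle time. This sketch assumes ring latency scales with the number of ring cycles a request spends, which is a simplification; the dictionary and helper names are hypothetical.

```python
# Ring-bus clocks reported in the post when the E-cores are under load.
RING_MHZ = {"i9-12900K": 3600, "i9-13900K": 4600}


def cycle_time_ns(freq_mhz: float) -> float:
    """Duration of one clock cycle in nanoseconds (1 / f)."""
    return 1000.0 / freq_mhz


old = cycle_time_ns(RING_MHZ["i9-12900K"])
new = cycle_time_ns(RING_MHZ["i9-13900K"])
print(f"12900K ring cycle: {old:.3f} ns, 13900K ring cycle: {new:.3f} ns")
print(f"Each ring cycle is {100 * (1 - new / old):.1f}% shorter")
```

Under that assumption, any fixed number of ring cycles completes roughly 22% faster on the 13900K while the E-cores are busy.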

Specifically, communication between P-cores and E-cores no longer carries an obvious latency penalty, and latency between almost all core pairs stays at a consistent level of about 30-33 ns. The exception is E-cores within the same cluster, which still pay some latency penalty due to bus snooping, although intra-cluster E-core latency has also improved slightly.

IPC test:

Building on the performance tests, we ran IPC tests with SPEC CPU 2017 1.1.8 and Geekbench 5.4.4, both at the default frequency and at a fixed 3.6 GHz, for reference only.

- SPEC CPU 2017:

  - OS: WSL2 (Ubuntu 20.04)

  - Compiler: GCC/gfortran/g++ 10.3.0

  - Test parameters: -O3. The test files and cfg are shared on Baidu Netdisk at https://pan.baidu.com/s/1G0yD_FC3yXOJl3tkkyzjSg (extraction code: pa37); feel free to use them for your own testing.
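SPEC CPU 2017 scores each benchmark as the ratio of a reference machine's run time to the measured run time, and summarizes a suite with the geometric mean of those ratios. A minimal sketch of that aggregation follows; the helper names are hypothetical and not part of the SPEC toolset, and no numbers from this review are used.

```python
import math


def spec_ratio(reference_s: float, measured_s: float) -> float:
    """Per-benchmark ratio: reference-machine run time / measured run time."""
    return reference_s / measured_s


def geometric_mean(values: list[float]) -> float:
    """Geometric mean computed via logarithms to avoid overflow on long suites."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


def suite_uplift(new_ratios: list[float], old_ratios: list[float]) -> float:
    """Relative suite score; equals the geometric mean of per-benchmark speedups."""
    return geometric_mean(new_ratios) / geometric_mean(old_ratios)
```

The geometric mean keeps one outlier benchmark from dominating the suite score, and it makes the 13900K-over-12900K uplift independent of which machine's results are used as the baseline.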

P-core results:

We first tested single-thread performance at the default frequency, where the improvement is about 12.5%.

Next, we ran an iso-frequency test at 3.6 GHz. At the same clock speed, the Raptor Cove (RPC) and Golden Cove (GLC) cores perform essentially the same, although RPC has relatively lower memory access latency thanks to its larger L2 cache.
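The two P-core results can be combined with a simple model in which performance = IPC x effective clock frequency: if the iso-frequency test isolates the IPC ratio, the default-frequency gain that remains must come from clocks. This is a sketch under that assumption; treating "basically the same" as an IPC ratio of exactly 1.0 is also an assumption, and memory effects (such as RPC's lower memory latency) make the split approximate. The function name is hypothetical.

```python
def implied_clock_ratio(perf_ratio_default: float, perf_ratio_iso_freq: float) -> float:
    """Clock contribution to a speedup, assuming perf = IPC x effective frequency.

    perf_ratio_iso_freq is taken as the IPC ratio measured at equal clocks.
    """
    return perf_ratio_default / perf_ratio_iso_freq


# From the post: ~12.5% faster at default clocks, "basically the same" at 3.6 GHz.
ratio = implied_clock_ratio(1.125, 1.0)
print(f"Implied effective clock ratio: {ratio:.3f}")
```

In other words, under this model nearly all of the ~12.5% single-thread gain would be attributable to higher effective clocks rather than IPC.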