- Mar 21, 2004

I recently came across an article measuring the usefulness of NTFS compression on SSDs. My own SSD has a SandForce controller, which already compresses data on its own, so NTFS compression will not help there. Would it be useful on my HDD, though?

I remember using it back in the day, but I stopped because of poor performance. I was curious whether performance is better on current hardware, and since I found no results online, I did my own benchmarking.

System:

- Windows 7 x64
- i5-3570K
- 16GB DDR3-1600
- XFX HD6950
- Gigabyte GA-Z77MX-D3H
- 240GB Intel 520 SSD
- 640GB WD two-platter HDD

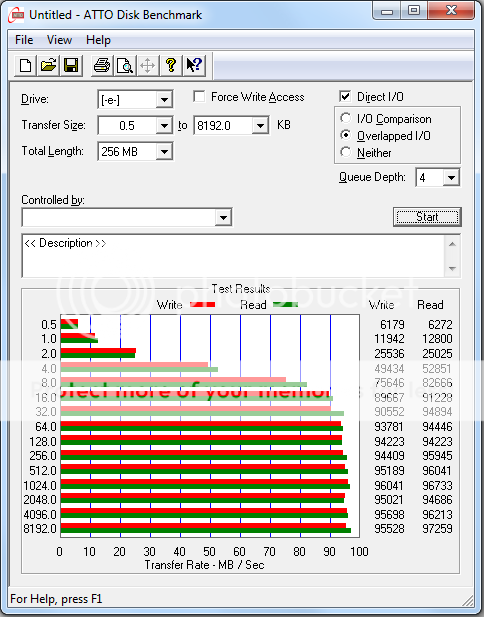

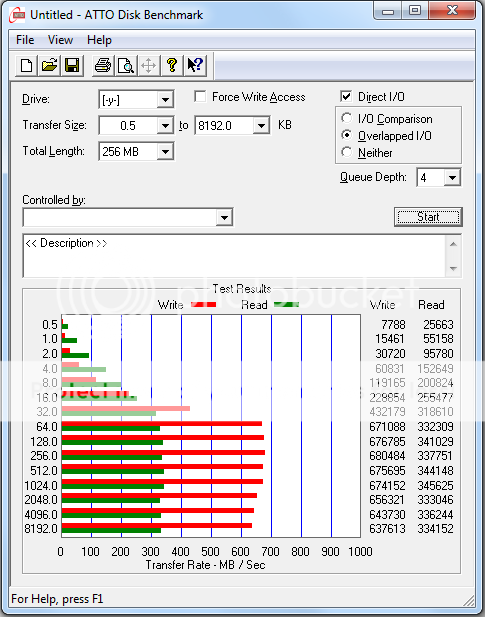

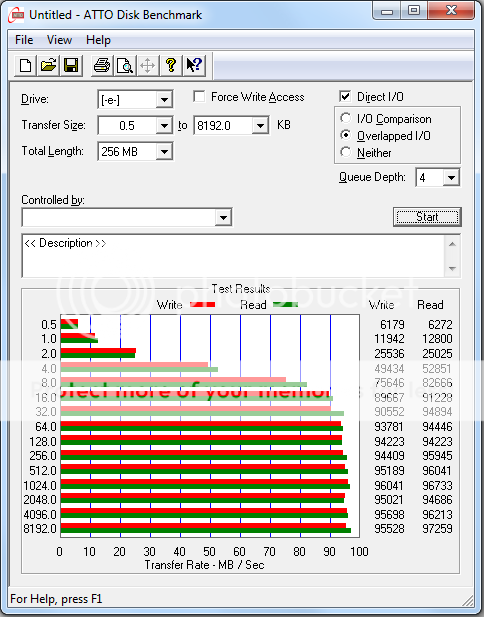

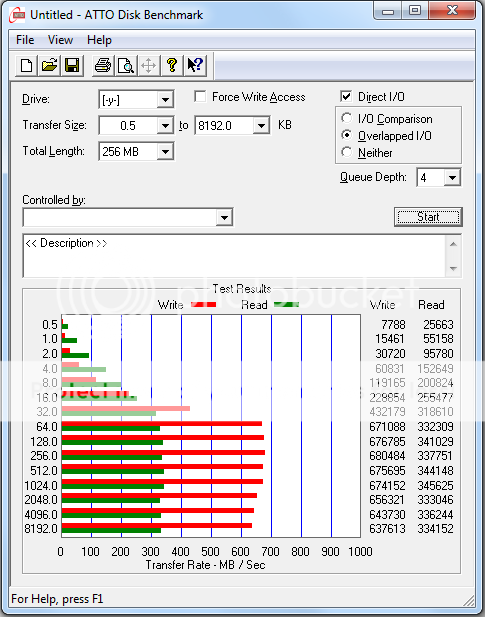

At first I ran the ATTO benchmark, which showed a MASSIVE improvement across the board with compression turned on.

I then tried AS SSD, which uses incompressible data. Unfortunately, rather than measuring how much data it can push in a minute, it writes a set amount of data (sized with SSDs in mind) and runs for as long as that takes. As a result, the test stalled out (even without compression).
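Why ATTO and AS SSD disagree so sharply can be illustrated with any general-purpose compressor. The sketch below uses Python's zlib purely as a stand-in (NTFS uses its own LZNT1 algorithm, so the exact ratios will differ): a repetitive buffer shrinks dramatically, while random bytes, which is what AS SSD writes and roughly what an already-compressed x264 file looks like, do not shrink at all. The buffer contents and size are illustrative assumptions, not what either benchmark actually writes.

```python
import os
import zlib

SIZE = 1 << 20  # 1 MiB test buffers (arbitrary size chosen for illustration)


def compression_ratio(data: bytes) -> float:
    """Compressed size / original size, using zlib level 1 as a stand-in for LZNT1."""
    return len(zlib.compress(data, 1)) / len(data)


# Highly repetitive data: an assumed example of "compressible" benchmark data.
compressible = b"\x00" * SIZE
# Random bytes: behaves like AS SSD's incompressible data or an x264 video stream.
incompressible = os.urandom(SIZE)

if __name__ == "__main__":
    print(f"compressible:   {compression_ratio(compressible):.4f}")
    print(f"incompressible: {compression_ratio(incompressible):.4f}")
```

When the ratio is near zero, the disk only has to write a tiny fraction of the data, which is how compression can make a slow HDD look much faster; when it is near 1.0, the CPU does the compression work for no I/O savings.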

Incompressible Data - Windows file copy, potentially useless, needs repeating

I pulled out a stopwatch and simply timed copying a single 5.85GB HD movie file (x264) from my SSD to the HDD.

Compression off: 45 seconds

Compression on: 110 seconds (CPU usage fluctuated between 13 and 19%, mostly around 15-16%; according to Windows 7, only a single core was in use)
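Converting the two stopwatch times into average throughput makes the gap easier to compare against benchmark figures. This is a minimal sketch that assumes the 5.85GB size is in decimal gigabytes (1 GB = 1000 MB); the function name is illustrative.

```python
# Stopwatch results for the single 5.85GB x264 file (SSD -> HDD), taken from the post.
FILE_GB = 5.85  # assumption: decimal gigabytes
SECONDS_OFF = 45
SECONDS_ON = 110


def throughput_mb_s(size_gb: float, seconds: float) -> float:
    """Average copy throughput in MB/s, treating 1 GB as 1000 MB."""
    return size_gb * 1000 / seconds


off = throughput_mb_s(FILE_GB, SECONDS_OFF)
on = throughput_mb_s(FILE_GB, SECONDS_ON)
print(f"compression off: {off:.1f} MB/s")
print(f"compression on:  {on:.1f} MB/s")
print(f"compressed copy took {SECONDS_ON / SECONDS_OFF:.2f}x as long")
```

That works out to roughly 130 MB/s without compression versus about 53 MB/s with it, so on already-compressed video the compressed copy ran at roughly 41% of the uncompressed speed.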

Mixed Data - Windows file copy, useless

I made a folder on the SSD called "NTFSC test" and filled it with 1, 4, or 8 copies of the Windows directory (skipping the one file I was unable to copy out of the Windows folder).
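Building the 1x/4x/8x test sets by hand is tedious. Below is a minimal sketch using Python's `shutil.copytree`, which keeps copying when an individual file fails and raises `shutil.Error` with a list of the failures at the end, matching the "skip the file I couldn't copy" approach. The function name, the `copy_N` folder layout, and the example paths are hypothetical.

```python
import shutil
from pathlib import Path


def make_test_set(source: Path, dest_root: Path, copies: int) -> list:
    """Copy `source` into dest_root/copy_0 ... copy_{copies-1} (hypothetical layout).

    shutil.copytree finishes the rest of the tree when single files fail and then
    raises shutil.Error; we collect those (src, dst, reason) entries instead of
    aborting, and return them so skipped files can be reported.
    """
    skipped = []
    dest_root.mkdir(parents=True, exist_ok=True)
    for i in range(copies):
        try:
            shutil.copytree(source, dest_root / f"copy_{i}")
        except shutil.Error as err:
            skipped.extend(err.args[0])
    return skipped
```

Example usage (paths are hypothetical): `make_test_set(Path(r"C:\Windows"), Path(r"C:\NTFSC test\4x"), 4)`.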

1x Windows (1.41GiB according to Windows Properties; note its unusual rounding):

compression on vs. off: both 5-6 seconds.

8x Windows (11.3GiB according to Windows Properties; note its unusual rounding):

compression off: estimated a very long time, aborted.

4x Windows (5.67GiB according to Windows Properties; note its unusual rounding):

compression off: 121 seconds - 10 seconds for the first 50%, then stalled for a long time before proceeding slowly.

compression on: 103 seconds - 10 seconds for the first 25%, then stalled for a short time and proceeded slowly, but not as slowly as with compression off.

compression off (second run): 71 seconds - is SuperFetch "helping"? Is the read speed an issue? Who knows, but this is definitely not a reliable experiment.
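Dividing the reported 5.67GiB by each time shows how large the run-to-run noise is: the two identical "compression off" runs differ from each other by more than either differs from the compression-on run. A minimal sketch using only the numbers above; the dictionary layout is illustrative.

```python
# "4x Windows" folder size as reported by Windows Properties, and the three timed runs.
SIZE_GIB = 5.67

runs = {
    "off (run 1)": 121,
    "on": 103,
    "off (run 2)": 71,
}

for label, seconds in runs.items():
    mib_s = SIZE_GIB * 1024 / seconds  # average throughput in MiB/s
    print(f"{label:12} {seconds:4d} s  {mib_s:6.1f} MiB/s")

# Relative spread between the two identical "compression off" runs.
spread = (runs["off (run 1)"] - runs["off (run 2)"]) / runs["off (run 2)"]
print(f"off-run spread: {spread:.0%}")
```

The two "off" runs come out at roughly 48 and 82 MiB/s, a spread of about 70%, which is far larger than any effect the compression setting could be credited with here.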

I'll see about the overnight AS SSD results (it generates new random data every time).

Windows file copy seems to be a really REALLY REALLY bad benchmark.

I hope the overnight AS SSD run proves more repeatable.
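One way to make file-copy timings more repeatable than a stopwatch is to script several runs and report the median and range. This is a minimal sketch, assuming Python is available on the test machine; the function names are hypothetical. It forces the written data to disk with `os.fsync` so the Windows write cache does not hide the HDD's real speed, but it does not clear the read cache, so SuperFetch or the file cache may still serve the source from RAM after the first run.

```python
import os
import shutil
import statistics
import time
from pathlib import Path


def time_copy(src: Path, dst_dir: Path, repeats: int = 5) -> list:
    """Copy `src` into existing `dst_dir` `repeats` times; return seconds per run.

    Each run flushes and fsyncs the destination before stopping the clock, and the
    copy is deleted afterwards so every run writes fresh data. The read cache is
    NOT flushed, so later runs may read the source from RAM.
    """
    timings = []
    dst = dst_dir / src.name
    for _ in range(repeats):
        start = time.perf_counter()
        with open(src, "rb") as fin, open(dst, "wb") as fout:
            shutil.copyfileobj(fin, fout, length=1 << 20)  # 1 MiB chunks
            fout.flush()
            os.fsync(fout.fileno())  # make sure the data actually reached the disk
        timings.append(time.perf_counter() - start)
        dst.unlink()
    return timings


def summarize(timings: list) -> str:
    """Median plus min/max, which is more robust to one stalled run than the mean."""
    return (f"median {statistics.median(timings):.2f} s, "
            f"min {min(timings):.2f} s, max {max(timings):.2f} s")
```

Running it once against a normal folder and once against a compressed folder on the same HDD, then comparing the medians, would give a fairer on/off comparison than single stopwatch runs.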

Compressible data - ATTO benchmark:

Compression off:

Compression on:

Testing notes:

I created an empty compressed folder and pasted the file into it for the stopwatch test.

For ATTO, I shared that empty folder and mapped it as a network drive, since ATTO creates its test files in the root directory of the chosen drive.
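Before timing anything, it may be worth confirming that the pasted file actually carries the NTFS compressed attribute. A minimal sketch using `os.stat`, whose `st_file_attributes` field exists only on Windows; the function name is hypothetical, and on other platforms it simply returns `None`.

```python
import os
import stat
from pathlib import Path
from typing import Optional


def is_ntfs_compressed(path: Path) -> Optional[bool]:
    """True/False for the NTFS "compressed" attribute, or None if it can't be read.

    st_file_attributes is only populated by os.stat() on Windows.
    """
    attrs = getattr(os.stat(path), "st_file_attributes", None)
    if attrs is None:
        return None
    return bool(attrs & stat.FILE_ATTRIBUTE_COMPRESSED)
```

Example usage (path is hypothetical): `is_ntfs_compressed(Path(r"D:\compressed\movie.mkv"))`.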
