You are using a 3000-series CPU with DDR3 RAM. As far as I remember, some of the fastest DDR3 at the time was 2133 MHz, and even with that you are still well behind DDR4, which commonly runs at 2400 MHz or faster.

First, you are looking at benchmark tests. These are usually done on clean machines with nothing but Windows installed and a basic set of the latest drivers. So right out of the gate they are going to get slightly better fps than anyone running an everyday machine, which is sometimes bloated with various applications, Windows bloat, maybe fragmented drives, and possibly even a few viruses running in the background.

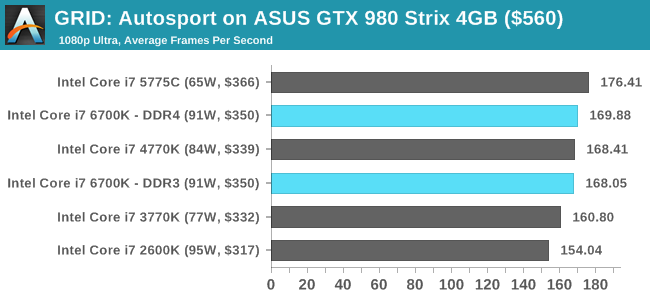

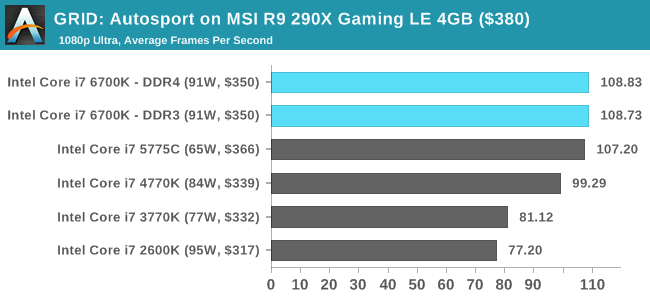

Second, the 3570K is anywhere from 10 to 20% slower than a new processor; even clock for clock it is going to be about 10-15% slower than the newest processors like the 8600K or 8700K. Add another 5 to 10% for your slower RAM, then add the OS bloat factor as well: you are also running an antivirus, background tasks, etc., and that is another 5% or so performance hit.
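To put rough numbers on how those hits stack up, here is a quick Python sketch. It assumes the penalties compound multiplicatively, which is my simplification and not something measured; the function name is made up for illustration, and the percentages are just the ballpark figures above.

```python
def combined_fps_fraction(penalties):
    """Return the fraction of benchmark fps left after applying each penalty in turn.

    Assumption: each penalty scales whatever performance is left (multiplicative),
    rather than being subtracted from the original total.
    """
    fraction = 1.0
    for penalty in penalties:
        fraction *= 1.0 - penalty
    return fraction


# Ballpark figures from the post: CPU, RAM, OS/background bloat.
best_case = combined_fps_fraction([0.10, 0.05, 0.05])
worst_case = combined_fps_fraction([0.20, 0.10, 0.05])

print(f"Expected fps: {worst_case:.0%} to {best_case:.0%} of the benchmark figure")
```

Under those assumptions you would land somewhere around 68% to 81% of the fps shown in the benchmarks, so a gap of roughly 20 to 30% is about what you should expect.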

So there you have it.
