- Nov 4, 1999


So please share your new scores for old & new GPUs & CPUs alike!

[update 7/2023] MW's Separation project ended this month, so only the CPU project is running now.

[update 10/2020] It seems that the 227.5x credit WUs have been sticking around for a while, although there does seem to be some variability in WUs sometimes. So I'm collecting WU times again (see end of thread); just bear in mind the times might be fuzzier than in previous benchmarking runs.

CPUs don't consistently get the 227.5x WUs unless the MT app is disabled.

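Thanks to biodoc for this tip regarding CPU benchmarking :-

It's the separation tasks that were used for bench-marking in the past. To get accurate benchmarks on the CPU separation tasks, I would suggest opening up your milkyway account preferences and uncheck the N-body simulation mt app and also the GPU separation app so you only get the CPU separation tasks. Running tasks from all 3 apps at the same time just adds too many variables.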

I've added a table for running concurrent WUs, as many people do that, & Nvidia cards in particular benefit from it. Note though that times from running concurrent WUs can be more erratic than from running them singly.

Requirements for the benchmark :-

Averaged time of at least 5 WUs for GPUs and an averaged time of at least 10 WUs for CPUs (not cherry picked please!).
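A minimal sketch of the averaging rule, assuming you copy the run times (in seconds) by hand from BOINC's task list. The function name and the sample times are hypothetical; the point is simply to average every completed WU and refuse to report a figure until you have enough of them.

```python
from statistics import mean

# Minimum sample sizes from the benchmark requirements above.
MIN_GPU_WUS = 5
MIN_CPU_WUS = 10


def benchmark_average(run_times_s, device="gpu"):
    """Return the mean run time (seconds) of all submitted WUs.

    Raises ValueError if fewer WUs than required were supplied.
    Include every completed WU, not just the fastest ones.
    """
    minimum = MIN_GPU_WUS if device == "gpu" else MIN_CPU_WUS
    if len(run_times_s) < minimum:
        raise ValueError(
            f"need at least {minimum} {device.upper()} WUs, got {len(run_times_s)}"
        )
    return round(mean(run_times_s), 1)


# Hypothetical GPU run times copied from the BOINC task list.
print(benchmark_average([95.8, 96.3, 96.1, 95.9, 96.4]))  # 96.1
```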

A dedicated physical CPU core for each GPU (for optimal MW WU times). If you only use BOINC for CPU tasks & you have an HT capable CPU, then the only way to be certain of this (bar disabling HT) is to set the BOINC computing preferences (in advanced mode > options) so that you run 2 fewer CPU threads than you have physical cores. Don't panic too much about lost CPU ppd, it doesn't take long to run MW GPU WUs (see table).
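As a rough sketch, here's one way to turn that rule into the "Use at most N % of the CPUs" value in the BOINC computing preferences. I'm assuming BOINC rounds the resulting thread count down and that your CPU has fewer than 100 threads; the function name is just for illustration, so check the task count BOINC actually runs afterwards.

```python
import math


def boinc_cpu_percent(physical_cores, logical_threads, reserve=2):
    """Smallest 'Use at most N % of the CPUs' value that leaves
    (physical_cores - reserve) threads for BOINC CPU tasks.

    Assumes BOINC rounds the resulting thread count down, and that
    logical_threads < 100 so a 1 % step never skips a whole thread.
    """
    cpu_threads = physical_cores - reserve
    if cpu_threads < 1:
        raise ValueError("not enough physical cores to reserve that many")
    return math.ceil(cpu_threads * 100 / logical_threads)


# i7 4930k: 6 cores / 12 threads, leaving 4 threads for CPU WUs
print(boinc_cpu_percent(6, 12))  # 34
```

With 34 % of 12 threads, BOINC would get 4.08 threads, which rounds down to the 4 we want; a plain 33 % would round down to 3.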

Please state what speed & type of CPU you have, as it now has a significant effect on GPU WU times!

Please state GPU clock speeds if overclocked (including factory overclocks) or state 'stock'.

Please only crunch 1 WU at a time per GPU, preferably. Or if you are running concurrent WUs, state how many & I'll put your time in the 2nd GPU table.
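If you want to compare the two GPU tables, a concurrent time has to be divided by the number of WUs running together. This sketch assumes the concurrent figures are the run time of each WU while N share the GPU (my reading of the tables, not something stated); the RX 580 numbers are taken from the single and 2 WU tables, which use the same card, clocks and CPU.

```python
def effective_seconds_per_wu(run_time_s, concurrent_wus):
    """Wall-clock seconds per completed WU when `concurrent_wus` tasks
    share one GPU and each one takes `run_time_s` to finish."""
    return run_time_s / concurrent_wus


# RX 580 8GB @1266 MHz: 96s singly vs 167s each with 2 WUs at once
single = 96.0
paired = effective_seconds_per_wu(167.0, 2)  # 83.5
print(f"throughput gain: {single / paired - 1:.0%}")  # throughput gain: 15%
```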

For CPU times please state whether Hyper Threading (or equivalent) is enabled or not, times for both states welcomed.

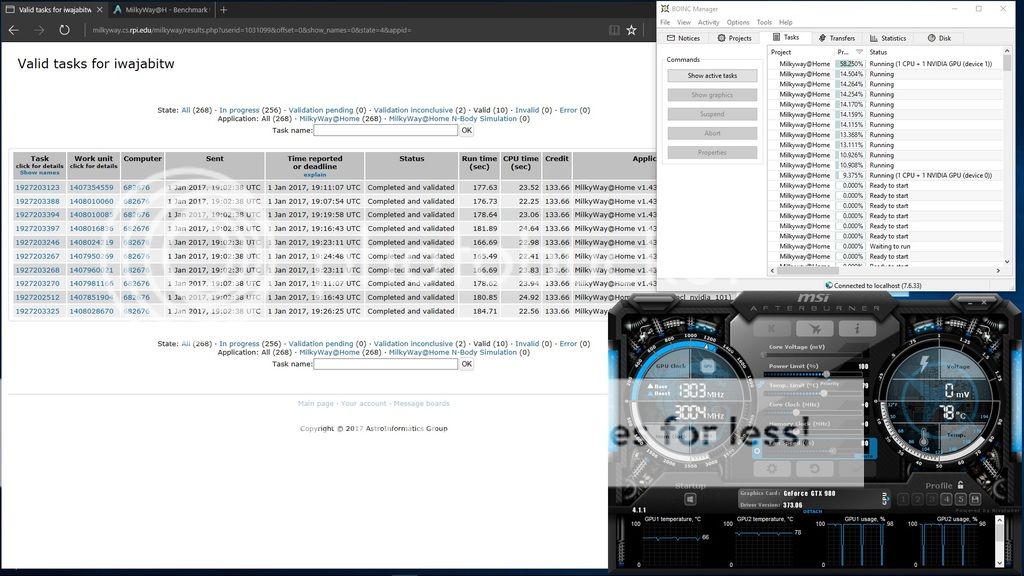

It would also be useful if you could state your BOINC & driver version, & OS, in case it does make any difference.

If you find your WU times are fluctuating by more than a couple of % for singly run WUs, then use GPU-Z or your graphics card driver tools to check that your GPU is able to hit near 100% load (although I'm not sure that Nvidia cards can hit that for MW). Note that even when crunching normally, the GPU load will be on/off with the current MW app, so the GPU load graph should look like a series of blocks. Just looking at my RX 580, it was going to zero load roughly every 27s.
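A quick way to check the "more than a couple of %" rule is to measure how far the worst WU strays from the average. This is a sketch: I've taken "a couple of %" as 2 %, and the run times are made up (one slow WU).

```python
from statistics import mean


def max_deviation_percent(run_times_s):
    """Largest deviation of any single WU from the mean, as a % of the mean."""
    avg = mean(run_times_s)
    return max(abs(t - avg) for t in run_times_s) / avg * 100


times = [96.0, 95.5, 96.5, 101.0, 96.0]  # hypothetical, one slow WU
spread = max_deviation_percent(times)
if spread > 2.0:  # assumed threshold for "a couple of %"
    print(f"{spread:.1f}% spread: check GPU load in GPU-Z")
```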

Also check in Task Manager that your CPU actually has the spare capacity to give to MW (& btw, GPU crunching won't show up in Task Manager).

(stupid forum s/w is now busted and won't let me make the names here clickable without linking everything in the following text!!!)

GPU statistics - Average Run Time to Complete a MW v1.46 227.5x credit WU :-

Firepro S9150, (CPU i3 4160) ............................................................................... 31.2s .... Icecold - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40165439

R9 280X, 900 MHz, (CPU Ryzen 9 3900X @~3.9 GHz) ........................................... 47s ....... Fardringle - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40334785

HD7870 XT 3GB (DS), GPU 850 MHz, (CPU, i7 4930k @4.1 GHz, 6 core) ......... 68s ...... Assimilator1 - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40317876

RX 6700 XT -6% power target, -25mV, (CPU Ryzen 9 5950X @~3 GHz) ........... 84s ....... Endgame124 - https://forums.anandtech.com/threads/6700xt-distributed-computing-results.2592770/post-40489586

RX 580 8GB, GPU 1266 MHz, (CPU Ryzen 5 3600 @~3.7 GHz) ......................... 96s ...... Assimilator1 - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40317876

R7 250X (CPU A10 7870k) .................................................................................. 405s ..... Endgame124 - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40419746

A10 7870k iGPU .................................................................................................. 472s ...... Endgame124 - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40419746

Quadro 4000 (CPU dual Xeon 5680s) ................................................................ 481s ...... Fardringle - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40324040
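To turn a table time into rough points per day (PPD), multiply the credit per WU by the number of WUs a GPU can finish in a day. This sketch assumes ~227.5 credits per WU and back-to-back crunching with no idle gaps, so real PPD will be somewhat lower.

```python
SECONDS_PER_DAY = 86_400


def points_per_day(credit_per_wu, seconds_per_wu, concurrent_wus=1):
    """Credit per day for one GPU running WUs back to back.

    Ignores upload/download gaps and validation delays.
    """
    return credit_per_wu * SECONDS_PER_DAY * concurrent_wus / seconds_per_wu


# RX 580 8GB from the table above: ~227.5 credits per 96s WU
print(round(points_per_day(227.5, 96)))  # 204750
```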

GPU statistics - Average Run Time to Complete concurrent MW v1.46 227.5x credit WUs :-

Radeon VII Pro, (CPU Xeon E5-1620 v3 @ 3.50GHz), 4 concurrent WUs ................... 30s ........ Holdolin - https://hardforum.com/threads/welcome-new-h-members-to-dc.2006491/post-1044892382

R9 280X, 900 MHz, (CPU Ryzen 9 3900X, ~3.9 GHz), 2 concurrent WUs ................... 77.2s .... Fardringle

HD7870 XT 3GB (DS), GPU 925 MHz, (CPU, i7 4930k @4.1 GHz, 6 core), 2 WUs .... 140s ....... Assimilator1 - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40322386

RX 580 8GB, GPU 1266 MHz, (CPU Ryzen 5 3600, ~3.7 GHz), 2 WUs ...................... 167s ....... Assimilator1 - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40322386

Quadro 4000, (CPU dual Xeon 5680s), 2 WUs ............................................................ 799s ....... Fardringle - https://forums.anandtech.com/thread...imes-wanted-for-new-wus.2495905/post-40324040

CPU statistics - Average Run Time to Complete a MW v1.46 227.5x credit WU :-

.....

************************************************************************************************************************************************************************************

Former benchmarking runs (different credit WUs and/or apps).

GPU statistics - Average Run Time to Complete 1 MW v1.46 227.62 credit WU :-

HD 7970, GPU 1200 MHz(!) (CPU, Xeon E5 ES 10 core @2.7 GHz, HT off) ..... 38.2s .... tictoc

R9 290, GPU 1000 MHz, (CPU, Xeon E5 ES 10 core @2.7 GHz, HT off) ........... 70.9s .... tictoc

HD 7870 XT 3GB(DS), GPU 925 MHz (CPU, C2 Q9550 @3.58 GHz) ................ 73.2s .... Assimilator1

RX 580 8GB, GPU 1350 MHz (CPU, i7 4930k @4.1 GHz) .................................. 97.3s .... Assimilator1

RTX 2080 Ti, GPU ???? MHz (CPU, i7-8700K @4.7 GHz no AVX) ................... 110.6s .... IEC

R7 iGPU on an AMD A12-9800 APU (CPU, 4.2 GHz) ....................................... 120.3s .... hoppisaur

RX 570, GPU stock (CPU, i7-4771 ?? GHz) ....................................................... 121s ....... Jim1348

Tesla T4, (CPU, ????) ......................................................................................... 151s ........ vseven

GPU statistics - Average Run Time to Complete multiple MW v1.46 227.62 credit WU :-

RX 570, GPU stock (CPU, i7-4771 ?? GHz) (2 concurrent WUs) ..................... 194s ....... Jim1348

CPU statistics - Average Run Time to Complete 1 MW v1.74 227.62 credit WU :-

**********************************************************************************************************************************

Since v1.46 was released on 1/5/17 (UK date format), the WU times & credits have changed. Times are apparently 'slightly longer', & the main WUs (99%+), and thus the new benchmark WU, were worth 227.23 credits. See this post for more info. See below the v1.46 table for the other benchmark requirements.

Btw, watch out for the 227.26 credit WUs, they are very rare (approx. 1% of WUs atm), but despite their tiny increase in credit they take about 5% longer, at least on my HD 7970, ~56s vs 53s.
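The arithmetic behind that warning, using the HD 7970 figures above: the run time goes up far more than the credit does, so credit per second actually drops.

```python
def percent_change(new, old):
    """Signed percentage change from old to new."""
    return (new - old) / old * 100


print(f"run time: {percent_change(56, 53):+.1f}%")          # run time: +5.7%
print(f"credit:   {percent_change(227.26, 227.23):+.2f}%")  # credit:   +0.01%
print(f"credit/s: {percent_change(227.26 / 56, 227.23 / 53):+.1f}%")
```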

GPU statistics ~ Average Run Time to Complete 1 MW v1.46 227.23 credit WU :-

R9 280X, GPU 1030 MHz (CPU, ???) ................................................................... 50.4s .... JoeM

HD 7970, GPU 1000 MHz (CPU, i7 4930k @4.1 GHz) ........................................ 53s ....... Assimilator1

Vega 56, stock (CPU, 2500k @4.3 GHz) .............................................................. 63s ....... Chooka

HD 6970, GPU 890 MHz (CPU, Phenom II X6 1090T, stock) .............................. 94s ....... Hassan Shebli

HD 6970, stock (CPU, ???????) .......................................................................... 107s ....... JoeM

RX 480 8GB, GPU o/c to? (CPU, Phenom II X6 1100T @?) .............................. 110s ....... Darrell

HD 5870, GPU 900 MHz, (CPU, ?????? ) ............................................................ 116s ....... JoeM

RX 470 4GB, GPU 1205 MHz (CPU, Phenom II 1100T, stock) .......................... 127s ....... [AF>HFR] Seeds

GTX 1070 Ti, GPU 2 GHz (CPU, Ryzen 1700X @3.9 GHz) ................................ 170s ...... Keith Myers

GTX 1060, stock (CPU, Pentium G3900) ........................................................... 250s ...... DVDL

HD 7750, stock (CPU, ? ) ..................................................................................... 647s ..... JoeM

CPU statistics ~ Average Run Time to Complete 1 MW v1.46 227.23 credit WU :-

Ryzen R7 1700X (8C, stock 3.4 GHz, RAM o/c 2667 MHz) .................................. 3315s no HT ... JoeM

Ryzen R7 1700X (8C, stock 3.4 GHz, RAM o/c 2667 MHz) .................................. 4428s HT on ... JoeM

8350 (7C, ?????) ...................................................................................................... 5105s .............. JoeM

8350 (7C, ?????) ...................................................................................................... 5388s .............. JoeM

**********************************************************************************************************

Old app GPU statistics ~ Average Run Time to Complete 1 MW v1.42 133.66 credit WU :-

HD 7970, GPU 1250 MHz (CPU, AMD R7 1700 @3.8 GHz) ................................ 32.1s ... tictoc

R9 280X, GPU 1080 MHz (CPU, Pentium G3220 @3 GHz) ................................. 40.1s ... Tennessee Tony

HD 7970, GPU 1000 MHz (CPU, i7 4930k @4.1 GHz) ......................................... 42s ...... Assimilator1

R9 280X, Stock (CPU, C2D E6550, stock) ............................................................. 54.3s ... iwajabitw

R9 280X, GPU 1020 MHz (CPU, AMD FX8320E @3.47 GHz) .............................. 54.8s ... Tennessee Tony

HD 7950, GPU 860 MHz (CPU, i7 3770k, stock) .................................................. 56.5s ... salvorhardin

HD 7870 XT 3GB(DS), GPU 925 MHz (CPU, C2 Q9550 @3.58 GHz) .................. 56.8s ... Assimilator1

R9 390, GPU 1015 MHz (CPU, i7 3770k, stock) ................................................... 60.7s ... salvorhardin

R9 Fury, GPU 1050 MHz (CPU, i7 5820k @4.4 GHz) .................................................... 65.9s ... crashtech

RX 480, GPU 1415 MHz, RAM 2025 MHz (CPU, i5 6600k, 4.6 GHz) .................. 72.1s ... TomTheMetalGod

HD 6950, stock (CPU Athlon2 X4 620 @2.6 GHz) ............................................. 101.2s ... waffleironhead

GTX 1080, GPU 2000 MHz (CPU, i7 6950X @4 GHz) ........................................ 116s ...... StefanR5R

GTX 980, GPU 1303 MHz (CPU, i7 5820k @3.3 GHz) ....................................... 184s ...... iwajabitw

RX 460, GPU 1244 MHz (CPU, i5 4460 @3.2 GHz) ............................................ 240.5s ... waffleironhead

Quadro K2100M, stock (CPU, i7 4900 MQ turbo @3.8 GHz) ............................ 1784s ...... StefanR5R

StefanR5R has posted a load of scores here. So if you're interested in scores for a Xeon E5-2690 v4, Phenom II X4 905e, Core 2 T7600, i7 6950X, i7 4960X, i7 4900MQ, GTX 1070, GTX 1080 (I put the highest clock score in the table above), & a Firepro W7000 then check out his very useful post!

Current CPU statistics ~ Average Run Time to Complete 1 MW v1.4x 133.66 credit WU :-

i7 5820k @3.3 GHz ......................................................................... 2723s no 'HT load' .... iwajabitw

i7 4930k @4.1 GHz (6 threads for CPU) ....................................... 2825s no 'HT load' .... Assimilator1

i7 4930k @4.1 GHz (10 threads for CPU, 2 for GPU).................... 4171s HT on .............. Assimilator1

i7 4930k @4.1 GHz (12 threads for CPU) ..................................... 4557s HT on .............. Assimilator1
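Longer per-WU times with HT on don't mean lower output, because more WUs run at once. A sketch using the i7 4930k rows above, assuming each thread runs one single-threaded WU continuously:

```python
def wus_per_hour(concurrent_threads, seconds_per_wu):
    """CPU WUs completed per hour with one WU running on each thread."""
    return concurrent_threads * 3600 / seconds_per_wu


# i7 4930k @4.1 GHz figures from the table above
no_ht = wus_per_hour(6, 2825)   # 6 threads, no 'HT load'
ht_12 = wus_per_hour(12, 4557)  # all 12 threads
print(f"{no_ht:.2f} vs {ht_12:.2f} WUs/hour ({ht_12 / no_ht - 1:+.0%})")
# 7.65 vs 9.48 WUs/hour (+24%)
```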

***********************************************************************************************

Info:-

My previous MW benchmark thread spring 2014 - summer 2016

Stock clocks for some of the graphics cards commonly used for MW (& cards with good double precision power), source: Wikipedia (GPU/RAM in MHz, or MT/s where stated) :-

AMD .............................GPU/RAM ................................... DP GFLOPS

HD 4890 ...................... 850/975 ....................................... 272*

HD 5830 ...................... 800/1000 ..................................... 358

HD 5850 ...................... 725/1000 ..................................... 418

HD 5870 ...................... 850/1200 ..................................... 544

HD 5970 ...................... 725/1000 (dual GPU) .................. 928

HD 6930 ...................... 750/1200 ..................................... 480

HD 6950 ...................... 800/1250 ..................................... 563

HD 6970 ...................... 880/1375 ..................................... 675

HD 6990 ...................... 830/1250 (dual GPU) ................ 1277

HD 7870 XT ................. 925-975/1500 ............................. 710-749

HD 7950 ...................... 800/1250 ..................................... 717

HD 7950 Boost ........... 850-925/1250 .............................. 762-829

HD 7970 ...................... 925/1375 ..................................... 947

HD 7970 GE ............... 1000-1050/1500 ......................... 1024-1075

HD 7990 ..................... 950-1000/1500 (dual GPU) ........ 1894-2048

R9 280 ........................ 827-933/1250 .............................. 741-836

R9 280X ...................... 850-1000/1500 ............................ 870-1024

R9 290 ........................ >947/5000 MT/s .......................... 606

R9 290X ...................... >1000/5000 MT/s ....................... 704

R9 295X2 .................... 1018/5000 MT/s (dual GPU) .... 1433

R9 390 ........................ >1000/6000 MT/s ....................... 640

R9 390X ...................... >1050/6000 MT/s ....................... 739

R9 Fury ....................... 1000/1000 MT/s ......................... 448

R9 Nano ..................... 1000/1000 MT/s .......................... 512

R9 Fury X ................... 1050/1000 MT/s .......................... 538

R9 Pro Duo ................ 1000/1000 MT/s (dual GPU) ....... 900

RX 470 ........................ 926-1206/6600 MT/s .................. 237

RX 480 ...................... 1120-1266/7000-8000 MT/s ........ 323

RX Vega 56 .............. b/w 410 GB/s ................................. 518-659

RX Vega 64 ...............1890 MT/s ...................................... 638-792

RX Vega 64 Liquid .... 1890 MT/s ..................................... 720-859

RX 580 ...................... b/w 256 GB/s ................................. 362-386

RX 5700 XT .............. 1605-1905 b/w 448 GB/s ............. 562

Wow, just noticed how feeble the entire RX 400 series is at double precision! Even the top of the line (as of 12/16) RX 480 only manages 323 GFLOPS, which is a little less than the HD 5830's 358 from 2/2010 & only a bit more than the HD 4890's 272 from 4/2009! Although it is more than the R9 380X's 248 GFLOPS.

I see I should use memory bandwidth rather than clock rate; clock rate is misleading for the Vegas, as they actually have much higher bandwidth than the 480/580s. The RX 580 is 256 GB/s, the Vega 56 410 GB/s! (added).
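Why the clock rate misleads: peak bandwidth is the data rate times the bus width, and HBM2 on the Vegas uses a very wide bus. The bus widths below (256-bit GDDR5 on the RX 580, 2048-bit HBM2 on Vega 56/64) are my additions, not from the table.

```python
def bandwidth_gb_s(data_rate_mt_s, bus_width_bits):
    """Peak memory bandwidth in GB/s: transfers/s x bytes per transfer."""
    return data_rate_mt_s * 1e6 * (bus_width_bits / 8) / 1e9


print(round(bandwidth_gb_s(8000, 256)))   # RX 580, GDDR5: 256
print(round(bandwidth_gb_s(1600, 2048)))  # Vega 56, HBM2: 410
print(round(bandwidth_gb_s(1890, 2048)))  # Vega 64, HBM2: 484
```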

I can see it won't be long before we have ancient 5800s, 6900s & 7900s (& 7870 XTs) as a secondary card in our rigs solely for crunching MW & Einstein, & modern cards for gaming & SP DC! ..........maybe I'm behind the times & some of you guys are already doing that!?

* The 4800s can't run MW atm, see here

NVidia ...............................GPU/RAM ....................... DP GFLOPS

GTX 980 ................ 1126-1216 MHz/7010 MT/s .............. 144

GTX 980 Ti ............ 1000-1076 MHz/7010 MT/s .............. 176

GTX 1060 6GB ...... 1506-1708 MHz/8000 or 9000 MT/s 120-137

GTX 1070 .............. 1506-1683 MHz/8000 MT/s .............. 181-202

GTX 1080 .............. 1607-1733 MHz/10,000 MT/s ........... 257-277

RTX 2080 .............. 1515-1710 MHz/14,000 MT/s ........... 279-315

RTX 2080 Ti .......... 1350-1545 MHz/14,000 MT/s ........... 367-421

Why so few NVidia cards? Well traditionally AMD's cards had (& still have?) far better DP performance, but I will add more if they crop up more in the benchmarks, or if requested.
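The DP GFLOPS columns can be roughly reproduced as shader count × 2 FLOPs per clock (one fused multiply-add) × clock × the card's DP:SP ratio, which is also why the Nvidia consumer cards (1/32 rate) lag so far behind. The shader counts and ratios in this sketch are my additions, not from the tables, so double-check them against your card's spec sheet.

```python
def dp_gflops(shaders, clock_mhz, dp_ratio):
    """Peak double-precision GFLOPS.

    Each shader does one fused multiply-add (2 FLOPs) per clock in single
    precision; double precision runs at `dp_ratio` of that rate.
    """
    return shaders * 2 * clock_mhz * dp_ratio / 1000


print(round(dp_gflops(2048, 925, 1 / 4)))    # HD 7970: 947
print(round(dp_gflops(2304, 1120, 1 / 16)))  # RX 480 (base clock): 323
print(round(dp_gflops(2560, 1607, 1 / 32)))  # GTX 1080 (base clock): 257
```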
