- Sep 5, 2003


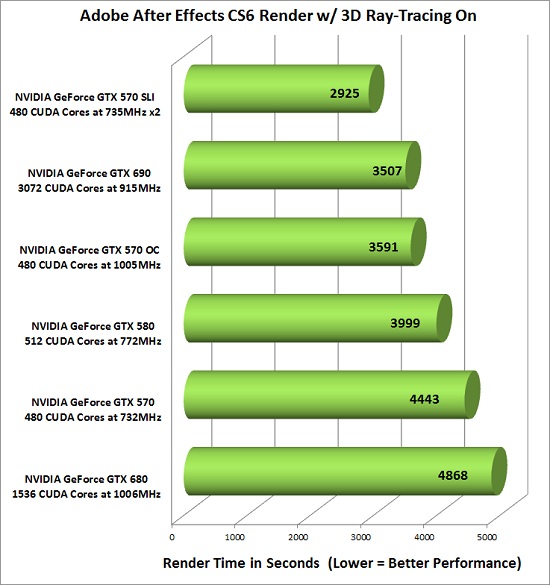

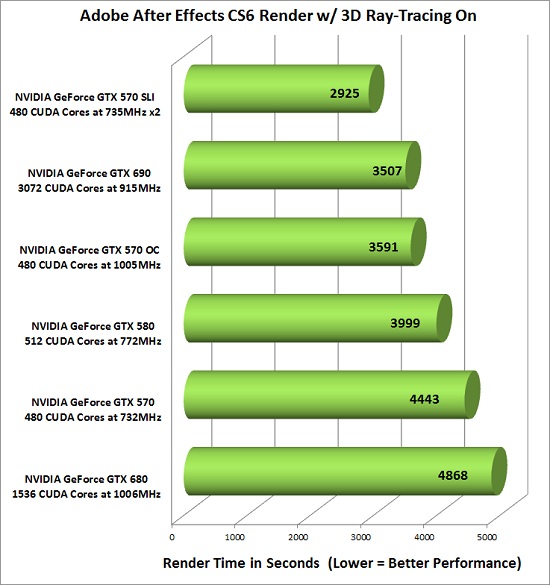

After Effects CS6 version 11.0.2.12 finally supports the NVIDIA GeForce GTX 690 card for ray-traced 3D rendering.

"Our test results show that the NVIDIA GeForce GTX 580 'Fermi' video card was about 18% faster than the NVIDIA GeForce GTX 680 video card! This result might shock some, but not others. Kepler is certainly faster when it comes to gaming, but when it comes to raw compute performance the clear leader is still Fermi! A pair of NVIDIA GeForce GTX 570 video cards running in SLI finished the render in just 48 minutes, which is impressive considering NVIDIA's flagship GeForce GTX 690 video card completes the same task in 58 minutes!

Are you wondering how long it took to do the render on just the Intel Core i7 2700K processor at 5 GHz in "classic 3D" mode? It took only 7 minutes and 36 seconds, but was about 75% of the quality of the ray-traced version! Running ray-traced 3D on the NVIDIA graphics card was the way to go when it came to quality, but that is another story for a different day!"

Source
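To put the quoted render times side by side, here is a quick back-of-the-envelope calculation. It is only a sketch: it assumes "X times faster" means the ratio of wall-clock render times, and the helper names `to_seconds` and `speedup` are hypothetical, made up for this illustration.

```python
def to_seconds(minutes, seconds=0):
    """Convert a minutes/seconds render time into seconds."""
    return minutes * 60 + seconds


def speedup(slower, faster):
    """Ratio of render times: how many times faster the 'faster' run finished."""
    return slower / faster


# Render times quoted in the benchmark above
gtx570_sli = to_seconds(48)       # two GTX 570s in SLI, ray-traced
gtx690 = to_seconds(58)           # single GTX 690, ray-traced
cpu_classic = to_seconds(7, 36)   # i7 2700K @ 5 GHz, "classic 3D" mode

print(f"GTX 570 SLI vs GTX 690: {speedup(gtx690, gtx570_sli):.2f}x")
print(f"Classic 3D (CPU) vs GTX 690 ray-traced: {speedup(gtx690, cpu_classic):.2f}x")
```

By that measure the SLI pair finishes roughly 1.21x faster than the GTX 690, while the CPU-only classic 3D render is about 7.6x faster than the GTX 690 ray-traced one, at the cost of the quality drop mentioned in the quote.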

Right now an overclocked GTX 570 gives a similar level of performance to a GTX 690. Assuming the Titan fixes Kepler's compute performance, it might be the ticket for those who play games and do other things with their GPU but can't afford K20/K20X cards.
