AMD's FX line hasn't had a true refresh in a while. Recently some new models were released with an "e" suffix, indicating they're binned for lower power consumption, but AFAIK there were no design changes.

A brief history:

In late 2011, AMD released the Bulldozer-based FX-81xx, 61xx and 41xx as the successor to Stars (Phenom II), which was largely a Core2 competitor. Bulldozer introduced "modules", which were basically pairs of cores that shared resources. If both cores in a module were in use, each would suffer a performance penalty (something like 25% on BD?), but the advantage was that a module was significantly smaller than two independent cores would have been. It was up against Sandy Bridge, which had been on the market for around 9 months. Generally speaking, Bulldozer was slower than Stars and consumed more power, despite a process shrink (45 nm to 32 nm). Since Stars had only been a Core2 competitor and Intel already had its 2nd-generation i7 out, Bulldozer was pretty non-competitive with Intel's offerings.

In April 2012, Intel released Ivy Bridge, a die shrink and slight design change from Sandy Bridge. Performance was up 0-10%, while power consumption was down. Later that year, AMD released the Piledriver-based FX-83xx, 63xx and 43xx, which improved significantly on Bulldozer's single-threaded performance, clocked higher, and suffered less of a penalty from the module's shared resources. Despite this, an Ivy Bridge core was often more than 50% faster than a Piledriver core. AMD would sell you twice as many cores for the same price, though, which made the FX-8 chips a fair value at around $250. You could get performance competitive with an i7 (if your programs could saturate 8 threads) for $75 less, though single-threaded performance was still relatively bad, and power consumption with all threads saturated was considerably higher. Games such as StarCraft 2 and Guild Wars 2 performed badly on Piledriver, while Battlefield ran as well as or better than on Intel's counterparts.

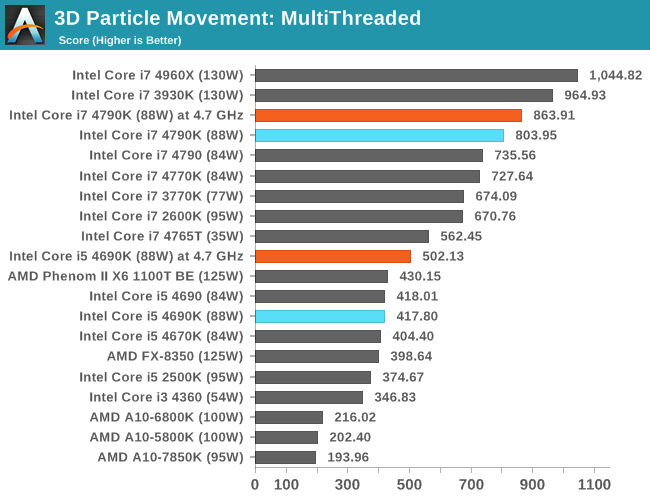

Since then, AMD has not released a new FX architecture on AM3+, only Steamroller-based 2-module/4-core APUs (Kaveri) on FM2+, while Intel has released Haswell, which brought another single-threaded performance improvement and efficiency bump, and is relatively close to releasing Haswell's successor, Broadwell. AMD has gradually lowered its prices, and it's arguable that at around $100, an FX-8310 would be a suitable chip for some. Its multithreaded performance is equal to or better than that of a Haswell i5, which starts at around $180, but its power consumption for the same amount of work is much higher, and Haswell's single-threaded performance is 50-60% higher. AM3+ is also a very old socket and lacks some of the features of AMD's more recent FM2+ and Intel's LGA 1150, which matters to some but often isn't a deal-breaker.

The FX-9xxx series is a recent release of chips binned to run at very high frequencies, but their power consumption is also very high (220 W TDP), so much so that AMD originally shipped them with watercoolers. They are also a lot more expensive than the FX-8xxx chips.

~

It's worth noting that most games, while bottlenecked by Piledriver's poor single-threaded performance, still hold minimums above 40 fps. This may improve once DirectX 12 is released, but Piledriver will probably be more than 4 years old before we see the first games that take advantage of it.

Here is a benchmark from the Battlefield Hardline beta:

Far Cry 4:

Call of Duty: Advanced Warfare:

AMD's chips also idle nearly as efficiently as Intel's, and their multithreaded performance is significantly better than that of Intel's similarly priced i3:

I probably wouldn't put one in a gaming rig though.