railven


- Mar 25, 2010


I played FC4 and Dying Light just fine at launch...

Same way I played Diablo 3 fine at launch. We're the exceptions. It feels good to be them sometimes.

I played FC4 and Dying Light just fine at launch...

AMD is to blame for Fury's lackluster debut.

I played FC4 and Dying Light just fine at launch...

Far Cry 4 : https://www.youtube.com/watch?v=M25Le3JdHH8

AC Unity : https://www.youtube.com/watch?v=SgpzT5V5Mgs

Arkham Knight : https://www.youtube.com/watch?v=bFBd5GgGkMs

Forum anecdotes do not reality make. People were claiming Arkham Knight ran great for them as well, with no issues. Meanwhile, the game has been pulled from shelves because it's just that broken.

Dying Light had a memory leak that caused RAM usage to climb until the game crashed to the desktop. I had that issue for a few weeks until they patched it. I'm surprised you had no issues; I guess you were one of the lucky ones.

Exactly my point. Initially people were trying to lay the blame on Ubisoft as a crap developer - partially true :awe: - because Unity, Watch Dogs and Far Cry 4 all shipped broken. Now, though, we've seen games from other developers using GameWorks ship broken as well.

The common denominator is GameWorks: Dying Light, Watch Dogs, Far Cry 4, Arkham Knight, AC: Unity. All shipped seriously broken, and most continue to have issues. All suffered from terrible optimization, buggy graphics, etc. Arkham Knight is so bad they pulled it from shelves. Even The Witcher 3, while not a GameWorks title, implements HairWorks, and that costs you 15-20 fps at all times just to mildly alter Geralt's hair. This is all the inefficiency of GPU PhysX, amplified to the point of ruining game optimization.

GameWorks really is a disaster for gaming. I would expect game developers to turn away from it until it's fixed or axed. What developer wants to follow in the footsteps of Rocksteady and have to pull a game off the shelves? The game runs fine on consoles, within their 30 fps limitations. What's different on PC? GameWorks. In fact, it's even worse on PC, as half the features are bugged and don't work - see ambient occlusion, which is broken on PC and doesn't even activate...

There may be a bit of reversed cause and effect there. The devs who are lazy enough to make awful games may well be the ones who are lazy enough to scab GW onto their stuff.

Either way, I personally find GW beneficial because it puts a nice logo on all the games I may as well ignore.


Arkham Knight has a 30fps lock and elements of the physics engine and texture streaming break when you mod it to run at 60fps. Nvidia doubled the speed of their promo video to put up a 1080p60fps video.

https://youtu.be/zsjmLNZtvxk?t=25

In the background you can hear the NPCs' voices sounding like chipmunks, because the video was sped up from 30 fps to 60.
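The chipmunk effect follows from simple arithmetic. A minimal Python sketch, assuming the audio track was sped up by the same factor as the frames with no pitch correction (which is what the chipmunk voices suggest, but is not confirmed): doubling playback speed doubles every frequency, which is one octave, or 12 semitones, up. The function names and the 200 Hz voice pitch are hypothetical illustration values.

```python
import math


def speedup_factor(source_fps: float, target_fps: float) -> float:
    """Playback-speed multiplier when footage captured at source_fps is
    re-timed so the same frames play back at target_fps."""
    return target_fps / source_fps


def pitch_shift_semitones(factor: float) -> float:
    """Pitch change, in semitones, for audio sped up by `factor` with no pitch
    correction: every frequency is multiplied by `factor`, and each doubling
    is one octave (12 semitones)."""
    return 12 * math.log2(factor)


if __name__ == "__main__":
    factor = speedup_factor(30, 60)
    voice_hz = 200.0  # hypothetical fundamental frequency of an NPC's voice
    print(f"speed-up: {factor}x")
    print(f"pitch shift: {pitch_shift_semitones(factor)} semitones")
    print(f"{voice_hz} Hz voice now sounds at {voice_hz * factor} Hz")
```

Under that assumption a 2x speed-up shifts every voice up a full octave and halves the clip's running time, which is why the sped-up footage is easy to spot by ear.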

```
if (GPU == "AMD") {
    gameBreaks();
} else if (GPU == "Nvidia") {
    gameWorks();
}
```

Found gameworks source code

```php
if ($GPU == "AMD" || $GPU == "Kepler") {
    gameBreaks();
} elseif ($GPU == "Maxwell") {
    gameWorks();
}
```

for anyone who missed it

https://www.youtube.com/watch?v=fZGV5z8YFM8

AMD interview

There is one way in which this is worryingly similar to the Conroe situation: AMD failed to win the performance crown in spite of having a new technology that the competitor lacked. In this case, it's HBM. In the case of K10, it was the on-die memory controller (Conroe still used a classic front-side bus). When Intel integrated the memory controller in Nehalem (which could basically be described as Conroe + IMC), they took another big step ahead of AMD, and AMD was never really able to catch up. What will happen when Nvidia starts using HBM2? At that point AMD will have to compete on raw architecture alone, and a die-shrunk GCN 1.2 doesn't look like it will be competitive enough with Pascal (Maxwell + HBM2).

That said, AMD's CPU business still managed to do OK for a while even after Conroe's release. It was a serious blow, but not an insta-kill. Thuban was a decent budget alternative to Nehalem, especially if you did lots of multi-threaded work, and it didn't do too badly on single-threaded tasks either. It was Bulldozer that really torpedoed AMD's CPU division. Billions of dollars of R&D spent on a product that in many ways was objectively worse than its own predecessor. AMD would have been far better off with a straight die-shrink of Thuban followed by incremental tweaks.

Disagree. GameWorks is the universal constant in all these broken titles that use it. It's worth looking at the GameWorks information: it goes beyond just turning on GPU compute effects like smoke or debris. It does shadowing, animations, fire, etc. Games using it have that functionality throughout the game, without the ability to disable any of it.

My comment had nothing to do with the Fury X reviewing badly. The card is just bad, poorly positioned in terms of its performance, price, etc. GameWorks is hurting users of both AMD and Nvidia cards, though, with broken games, terrible performance, abysmal optimization, etc. It needs to go or Nvidia needs to fix it, because currently it's a disaster for gaming.

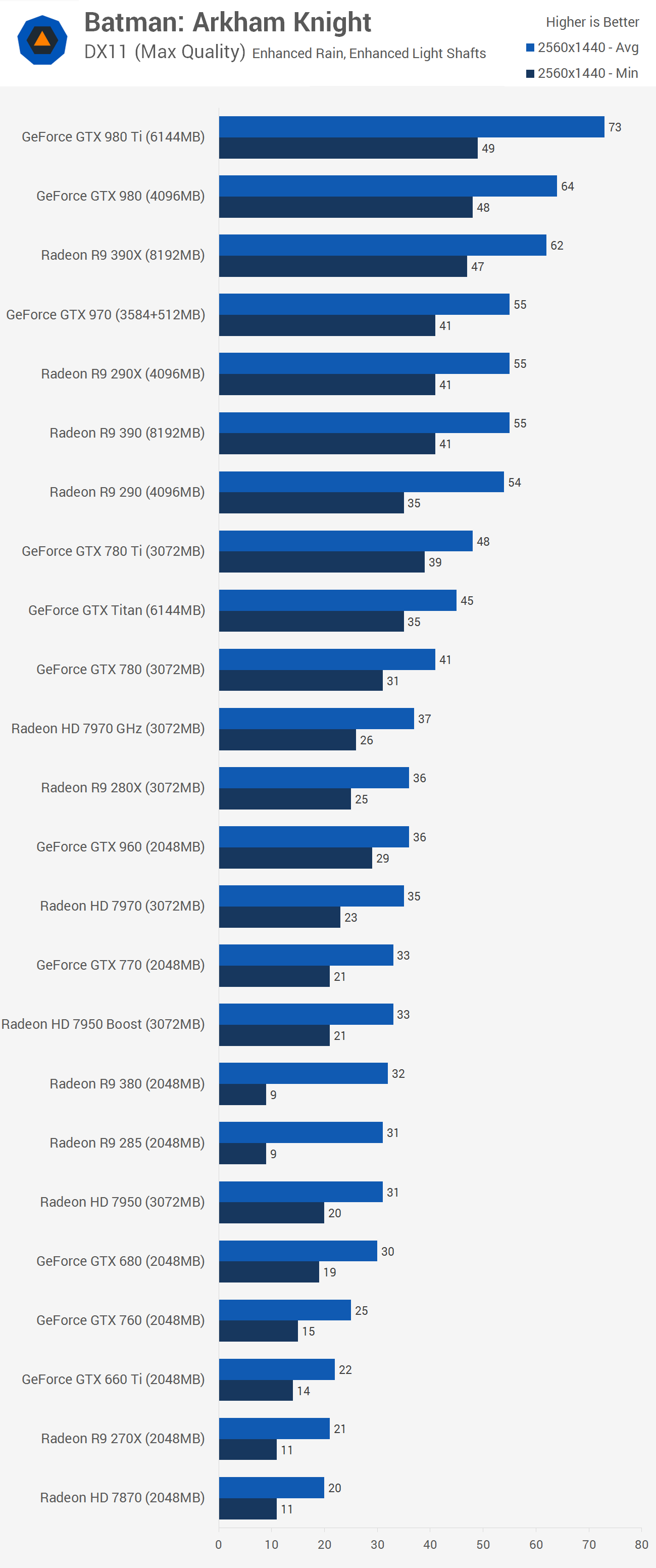

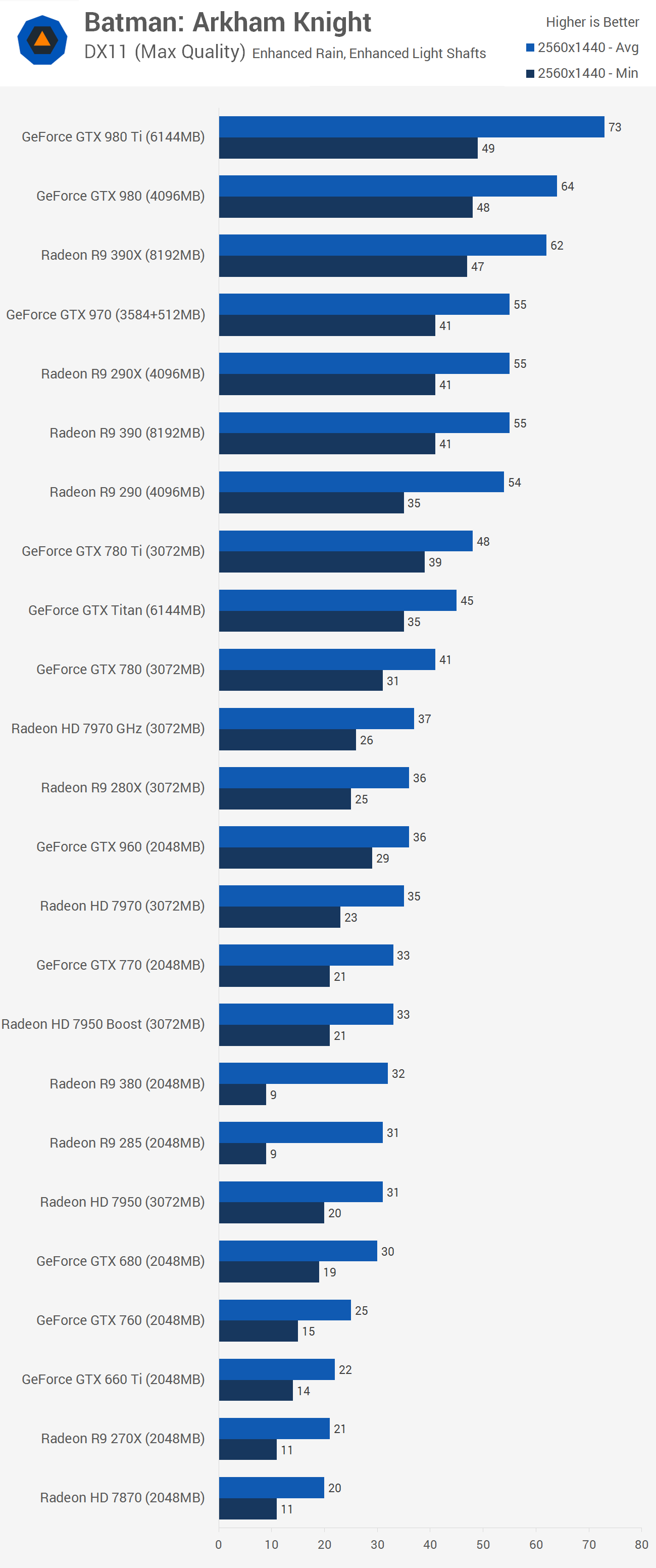

Yet another GameWorks title that Kepler tanks in... well done, nVidia, the "bug" is truly a "feature".

Look at how crap the 780, Titan and 780 Ti are in relation to GCN and Maxwell.

Maxwell is a generation ahead of the 780 Ti. I don't understand the exaggeration going on here. The 780 Ti has a min of 39 and an avg of 48 fps, compared to the GTX 970's min of 41 and avg of 55. The 960 is showing its architectural advantage over last gen's 770 by a good margin, but it's barely playable at this resolution and these settings. The 770 will need reduced settings to be playable.
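To put the quoted figures in perspective, the gap can be expressed as a percentage lead. A minimal Python sketch using only the min/avg fps numbers cited above; the helper name `pct_faster` is illustrative, not from any benchmark tool.

```python
def pct_faster(new_fps: float, old_fps: float) -> float:
    """Percentage by which new_fps exceeds old_fps."""
    return (new_fps / old_fps - 1) * 100


# Figures quoted in the post (fps at the chart's resolution and settings).
GTX_780_TI = {"min": 39, "avg": 48}
GTX_970 = {"min": 41, "avg": 55}

if __name__ == "__main__":
    for metric in ("min", "avg"):
        lead = pct_faster(GTX_970[metric], GTX_780_TI[metric])
        print(f"{metric}: GTX 970 leads the 780 Ti by {lead:.1f}%")
```

On these numbers the 970 leads by about 14.6% in average fps but only about 5.1% in minimums.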

Kepler seems to be right where it's supposed to be in relation to a newer generation of GPUs. I don't remember Fermi getting slammed when Kepler outshined it and Fermi fell behind in performance improvements.

I'm not saying there won't be any improvements over time for Kepler. A recent driver just delivered a performance improvement for Kepler.

At any rate, "overblown" is the word of the week around here.

Yes, Kepler is behind Maxwell, as it should be. That's nowhere near the "tank" descriptor you use.

Trying to use Batman as an example of anything seems a bit silly being as there are few more broken PC ports out there...

As for GameWorks working better with Maxwell: well, yes, they code the effects to play to Maxwell's strengths, not Kepler's. Previously they coded the effects to work best on Kepler; Maxwell arrived and was still a bit faster, but obviously when you code for Maxwell the gap will grow.

The same thing would probably happen for AMD. It's nothing to do with forward thinking; it's just that their cards' architecture has barely changed in 3.5 years. If they actually released a next-gen architecture, the current gen would fall away rapidly. In the past AMD were notorious for that, with the drivers only supporting the current gen well.

GameWorks pretty much tanks performance when it's on, regardless of whether you're on Maxwell, Kepler or GCN, for (IMO) very minimal visual gains. It just takes the smallest performance hit on Maxwell.