No, this has been the case for quite a while now. You've already drawn this conclusion in your head and have sought out evidence to support your unsubstantiated notions. BF4 is very highly threaded and shows no significant advantage (even in DX11) for Nvidia. Max Payne 3 is older now, but it is very well threaded (it shows improvements on up to 6 cores) and had no advantage for Nvidia (after a couple of patches/driver updates).

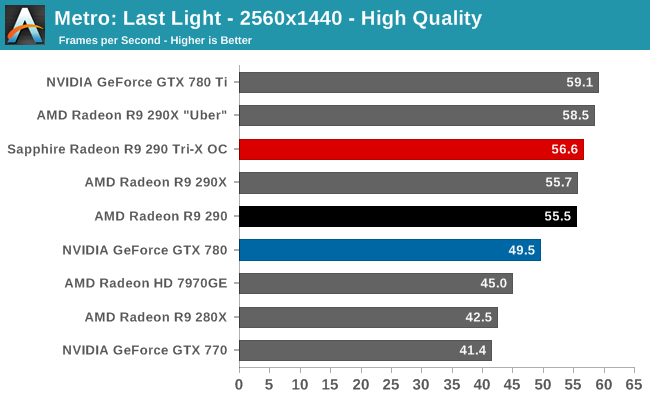

Metro Last Light scaled up to 6 threads and, guess what, there's no advantage for Nvidia.

I was going to write a full response, but then I thought better of it. We could keep arguing and debating this subject forever. Right now, there just isn't enough data to come to any firm conclusions.

We'll just have to wait until the inevitable deluge of next-gen games arrives, particularly the big open-world ones like AC Unity, Batman: Arkham Knight, Dragon Age: Inquisition, Witcher 3, etc.

One thing I have to say, though, is that you're confusing multithreaded games with multithreaded rendering, which is what I've been attributing NVidia's strong performance in Watch Dogs to. The two terms are not really synonymous.

Max Payne 3 and Metro Last Light are both multithreaded, but as far as I know they do not use multithreaded rendering. To my knowledge, only a few engines do: the LORE engine used in Civ 5, Frostbite 3, CryEngine 3, the Disrupt engine, and Unreal Engine 4, plus some others that will be making their debut this year and next.

Anyway, after thinking about things for a bit, I think you may be right that so many variables come into play in determining how a video card performs in a given game; certainly more than just the number of threads an engine supports.

There's something about Watch Dogs that's different. No other game I can think of matches Watch Dogs in how much detail is being rendered at any given moment. Maybe that's it. I still believe that NVidia has lower CPU overhead and superior multithreading optimizations, but it's nowhere near as simple as I thought.

One other thing: that HardOCP benchmark was done shortly after the game was released. Multiple patches and driver updates since then have certainly changed the game's performance characteristics, so I don't think it's even valid anymore.