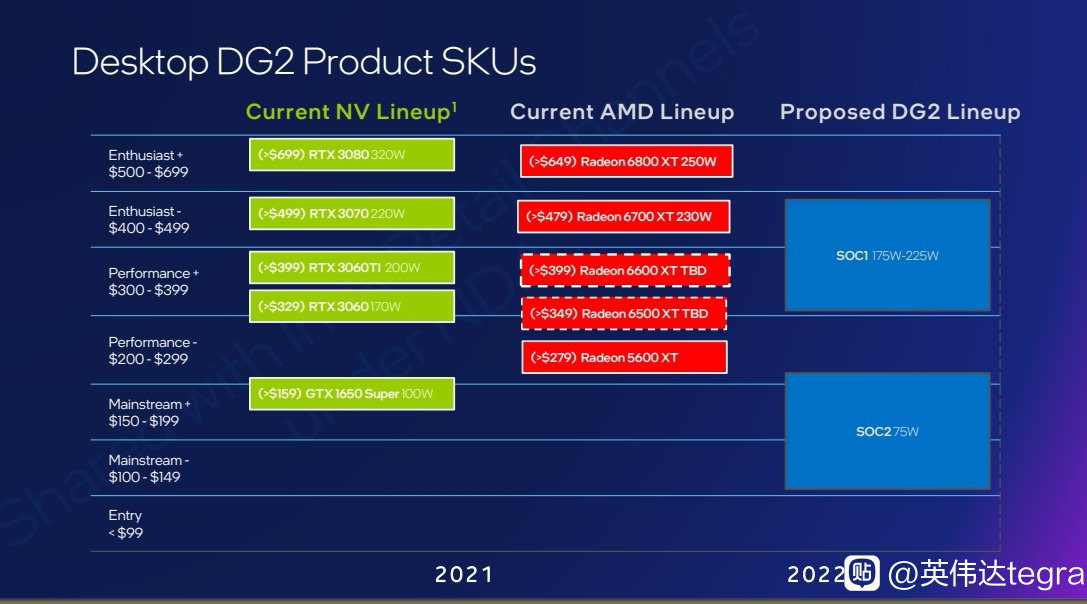

Current speculation/rumors say that DG2 lands anywhere between the RTX 3060 and the RTX 3070 Ti.

LOL.

Well, we can make some assumptions.

512 EUs (4096 ALUs), 128 ROPs, 256-bit bus

384 EUs (3072 ALUs), 96 ROPs, 192-bit bus

256 EUs (2048 ALUs), 64 ROPs, 128-bit bus

128 EUs (1024 ALUs), 32 ROPs, 64-bit bus
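For anyone who wants to check the arithmetic: every config in that list follows the same ratios, namely 8 ALUs per EU, 1 ROP per 4 EUs, and a bus width in bits equal to half the EU count. Here is a quick sketch that regenerates the list from the EU count alone. The ROP and bus ratios are just read off the assumed numbers above, not anything Intel has confirmed, and `dg2_config` is a made-up helper name.

```python
# Ratios read off the assumed configs in this post (not confirmed specs).
ALUS_PER_EU = 8      # 512 EUs -> 4096 ALUs
EUS_PER_ROP = 4      # 512 EUs -> 128 ROPs
EUS_PER_BUS_BIT = 2  # 512 EUs -> 256-bit bus


def dg2_config(eus):
    """Derive the assumed ALU count, ROP count and bus width from the EU count."""
    return {
        "eus": eus,
        "alus": eus * ALUS_PER_EU,
        "rops": eus // EUS_PER_ROP,
        "bus_bits": eus // EUS_PER_BUS_BIT,
    }


if __name__ == "__main__":
    for eus in (512, 384, 256, 128):
        cfg = dg2_config(eus)
        print(
            f"{cfg['eus']} EUs ({cfg['alus']} ALUs), "
            f"{cfg['rops']} ROPs, {cfg['bus_bits']}-bit bus"
        )
```

If a different SKU shows up in the rumors, plugging its EU count into `dg2_config` shows what the rest of the config would be under the same assumptions.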

Let's assume that it has per-ALU performance parity with Turing, not Ampere.

2048 ALUs would have RTX 2060 Super performance, or better (RTX 3060 level).

3072 ALUs would have RTX 2080 Super performance, or better with higher clock speeds.

4096 ALUs would land slightly below RTX 2080 Ti performance, which lines up directly with the rumored performance numbers.
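A rough sanity check on those pairings, assuming per-ALU parity with Turing and equal clocks (both are big assumptions; real performance also depends on clocks, memory bandwidth and drivers). The CUDA core counts are the published Turing specs, and `relative_throughput` is just an illustrative helper that divides ALU counts.

```python
# Published CUDA core counts for the Turing cards used in the comparison.
TURING_CUDA_CORES = {
    "RTX 2060 Super": 2176,
    "RTX 2080 Super": 3072,
    "RTX 2080 Ti": 4352,
}

# Pairings assumed in this post: DG2 ALU count -> Turing card it is compared to.
PAIRINGS = {
    2048: "RTX 2060 Super",
    3072: "RTX 2080 Super",
    4096: "RTX 2080 Ti",
}


def relative_throughput(dg2_alus, turing_card):
    """ALU-count ratio, assuming per-ALU parity and equal clock speeds."""
    return dg2_alus / TURING_CUDA_CORES[turing_card]


if __name__ == "__main__":
    for alus, card in PAIRINGS.items():
        ratio = relative_throughput(alus, card)
        print(f"{alus} ALUs vs {card}: {ratio:.0%} of its ALU count")
```

On raw ALU count the 4096 ALU part sits a bit under the 2080 Ti, which is where the "slightly below" comes from, and the 3072 ALU part matches the 2080 Super exactly, so any gain there would have to come from clocks.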

So the performance might be there.

Rumors also say these GPUs will be cheap, because Intel wants to make a splashy entry into the market by flooding it with GPUs at great value.

P.S. I would not be surprised if we saw CPU+GPU+Mobo bundles from Intel.

That would make it possible to get GPUs at MSRP...