How? We're not talking about Atom here. Why would you agree with everything, btw? What will happen to a lot of the 6C parts? Many will go into gaming notebooks... Guess what? There they're largely useless, because you can't upgrade the GPU. It's nice for the OEMs who will sell you mismatched CPUs and GPUs, though. On top of that, I can't imagine many users needing a 6C with a GT2 for anything outside of games. A lot of people see "i7" and think "better", even though it's not relevant to their needs.

Upcoming games will have far better MT support thanks to DX12/Vulkan and will make good use of all available cores. That lowers CPU power consumption (more cores at lower frequency) and frees up TDP budget for the GPU. More GPU TDP -> more performance in the same form factor.
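To illustrate the "more cores at lower frequency" point, here's a rough sketch using the textbook dynamic power relation P = C·V²·f per active core. The voltage/frequency points are made up for illustration (not measured on any real 4C or 6C part). It also assumes the game scales perfectly across cores and ignores static/leakage power, both of which eat into the gain on real silicon.

```python
# Rough sketch of the "more cores at lower frequency" argument using the
# textbook dynamic power relation P = C * V^2 * f (per active core).
# Voltages and frequencies are hypothetical, not measurements of a real CPU.
# Assumes perfect multithreaded scaling and ignores static/leakage power.

def dynamic_power(cores: int, capacitance: float, volts: float, freq_ghz: float) -> float:
    """Switching power of `cores` identical, fully busy cores (arbitrary units)."""
    return cores * capacitance * volts ** 2 * freq_ghz

C = 1.0  # effective switched capacitance per core, arbitrary units

# Same aggregate clock throughput: 4 x 3.6 GHz = 6 x 2.4 GHz = 14.4 GHz.
# The lower frequency is assumed to allow a lower voltage (hypothetical V/f points).
four_fast = dynamic_power(4, C, volts=1.20, freq_ghz=3.6)
six_slow = dynamic_power(6, C, volts=0.95, freq_ghz=2.4)

print(f"4 cores @ 3.6 GHz, 1.20 V: {four_fast:.2f}")
print(f"6 cores @ 2.4 GHz, 0.95 V: {six_slow:.2f}")
```

Because voltage enters the formula squared, the saving from running each core at a lower V/f point can outweigh the cost of keeping extra cores busy. That saving is where the extra GPU headroom would come from, provided the voltage can actually drop with frequency.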

As for the GPU upgrade problem in notebooks, it's a weak argument: notebooks come with their own set of compromises, and they always have. Whether you think a gaming notebook is worth the investment or not is not the topic.

The only situation in which I would tend to agree with you is if Intel created a new price ceiling for the 6C CPUs while keeping the rest of the product stack at today's prices.

IMO Anandtech measured the wrong thing entirely in that article (or else failed to make the additional measurements needed to confirm their hypothesis). Simply put, it doesn't matter which power states the CPU is in or which cores are online; at a high level, all that matters is performance (and its consistency) and power consumption.

IT DOESN'T MATTER HOW MANY CORES ARE USED IF THE ADDITIONAL CORES FAIL TO PROVIDE ANY REAL (WORLD) GAIN.

AT never tested in any way, shape or form whether there were any advantages or disadvantages to running the tested tasks across more cores.

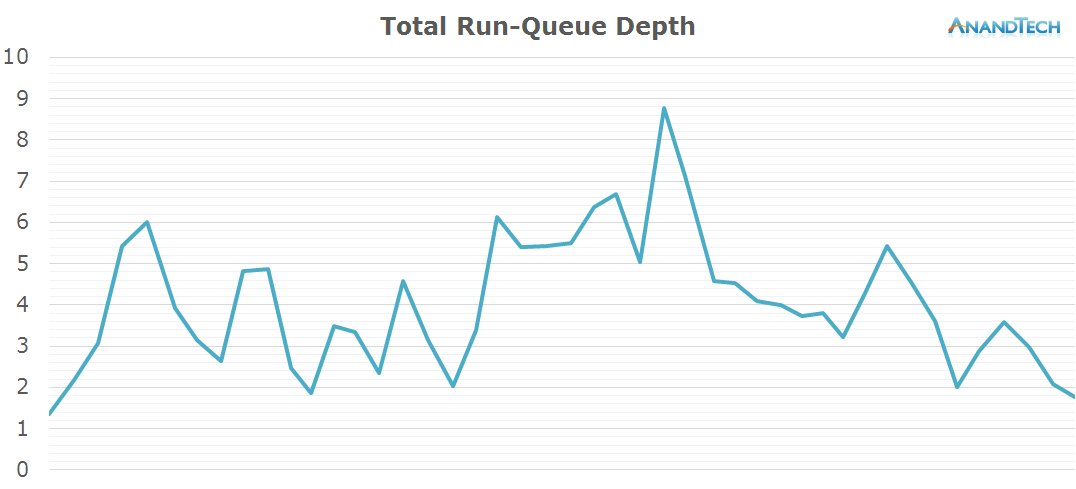

For the first part, Anandtech recorded and correlated the following data: power state distribution, frequency distribution, and run-queue depth, which directly represents the number of runnable threads in the system. Not only did Anandtech measure the right thing, they did so comprehensively, giving us a detailed picture of how the big.LITTLE SoC behaves under Android. Please take your time and read through the article; it really is worth it.
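For anyone unfamiliar with how run-queue depth data is usually read, here's a minimal sketch that turns periodic run-queue samples into a "fraction of time with at least N runnable threads" distribution. The sample values are invented for illustration (not the article's data), and the fixed sampling interval is an assumption. A sizeable share of time at depth 5 or more is what would indicate work for more than four cores.

```python
from collections import Counter

def runqueue_distribution(samples: list[int]) -> dict[int, float]:
    """For each depth N, the fraction of samples with at least N runnable threads.

    Assumes `samples` were taken at a fixed interval, so each sample
    represents an equal slice of time.
    """
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(samples)
    total = len(samples)
    return {
        n: sum(c for depth, c in counts.items() if depth >= n) / total
        for n in range(1, max(samples) + 1)
    }

# Hypothetical run-queue samples from a browsing session (not AnandTech's data).
samples = [1, 2, 2, 3, 4, 4, 5, 6, 2, 1]
dist = runqueue_distribution(samples)
for depth, share in dist.items():
    print(f">= {depth} runnable threads: {share:.0%} of the time")
```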

For the second part, it seems you're implying that optimised multithreaded software running on many cores needs proof of efficiency (perf, power, or both). No offense, but claiming that, in a scenario where the browser can use up to 6-8 threads, the additional cluster of power-optimised cores yields no further efficiency gains is a bit much. You're entitled to your opinion, though. Maybe we'll get the chance to compare results in a test with the little cores disabled, since that's the only way to keep the data consistent (same software, same CPU architecture and process).
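The comparison proposed here comes down to energy to completion (average power × time) for the same workload, with everything else held constant. A minimal sketch of that bookkeeping follows. The `Run` class and all numbers are hypothetical, since no measurements exist in this thread. It also shows why the faster configuration isn't automatically the more efficient one.

```python
from dataclasses import dataclass

@dataclass
class Run:
    """One run of the same fixed workload under a given core configuration."""
    label: str
    duration_s: float   # time to finish the workload
    avg_power_w: float  # average SoC power over the run

    @property
    def energy_j(self) -> float:
        # Energy to completion = average power x time
        return self.avg_power_w * self.duration_s

# Hypothetical numbers for illustration only; not measured on any device.
all_cores = Run("big + little", duration_s=10.0, avg_power_w=2.0)
big_only = Run("big only (little disabled)", duration_s=9.5, avg_power_w=2.4)

for run in (all_cores, big_only):
    print(f"{run.label}: {run.duration_s:.1f} s, {run.energy_j:.1f} J")

# Lower energy for the same work = more efficient configuration
better = min((all_cores, big_only), key=lambda r: r.energy_j)
print(f"More energy-efficient configuration: {better.label}")
```

With real data you'd want several runs per configuration and a look at the variance as well, since a single run says little about performance consistency.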