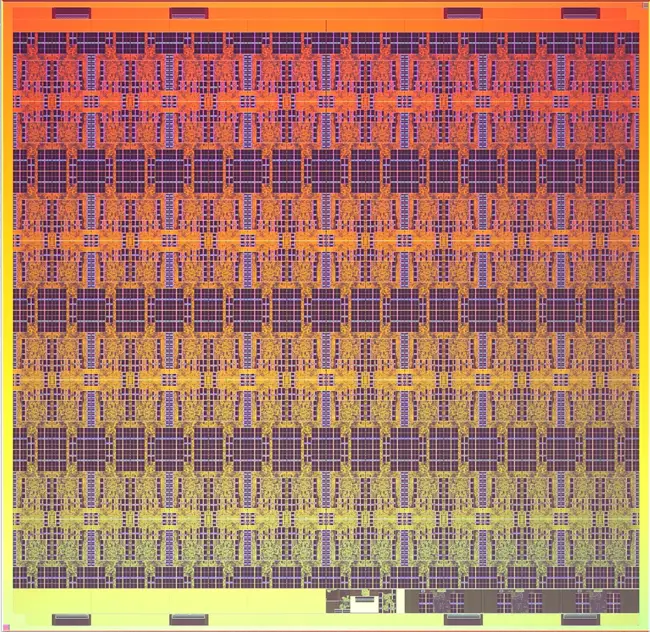

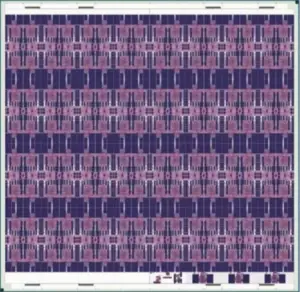

First, let's head over to WikiChip for a die shot, a floor plan, and some more info.

Now enter Intel's recent announcement: they have launched a Neuromorphic Research Community to advance the 'Loihi' test chip.

Intel claims that their version of "Hello World" is scanning and training Loihi to recognize 3D objects from multiple angles. They say this uses <1% of Loihi and tens of milliwatts, and only takes a few seconds.

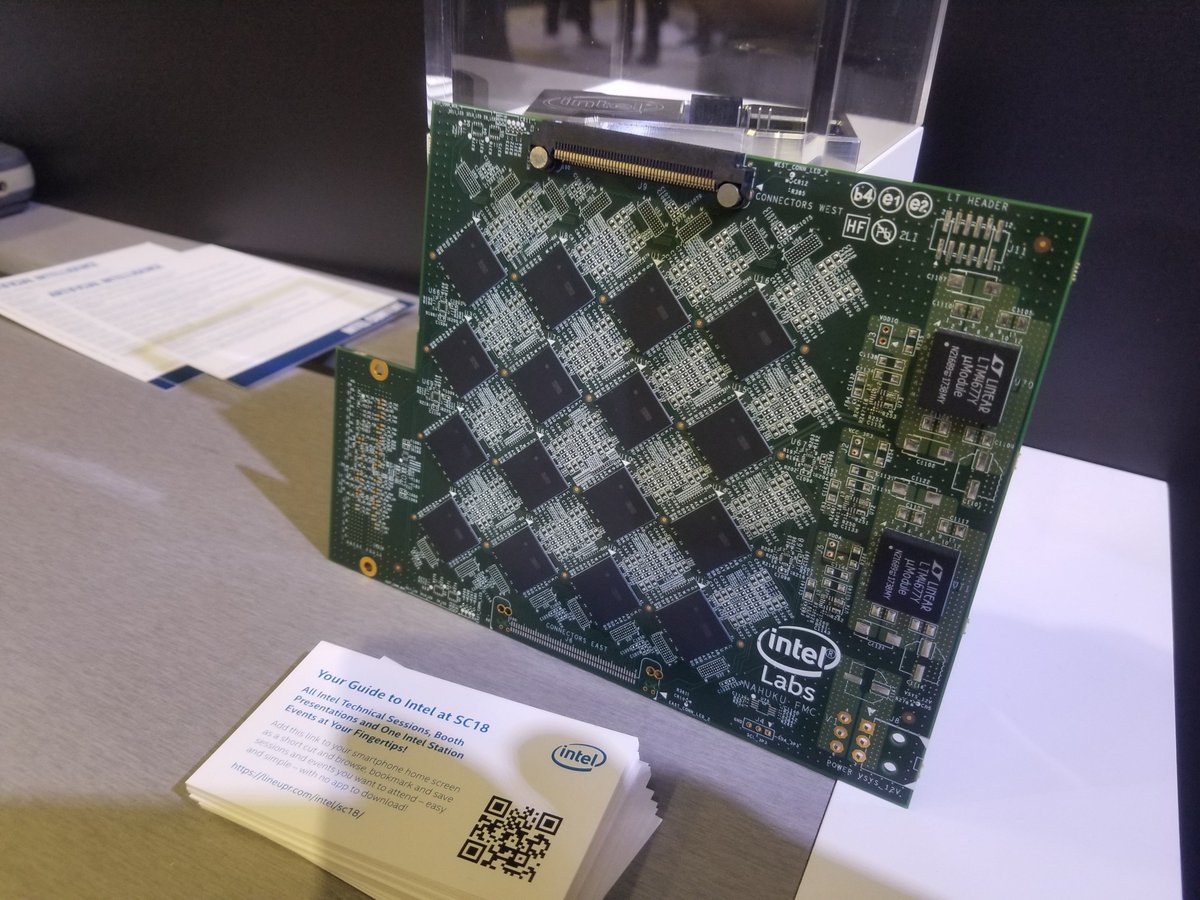

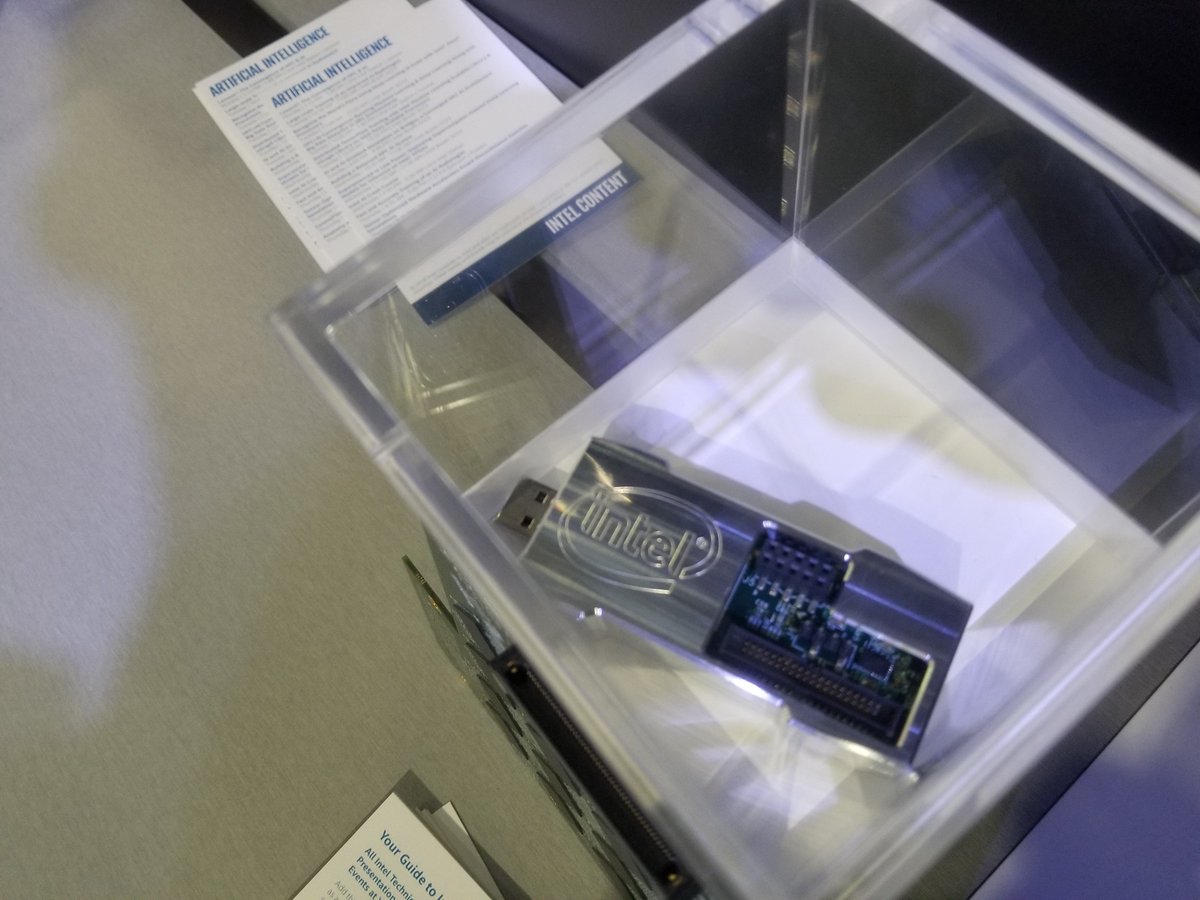

They have also sent out development boards to researchers who are working on a variety of applications, including sensing, motor control, information processing, and more. They also say software development is a key focus and that they are looking forward to demonstrating much larger-scale applications for Loihi.

Intel's 'Loihi' Neuromorphic Chip in the Lab - YouTube

In this video they show the Loihi chip learning an object (a bobblehead) in <5 seconds, after which it is able to recognize it from any side.

If you want more details on the Loihi architecture, Intel has published them over at IEEE.

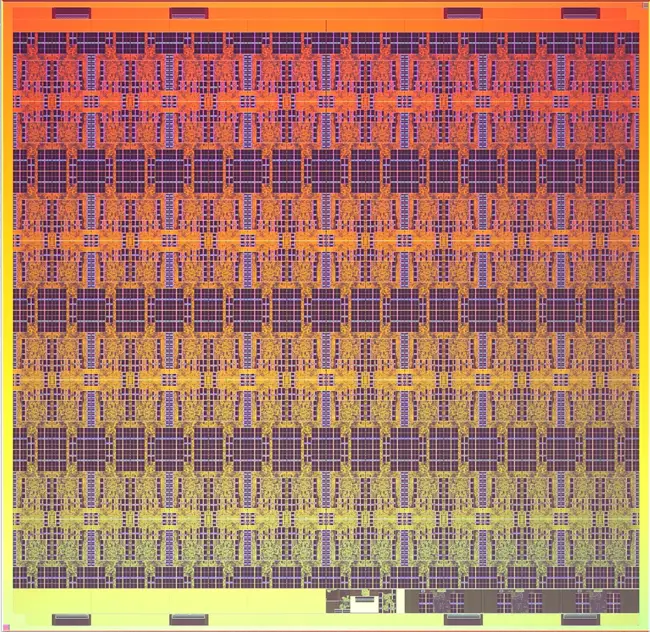

The die is a mere 60 mm² and has 2.07 billion transistors.

WikiChip said: Loihi is fabricated on Intel's 14 nm process and has a total of 130,000 artificial neurons and 130 million synapses. In addition to the 128 neuromorphic cores, there are 3 managing Lakemont cores.
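To put those numbers in perspective, here is a quick back-of-the-envelope calculation using only the figures quoted above. The variable and function names are my own, and the per-core values are plain averages (they assume resources are spread evenly over the 128 neuromorphic cores and ignore the area taken by the Lakemont cores), so treat them as rough estimates rather than official specs.

```python
# Figures quoted in the post (die size, transistor count, neurons, synapses, cores).
DIE_AREA_MM2 = 60
TRANSISTORS = 2_070_000_000
NEURONS = 130_000
SYNAPSES = 130_000_000
NEUROMORPHIC_CORES = 128


def per_core(total: int, cores: int = NEUROMORPHIC_CORES) -> float:
    """Average share of a chip-wide total per neuromorphic core (assumes an even split)."""
    return total / cores


# Whole-die average; this includes the Lakemont cores and any other non-neuromorphic area.
density_per_mm2 = TRANSISTORS / DIE_AREA_MM2
neurons_per_core = per_core(NEURONS)
synapses_per_core = per_core(SYNAPSES)
synapses_per_neuron = SYNAPSES / NEURONS

print(f"Transistor density:  {density_per_mm2 / 1e6:.1f} M per mm^2")
print(f"Neurons per core:    {neurons_per_core:.0f}")
print(f"Synapses per core:   {synapses_per_core:,.0f}")
print(f"Synapses per neuron: {synapses_per_neuron:.0f}")
```

The roughly 1,000 synapses per neuron that falls out of this lines up with the claim that each neuron can talk to thousands of others.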

WikiChip said: The chip itself implements a fully asynchronous many-core mesh of 128 neuromorphic cores. The network supports a wide variety of artificial neural networks such as RNNs, SNNs, sparse, hierarchical, and various other topologies, where each neuron in the chip is capable of communicating with thousands of other neurons.

There are 128 neuromorphic cores, each containing a "learning engine" that can be programmed to adapt the network parameters during operation, such as the spike timings and their impact. This makes the chip more flexible, as it allows various paradigms such as supervised/unsupervised and reinforcement learning without requiring any particular approach. The choice of higher flexibility is intentional, in order to defer architectural decisions that could be detrimental to research.
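For anyone wondering what "adapting to spike timings" can look like in practice, below is a minimal sketch of pair-based spike-timing-dependent plasticity (STDP), a textbook learning rule for spiking networks. To be clear, this is not Intel's API or Loihi's actual rule (the learning engine is programmable, and the post doesn't spell out any specific rule). The function names, amplitudes, and time constants are all hypothetical values picked for illustration.

```python
import math


def stdp_delta_w(dt_ms: float,
                 a_plus: float = 0.01,
                 a_minus: float = 0.012,
                 tau_plus_ms: float = 20.0,
                 tau_minus_ms: float = 20.0) -> float:
    """Weight change for one pre/post spike pair, where dt_ms = t_post - t_pre.

    All constants are illustrative assumptions, not Loihi parameters.
    """
    if dt_ms > 0:
        # Pre fired before post: strengthen the synapse (potentiation).
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        # Post fired before pre: weaken the synapse (depression).
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(weight: float,
               pre_spikes_ms: list[float],
               post_spikes_ms: list[float],
               w_min: float = 0.0,
               w_max: float = 1.0) -> float:
    """Sum the pairwise updates over all spike pairs, then clamp the weight."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta_w(t_post - t_pre)
    return min(w_max, max(w_min, weight))


# Pre-synaptic spike 5 ms before the post-synaptic spike: weight goes up.
print(apply_stdp(0.5, pre_spikes_ms=[10.0], post_spikes_ms=[15.0]))
# Reverse order: weight goes down.
print(apply_stdp(0.5, pre_spikes_ms=[15.0], post_spikes_ms=[10.0]))
```

The point is just that the update depends only on locally available spike times, which is the kind of rule a per-core learning engine could evaluate on the fly while the network is running.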

