To be honest with you guys, I hadn't been paying much attention to Intel's GPU efforts until I heard the rumors about how these GPUs will be priced.

Midrange for $300? I'm up for that!

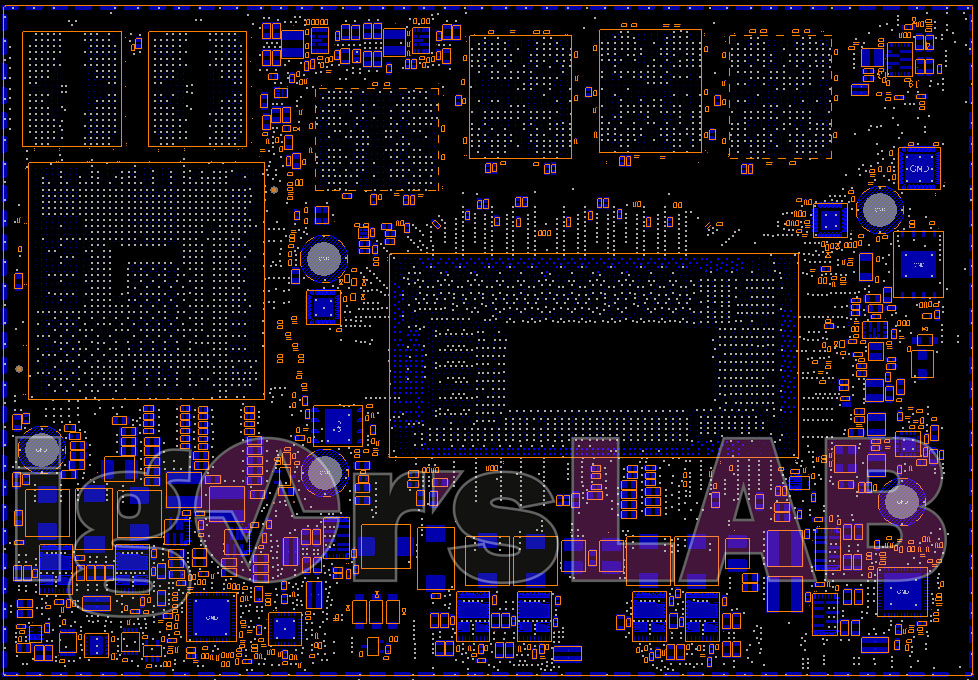

Also, it's interesting to see that a 4096 ALU GPU with 256-bit GDDR6 VRAM, clocked at around 2000 MHz, is going to perform around the level of the RX 6800/RTX 3070 Ti, GPUs which should have similar ALU counts.

If that holds and filters down the product stack, the 384 EU/192-bit chip might perform around the level of the RX 6700 XT. If the rumors are correct, this GPU might cost $300, which would be logical since it's supposed to have a die size of roughly 190 mm² (according to VCZ calculations).
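
For what it's worth, here is the back-of-the-envelope math behind that scaling guess, as a quick Python sketch. The 8 ALUs per EU figure is my assumption (it's what would make the 4096 ALU chip a 512 EU part), and treating performance as scaling linearly with ALU count and bus width at the same clocks is a naive first-order guess, not a prediction.

```python
# Assumption (not from the rumors themselves): 8 ALUs per EU,
# which would make the 4096 ALU chip a 512 EU part.
ALUS_PER_EU = 8

big_alus = 4096        # rumored top chip
big_bus_bits = 256     # rumored 256-bit GDDR6 bus

small_eus = 384        # rumored smaller chip
small_bus_bits = 192   # rumored 192-bit bus

small_alus = small_eus * ALUS_PER_EU

# Naive ratios: how much of the big chip's compute and bus width
# the smaller chip would keep, assuming identical clocks and memory speed.
alu_ratio = small_alus / big_alus
bus_ratio = small_bus_bits / big_bus_bits

print(f"Smaller chip ALUs: {small_alus}")
print(f"ALU ratio vs. big chip: {alu_ratio:.2f}")
print(f"Bus width ratio vs. big chip: {bus_ratio:.2f}")
```

Both ratios land on the same fraction of the big chip, so the cut-down part looks balanced on paper. Whether that actually translates to RX 6700 XT territory depends on clocks, drivers, and everything else the rumors don't cover.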

If Intel is able to deliver enough GPUs to the desktop DIY market, I might pull the trigger, just out of curiosity.