ajc9988

Senior member

- Apr 1, 2015


It is a compound. AMD Zen was 7% behind Coffee Lake in IPC, putting it somewhere around Haswell and Broadwell. This shows AMD only gained about 3% or so in IPC with Zen+:

Source?

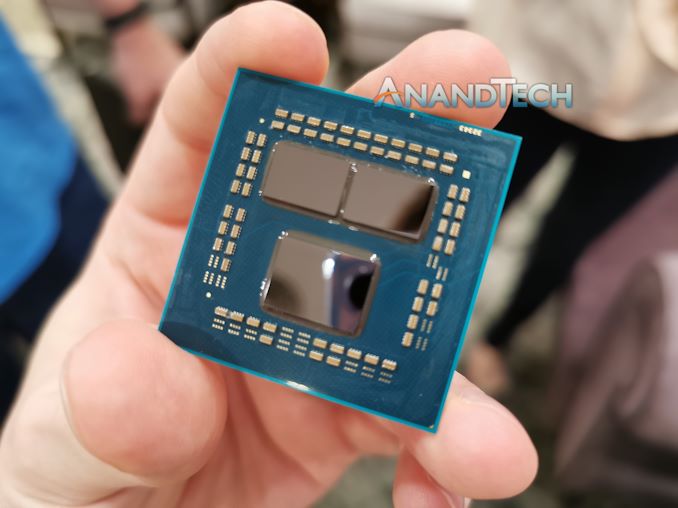

The AMD 2nd Gen Ryzen Deep Dive: The 2700X, 2700, 2600X, and 2600 Tested
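One reading of "it is a compound" is that IPC changes combine multiplicatively, not additively. The short Python sketch below works through that arithmetic with the two figures from the post. It assumes "7% behind" means Zen IPC = 0.93 x Coffee Lake IPC and that "about 3%" means Zen+ IPC = 1.03 x Zen IPC. The helper name `relative_ipc` is hypothetical and only serves as an illustration.

```python
# Hypothetical illustration of compounding IPC changes.
# Assumptions (labeled): "7% behind" -> Zen = 0.93 x Coffee Lake IPC;
# "about 3%" -> Zen+ = 1.03 x Zen IPC. Figures taken from the post.

def relative_ipc(base: float, *changes: float) -> float:
    """Apply successive fractional IPC changes multiplicatively."""
    result = base
    for change in changes:
        result *= 1.0 + change
    return result

coffee_lake = 1.0                          # normalize Coffee Lake IPC to 1.0
zen = relative_ipc(coffee_lake, -0.07)     # Zen relative to Coffee Lake
zen_plus = relative_ipc(zen, 0.03)         # Zen+ builds on Zen
gap = 1.0 - zen_plus / coffee_lake         # remaining deficit vs. Coffee Lake

print(f"Zen:  {zen:.4f}")
print(f"Zen+: {zen_plus:.4f}")
print(f"Remaining gap to Coffee Lake: {gap:.1%}")
```

Under these assumptions Zen+ lands at roughly 0.958 of Coffee Lake's IPC, a gap of about 4.2%. Simply subtracting 3 from 7 would give 4%.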

At some point, I will tell you to do your own darn research, especially since I even dug up the old Intel roadmaps another member was referencing.

If you are too lazy to read AnandTech's articles, yet post in their forum to question someone citing their work, there is something wrong.