"The L2 cache’s large capacity and high associativity makes destructive interference between the two threads less likely." <- BULLDOZER: AN APPROACH TO MULTITHREADED COMPUTE PERFORMANCE

"For "Bulldozer" AMD implemented a write-thru L1D cache and focused on improving pre-fetch algorithms and increasing L1D cache bandwidth on popular small block transfers. For those less frequent, larger block transfers we rely upon the efficiencies built into our large 16-way 2MB L2.

Based on the average workloads today, we see this new design - despite the smaller L1 cache - as a more efficient way to process data." Mike Butler, Senior Fellow Design Engineer, AMD / HardOCP Readers Ask AMD Bulldozer Questions

¯\_(ツ)_/¯

The large L2 seems to stem more from the small L1 caches: with a smaller L2 (size not disclosed), they would have thrashed it more aggressively. I guess the same logic applies to SMT, since high associativity and high capacity are preferred in server workloads.

Skylake => 256 KiB/core - 4-way set associative - 12-cycle latency

Skylake-SP => 1 MiB/core - 16-way set associative - 14-cycle latency

Technically, that should somehow give Skylake-SP more effective multi-threading per core.
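A rough way to see why the Skylake-SP L2 should be friendlier to two threads: work out the number of sets from the figures above. This is only a sketch, and it assumes a 64-byte cache line (not stated in this post); `cache_sets` is a helper name made up for illustration.

```python
KIB = 1024

def cache_sets(capacity_bytes: int, ways: int, line_bytes: int = 64) -> int:
    """Number of sets = capacity / (ways * line size).

    line_bytes=64 is an assumption, not a figure from the post.
    """
    sets, remainder = divmod(capacity_bytes, ways * line_bytes)
    if remainder:
        raise ValueError("capacity is not a whole number of sets")
    return sets

# L2 figures quoted above
skylake_sets = cache_sets(256 * KIB, ways=4)        # Skylake client
skylake_sp_sets = cache_sets(1024 * KIB, ways=16)   # Skylake-SP

print(skylake_sets, skylake_sp_sets)
```

Under that assumption both come out to the same number of sets, so the extra capacity on Skylake-SP is all extra ways per set. Two SMT threads that map to the same set get 16 ways to share instead of 4, which is where the "less destructive interference" argument would come from.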

I have identified three different Willowcove implementations: a server WLC (WillowcoveX), a client WLC (Willowcove), and a mobility WLC (Willowcove_M). Just because TGL doesn't have a large L2 doesn't mean SPR won't.

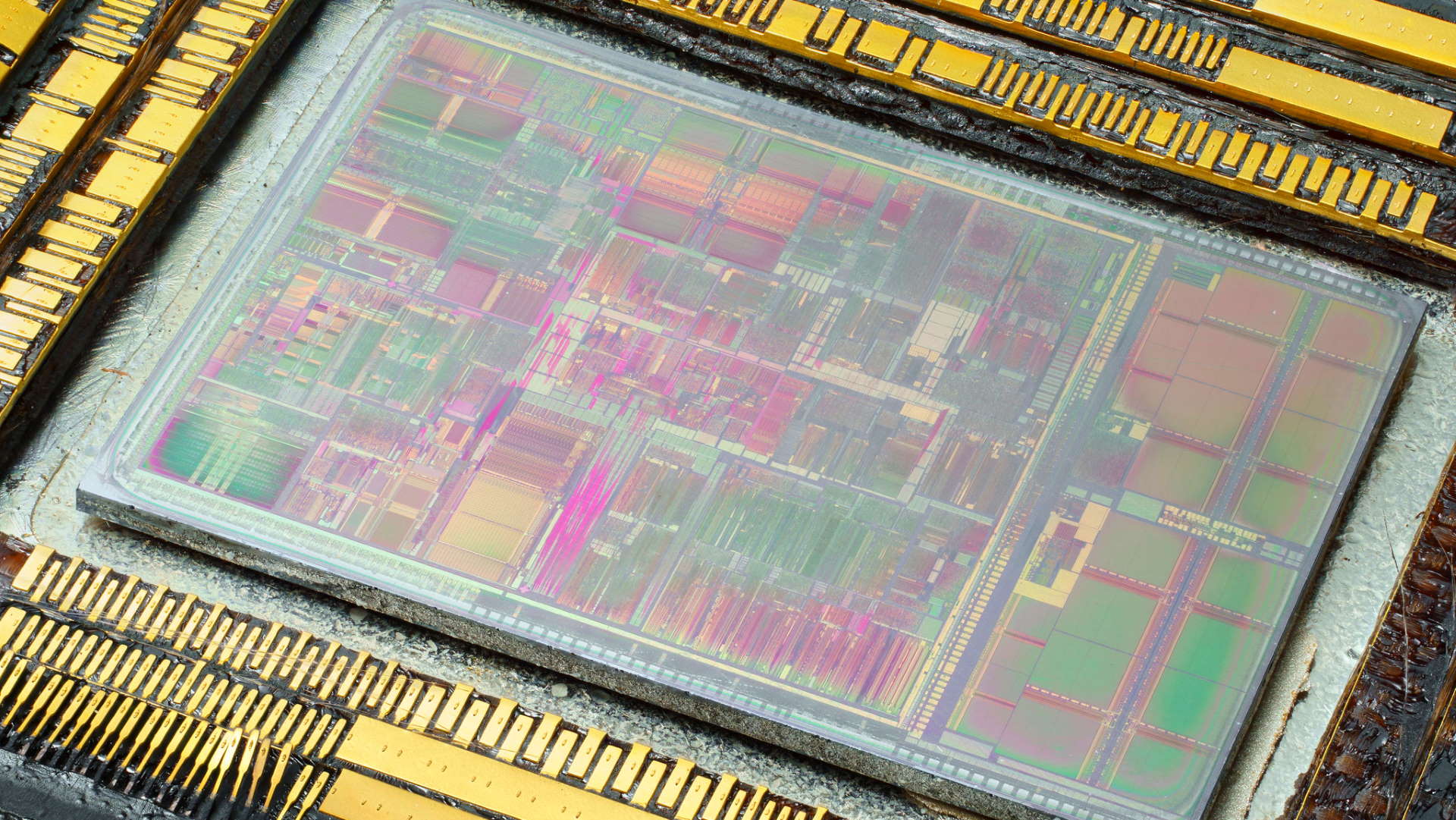

View attachment 11229

^-- Bulldozer had an option for a 1 MB 16-way L2 with 18-cycle latency. But is a 2-cycle latency saving worth the extra cache misses?
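One way to put a number on that question is a break-even estimate from the usual average-access-time model (hit latency + miss rate x miss penalty). A minimal sketch: the 2-cycle gap is from above, but the 100-cycle miss penalty is a made-up placeholder, not a Bulldozer measurement, and `break_even_miss_delta` is a hypothetical helper.

```python
def break_even_miss_delta(latency_gap_cycles: float,
                          miss_penalty_cycles: float) -> float:
    """Extra L2 miss rate (as a fraction) at which a faster, smaller
    cache stops paying off.

    The smaller cache saves latency_gap_cycles on every L2 access but
    pays miss_penalty_cycles on each additional miss, so it breaks even
    when  extra_miss_rate * miss_penalty == latency_gap.
    """
    if miss_penalty_cycles <= 0:
        raise ValueError("miss penalty must be positive")
    return latency_gap_cycles / miss_penalty_cycles

# 2-cycle gap from the post; 100-cycle penalty is an ASSUMED value.
delta = break_even_miss_delta(latency_gap_cycles=2, miss_penalty_cycles=100)
print(f"break-even extra miss rate: {delta:.1%}")
```

With those placeholder numbers, the smaller cache only wins if halving the capacity raises the L2 miss rate by less than about two percentage points. The longer the trip to L3 or memory, the smaller that margin gets.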