- Oct 30, 2015

New here and I'm quite interested in how CPUs work!

So, how exactly does a CPU work?

I know it has the control unit and the ALU.

I also know it uses different types of registers. For example, the storage register holds the result of an arithmetic or logical operation (correct me if I'm wrong), and a general register does whatever the control unit tells it to. But there's obviously a lot more I don't know.
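To make that mental model concrete, here is a rough Python sketch of how I picture the pieces fitting together: a control unit that fetches and decodes instructions, an ALU that does the actual math, and a few registers holding values. The opcodes (`LOAD`, `ADD`, `SUB`, `AND`, `HALT`) and the register names (`r0` to `r2`) are made up for illustration and don't correspond to any real instruction set, and a real CPU does all of this in hardware, so treat it as a toy under those assumptions.

```python
# Toy model of a CPU: control unit + ALU + registers.
# Opcodes and register names are invented for illustration only.

def alu(op, a, b):
    """Arithmetic/logic unit: pure computation, no knowledge of the program."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    if op == "AND":
        return a & b
    raise ValueError(f"unknown ALU op: {op}")

def run(program):
    """Control unit: fetch, decode, and execute until HALT."""
    regs = {"r0": 0, "r1": 0, "r2": 0}   # general-purpose registers
    pc = 0                               # program counter
    while True:
        instr = program[pc]              # fetch
        opcode = instr[0]                # decode
        pc += 1
        if opcode == "HALT":
            return regs
        if opcode == "LOAD":             # LOAD dst, constant
            _, dst, value = instr
            regs[dst] = value
        else:                            # ALU op: OP dst, src1, src2
            _, dst, a, b = instr
            regs[dst] = alu(opcode, regs[a], regs[b])

program = [
    ("LOAD", "r0", 5),
    ("LOAD", "r1", 7),
    ("ADD", "r2", "r0", "r1"),           # r2 = r0 + r1
    ("HALT",),
]
result = run(program)
print(result["r2"])  # prints 12
```

In this sketch the control unit only decides what happens next and where results go; the ALU never sees the program, just two operands and an operation.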

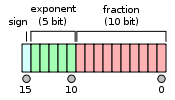

What is a floating-point unit (FPU), exactly? I know that AMD's Bulldozer shared one FPU between two cores, which proved to be a pretty big bottleneck. Why?

If the core "modules" posed such a performance issue for AMD, why couldn't they use a software implementation to "merge" two physical cores into one, like the 2006 rumour about AMD and reverse hyperthreading? Is that possible? If not, why not?

Does every core get a control unit? Or is it more like there's one control unit for one CPU and then each core has its own ALU? If each core gets a control unit, wouldn't that introduce overhead?

What exactly is an instruction set? I see talk of Intel implementing new instruction sets in their CPUs. How does that impact performance? Do new instruction sets mean that the CPUs process the raw data they're given in a better, more efficient manner?

What is branch prediction? Why is a deep pipeline (correct me if this is the wrong terminology) a bad thing if the branch prediction algorithms aren't good?

Sorry for all these questions (I have more, lol), but YouTube was no help and I think I want to be a CPU architect one day.
