Well, THAT was disappointing.

I had the chance to work on an older slim PC: a Gateway SX-something (285x?) with a Sandy Bridge Pentium G630 (?) and 2x4GB DDR3.

The previous config that I sold it in had a PNY GT430 128-bit DDR3 card with LP brackets.

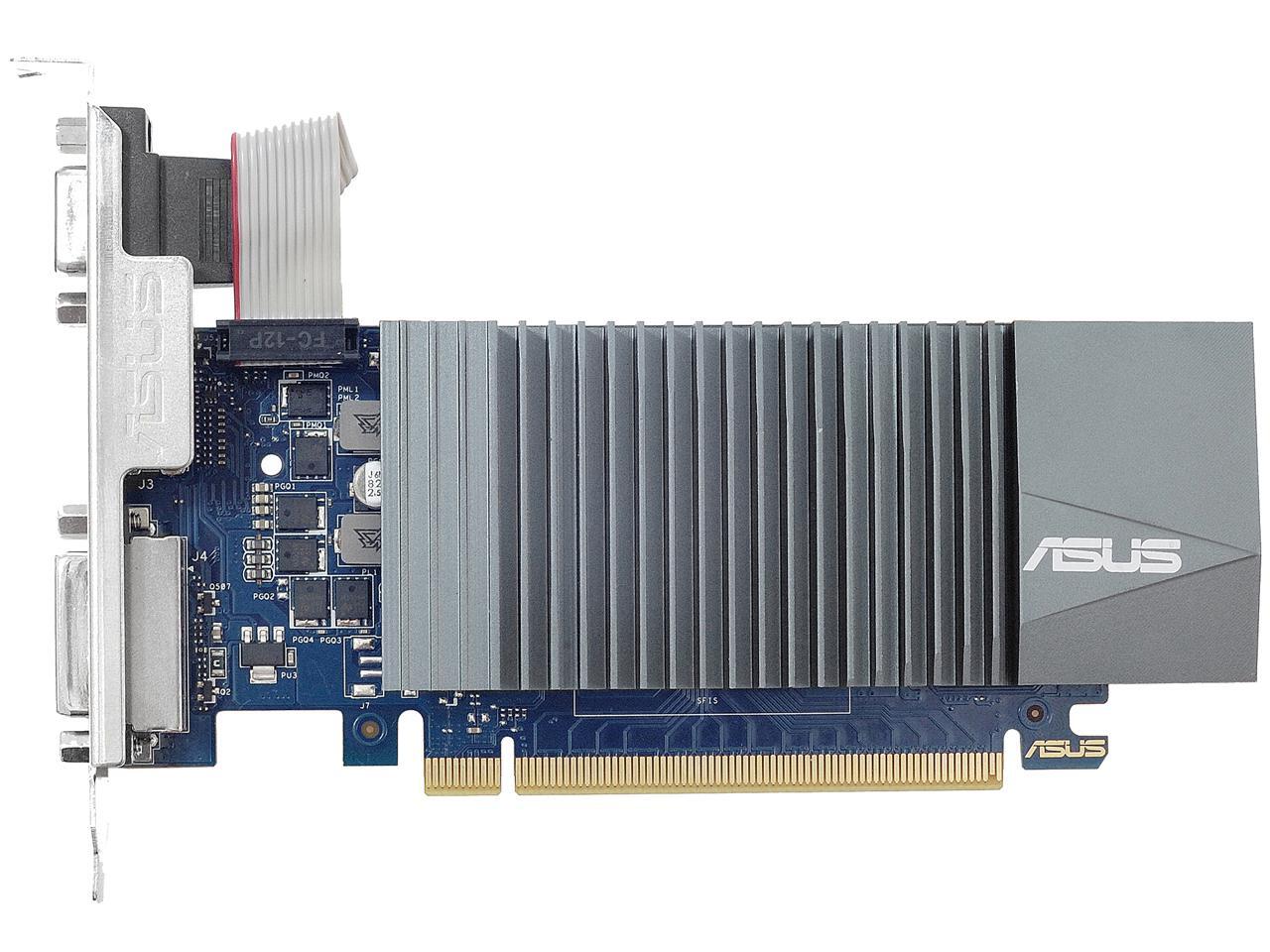

I swapped out the HDD for a 480GB Acer SATA SSD, then removed the PNY GT430, put the LP bracket(s) onto the GDDR5 version of the GT710, and slotted it in.

I can't get any video output?!? I tried the HDMI: no BIOS screen. I tried the DVI-D with a DVI-to-HDMI cable, and a 7" mini-LCD with HDMI inputs that I previously had working with my server PC (with onboard Intel DVI-D output). Nothing!

I tried removing the VGA header. Nothing.

Not sure what's going on here. The card seems to initialize, and the PC makes the proper boot beeps. I can hit the keys to enter BIOS, hit ESC and ENTER, and it beeps not too long after that as it reboots out of the BIOS screen. But nothing is displayed on the monitor.

I have yet to try the card in a more modern PC. I don't know if the card's outputs are defective, or what. (Or if the +5V line on the PSU in the slim desktop isn't working? Surely, it wouldn't boot if that were the case?)

Is there a chance that "modern" low-end NVidia-chipset cards are no longer compatible with "Legacy" mainboards? This is frustrating. I don't want to put the PNY card back in, as it's Fermi, unsupported by current drivers, and I don't know how much life its fan has left. (These parts are all fairly old.)

The Kepler GT710 GDDR5 card is passively cooled, so it would have lasted basically forever, and the SSD should last a long time, which basically leaves the PSU and PSU fan as the most likely parts to fail in time.

The other possibility is that it's not making full PCI-E slot contact somehow, but it seems like it's plugged in OK. I took it out, adjusted the rear bracket, slid it up as far as I could, and tightened it back down, so that the card could seat as far into the socket as possible. I don't see any exposed gold fingers or any "tilt" to the card when it's plugged in.

I also can't get a display out of the onboard, when the card is plugged in. So it does seem to be detecting it. Just no visual output. (I do get a display and POST with the onboard, without the GT710 plugged in. Also, I believe, from the beeps and keyboard, that it is indeed POSTing with it plugged in as well, but just no video output.)

Could this be a PCI-E slot power spec issue? I know that PCI-E x16 is supposed to provide 75W, but in this PC, it may only be 25W. Or I might be incorrect about that; otherwise, how would a GT430 function on 25W?
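
To sanity-check the power theory, here is a rough back-of-the-envelope sketch. The TDP figures (49W for the GT430, 19W for the GT710) are assumptions taken from the published spec numbers as I remember them, not anything I measured, and the 25W slot limit is only my guess about this PC. The names `CARD_TDP_W`, `SLOT_BUDGET_W`, `fits` and `report` are made up for the illustration.

```python
# Nominal board power in watts. ASSUMED from published spec sheets, not measured.
CARD_TDP_W = {"GT430": 49, "GT710": 19}

# Slot power budgets in watts. 75 W is the usual x16 graphics figure;
# the 25 W entry is only a guess about what this slim PC might provide.
SLOT_BUDGET_W = {"75W slot": 75, "25W slot (guess)": 25}


def fits(card: str, slot: str) -> bool:
    """Return True if the card's nominal TDP is within the slot budget."""
    return CARD_TDP_W[card] <= SLOT_BUDGET_W[slot]


def report() -> list[str]:
    """Build one line per (slot, card) pair describing whether it fits."""
    lines = []
    for slot, budget in SLOT_BUDGET_W.items():
        for card, tdp in CARD_TDP_W.items():
            verdict = "within" if fits(card, slot) else "over"
            lines.append(f"{card} ({tdp} W) is {verdict} the {slot} budget ({budget} W)")
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```

If those numbers are roughly right, the GT710 should draw less than the GT430 that already worked in this slot, so a slot power limit looks like an unlikely explanation by itself.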