- May 4, 2000


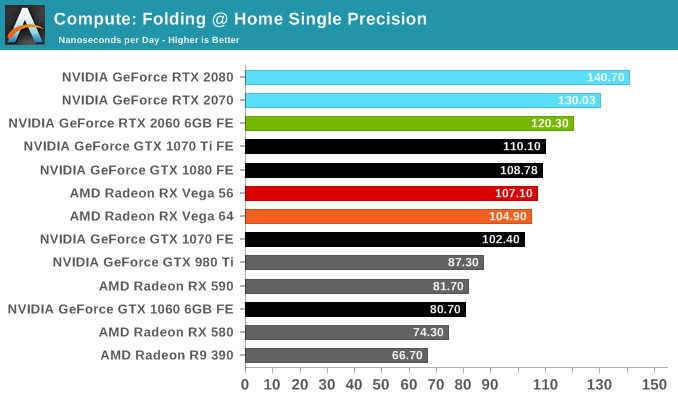

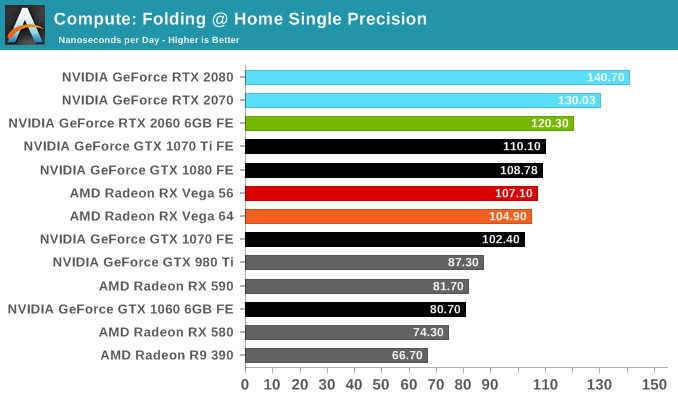

This looks like the best price/performance card for Folding ($349 Founders Edition):

https://www.anandtech.com/show/13762/nvidia-geforce-rtx-2060-founders-edition-6gb-review/13

(BTW, not a big fan of the auto-loading videos on every single page now, like at Tom's.)