thesmokingman

Platinum Member

- May 6, 2010


Doesn't NV actually have poor support for CR, so it's more of a political face-saver? The spec is best supported by Intel.


•Tier 1 enforces a maximum 1/2 pixel uncertainty region and does not support post-snap degenerates. This is good for tiled rendering, a texture atlas, light map generation and sub-pixel shadow maps.


•Tier 2 reduces the maximum uncertainty region to 1/256 and requires post-snap degenerates not be culled. This tier is helpful for CPU-based algorithm acceleration (such as voxelization).

•Tier 3 maintains a maximum 1/256 uncertainty region and adds support for inner input coverage. Inner input coverage adds the new value SV_InnerCoverage to High Level Shading Language (HLSL). This is a 32-bit scalar integer that can be specified on input to a pixel shader, and represents the underestimated Conservative Rasterization information (that is, whether a pixel is guaranteed-to-be-fully covered). This tier is helpful for occlusion culling.

Is that why the Tomb Raider devs messed up their release?
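For anyone unsure what "overestimated" and "underestimated" coverage in the tier descriptions above actually mean, here is a minimal CPU-side sketch in Python. It is a toy, not how any GPU or the D3D12 runtime implements it: it ignores vertex snapping and the uncertainty region entirely, skips the top-left fill rule, and the function names (`edge_terms`, `coverage`) are made up for illustration. The "inner" set is only loosely analogous to what SV_InnerCoverage reports.

```python
def edge_terms(a, b, cx, cy):
    """Edge function at the pixel centre, plus its largest change across a 1x1 pixel."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    value = dx * (cy - a[1]) - dy * (cx - a[0])  # >= 0 on the inside for CCW triangles
    reach = 0.5 * (abs(dx) + abs(dy))            # max change from centre to any corner
    return value, reach


def coverage(tri, width, height):
    """Return (standard, overestimated, inner) sets of pixel coordinates.

    tri is a counter-clockwise triangle given as three (x, y) tuples.
    """
    xs = [p[0] for p in tri]
    ys = [p[1] for p in tri]
    standard, over, inner = set(), set(), set()
    for j in range(height):
        for i in range(width):
            cx, cy = i + 0.5, j + 0.5
            terms = [edge_terms(tri[k], tri[(k + 1) % 3], cx, cy) for k in range(3)]
            # Standard rasterization: only the pixel centre is tested.
            if all(v >= 0 for v, _ in terms):
                standard.add((i, j))
            # Overestimate: the pixel square touches the triangle at all
            # (edge tests pushed outward, plus a bounding-box overlap check).
            touches_bbox = (i <= max(xs) and i + 1 >= min(xs)
                            and j <= max(ys) and j + 1 >= min(ys))
            if touches_bbox and all(v + r >= 0 for v, r in terms):
                over.add((i, j))
            # Underestimate: every corner of the pixel is inside the triangle.
            if all(v - r >= 0 for v, r in terms):
                inner.add((i, j))
    return standard, over, inner


tri = [(0.25, 0.25), (7.25, 0.25), (0.25, 7.25)]
standard, over, inner = coverage(tri, 8, 8)
print(len(standard), len(over), len(inner))  # 28 36 10
```

The inner set is always a subset of the standard set, which is always a subset of the overestimated set. That ordering is the whole point: overestimation never misses a touched pixel, underestimation never claims a partially covered one.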

Aren't these the same people who did Mantle? Surely they could do DX12 too.

DX12 is a mess right now. You can't use it with very high textures unless you have a 6GB card (you can, but with crap performance and stuttering). So why so much spam here?

DX11 is way better, and you can actually use very high textures even on a GTX 970 (if you have Win10 and 16GB of fast DDR4 RAM).

Even a GTX 670 2GB can manage very high textures under DX11.

Just don't use DX12 and be happy.

If you mean computerbase's bench, those are reference cards, both running at around 1000 ~ 1050 MHz, while pcgameshardware's bench shows boost clocks for an Asus GTX 980 Ti. All of the GTX 980 Ti cards there are overclocked versions, and they're still faster than the R9 390 by around 15 ~ 30% depending on card frequency. But it's AMD's title, and you don't see them lose to DX11: all cards (except some) in Hitman get more fps than under DX11.

So is it fine that Ti outperforms 390 by only 15%?

It all depends on your perspective.

If you think that the 980Ti is and always will be the top card of this generation, then no.

If you have a 980Ti, no.

If you have a 390 or another Hawaii card, then yes.

If you have an AMD card and are counting on it to keep improving and beating its original competitors, then yes.

Hawaii just keeps on trucking, getting better against each generation that NV puts up against it. If Pascal wasn't on a node shrink, somehow it wouldn't surprise me to see Hawaii challenge the 1070 or 1080. It's the little chip that doesn't know how to quit.


Look at this :

http://tpucdn.com/reviews/ASUS/GTX_980_Ti_Matrix/images/perfrel_1920_1080.png

GTX 980Ti Reference = 86%

R9 390 stock = 62%

So if the GTX 980 Ti is 100%, then the R9 390 is at 72.09% (100 * 62 / 86). This is DX11. Now add DX12: you get a massive boost from removing API overhead, plus 5% or more on the R9 390 from optimizing the game engine's source code. We know the GTX 980 Ti doesn't benefit as much from DX12: if its efficiency is already 90%, DX12 can't push it much closer to 100%, and there is little room left for optimizing the source code to get full utilization, while GCN's efficiency is around 70% or less. Having 96 ROPs doesn't mean it should be godlike.
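To put rough numbers on the headroom argument: if a card is already at some utilization under DX11, the most it can gain from reaching 100% is a simple ratio. A quick sketch, where the 90% and 70% figures are just the ballpark guesses from the post, not measurements, and `max_gain_percent` is a made-up helper name:

```python
def max_gain_percent(utilization):
    """Upper bound on the speedup (in %) from going from `utilization` to 100%."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return (1 / utilization - 1) * 100


# Ballpark utilization guesses from the post, not measured values.
print(round(max_gain_percent(0.90), 1))  # 11.1
print(round(max_gain_percent(0.70), 1))  # 42.9
```

So under those assumed figures, the ceiling for the better-utilized card is about 11%, versus about 43% for the one sitting at 70%. That is a best case for both; real gains depend on how the game actually uses the API.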

Just look at Tomb Raider: despite having 8GB, why is the Radeon R9 390 slower than the GTX 970?

Again, look at Techpowerup's image. For resolution 1920x1080:

R9 390 = 62%

GTX 970 = 64%

So if the R9 390 is 100%, then the GTX 970 is 3% faster (64 * 100 / 62 ≈ 103) than the Radeon. But in Tomb Raider it's much, much worse than that: even if you disable GameWorks, it's still slower than the GTX 970. Isn't DX12 supposed to remove API overhead? If yes, then what happened here?
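Both rebasing steps in this post are the same arithmetic: divide each chart score by the card you want to treat as 100%. A small sketch using only the three Techpowerup percentages quoted in the post (the `rebase` helper and the dictionary keys are made up for illustration):

```python
def rebase(scores, baseline):
    """Re-express relative scores so that `baseline` becomes 100%."""
    return {name: value * 100 / scores[baseline] for name, value in scores.items()}


# Relative performance at 1920x1080 as quoted from the Techpowerup chart.
tpu_1080p = {"GTX 980 Ti": 86, "R9 390": 62, "GTX 970": 64}

vs_980ti = rebase(tpu_1080p, "GTX 980 Ti")
vs_390 = rebase(tpu_1080p, "R9 390")

print(round(vs_980ti["R9 390"], 2))      # 72.09
print(round(vs_390["GTX 970"] - 100, 1)) # 3.2
```

Note that "X is N% slower than Y" and "Y is N% faster than X" are not the same N: the 390 at 72.09% of a 980 Ti means the 980 Ti is about 39% faster, not 28%.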

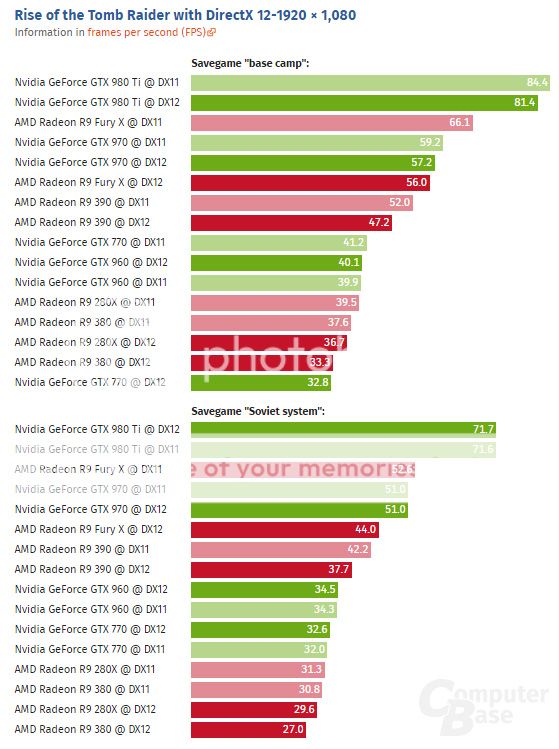

Is it really normal to you that in the DX12 bench, the GTX 970 with 4GB is 21% faster than the Radeon R9 390 8GB? I'd agree for DX11; everyone knows AMD GCN doesn't get good utilization from the DX11 API.

See the chart

http://forums.anandtech.com/showthread.php?t=2466535

980Ti @ DX 11 72.5

390X @ DX 11 68.6

According to the TPU chart you linked a 980Ti should be 28% faster than 390X in 1080P.


I personally like extra features that differentiate the PC version from a mere console port running at higher-res with better textures. Having only used Radeon graphics cards over the years I missed some of that. Bring on GameWorks. Fanboys should be asking AMD to come up with a similar solution (open or not) instead of bashing it.

NV's implementation hurts everything. But it hurts AMD GPUs more, so they'll cut their own fingers off if it makes AMD lose its arm.

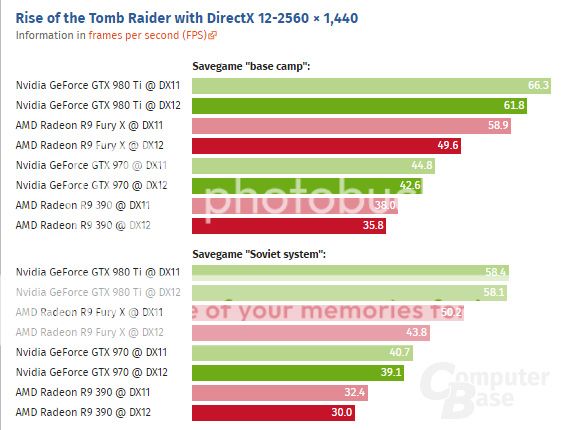

This shows how the CPU bottleneck is alleviated with DX12. Running at 1440P I get more fps with DX11 over DX12 but then switching resolution to 720P, I then get more frames with DX12 over DX11. I assume my CPU (3930K @ 4.4) isn't being pushed at 1440P but is when it is at 720P.
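A crude way to picture the result above is to treat each frame as taking whichever is longer, the CPU work or the GPU work. The sketch below uses entirely made-up millisecond figures (they are not measurements from this system or this game) and assumes DX12 cuts CPU time per frame while costing slightly more GPU time; `fps` is a hypothetical helper:

```python
def fps(cpu_ms, gpu_ms):
    """Frames per second when the slower of CPU and GPU sets the frame time."""
    return 1000 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs in milliseconds, chosen only to illustrate the shape.
cpu = {"DX11": 9.0, "DX12": 6.0}                   # assumed: DX12 lowers CPU overhead
gpu = {
    "1440p": {"DX11": 14.0, "DX12": 15.0},         # assumed: GPU-bound, DX12 path a bit slower
    "720p": {"DX11": 4.0, "DX12": 4.3},            # assumed: GPU finishes early, CPU-bound
}

for res, times in gpu.items():
    for api in ("DX11", "DX12"):
        print(res, api, round(fps(cpu[api], times[api]), 1))
```

With numbers like these, DX11 wins at 1440p because the GPU is the limit either way, and DX12 wins at 720p because the CPU becomes the limit and DX12 spends less time there. Real frames overlap CPU and GPU work in more complicated ways, so take this as a picture of the idea only.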

System used is a 3930K - 16GB Ram (2133Mhz) - RIVF motherboard - Titan X - ROG Swift G-Sync monitor.

I can see the benefits for DX12 and especially for those that run multi GPU or older/slower CPUs and I am sure when SLI and Crossfire are patched into DX12, people will start to see some nice gains.

No it doesn't. >30% better performance using Core i7-2600K according to the developer, Core i3-4330 and FX6300 seeing improved performance @ Soviet Camp (with Fury X) according to CB and even Haswell-E is faster in CPU bound scenarios now. Needs some polish on high-end systems (shouldn't regress performance at all), but I'm sure it will improve over time.

www.youtube.com/watch?v=CoKmLvjxSnE

Next time do your research before you post.

Mind you, we are in the GPU forum:

http://i1024.photobucket.com/albums/y303/martmail55/1080ptb_zpsrapchxss.jpg

No improvements.

With a Core i7-6700K, which means nothing for slower CPUs.

The fact that your favourite brand doesn't see huge improvements here is not my fault. Have a nice day.

The fact that DX12 is slower than DX11 across the board in this game is not your fault as well, assuming you were not involved in development of this game.

Good day to you as well.

And, in all honesty, the only thing that's showing as genuinely slower on those DX11 vs DX12 charts seems to be Fury.

I would not be hugely surprised if these sorts of charts are what most DX12 games end up looking like.

Yes, the API lets you get non-trivial advantages for specific GPU architectures if you really put big effort into optimising at a low level for that architecture.

Given the amount of 'effort' seemingly put into PC ports, would anyone expect them to do that? For the ~half dozen architectures there will be in the PC space?

(For GCN 1.1 yes, but that architecture is gone in 3-6 months' time.)

Especially given that all that effort is wasted, or even counterproductive, in 2-3 years' time when all the cards on sale are using different architectures.

I imagine that the CPU benefits should be much safer to get.

PS - Serious question. Has anyone checked to see what all these DX12 things do on Intel iGPUs? That would be quite an interesting sort of data point. And, yes, given trends we do need to take those reasonably seriously too.

I'm pretty sure both AMD and nVIDIA had said there are ways to do Conservative Rasterization and ROV without relying on the hardware. I remember an AMD employee specifically said those methods were used in Dirt Rally.

Nope. It needs to be hardware.

developer.nvidia.com - Don't be conservative with Conservative Rasterization

Is it possible to achieve Conservative Raster without HW support?

Yes, it is indeed possible to do this, and there is a very good article describing it here.

Essentially it involves using the Geometry Shader stage to either:

a) Add an apron of triangles around the main primitive

b) Enlarge the main primitive

However both approaches add performance overhead, and as such usage of conservative rasterization in real time graphics has been pretty limited so far.
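For a feel of what option (b) in the quote above involves, here is a small Python sketch of the geometry only: push each edge of a counter-clockwise triangle outward far enough to reach the centre of any pixel whose square touches it, then re-intersect the edges. This is my own illustration of the idea, not the code from the linked article or from any driver, and `enlarge_triangle` is a made-up name. A real geometry shader version would also have to clip the result, because at sharp corners the enlarged triangle sticks out much further than half a pixel.

```python
def enlarge_triangle(tri, half_pixel=0.5):
    """Offset each edge of a CCW triangle outward and return the new vertices."""
    lines = []
    for i in range(3):
        ax, ay = tri[i]
        bx, by = tri[(i + 1) % 3]
        nx, ny = by - ay, -(bx - ax)  # outward normal of edge a->b (not normalized)
        # Shifted edge line n.p = c, moved out by the pixel's reach along n.
        c = nx * ax + ny * ay + half_pixel * (abs(nx) + abs(ny))
        lines.append((nx, ny, c))

    enlarged = []
    for i in range(3):
        # Vertex i sits where the previous edge and the current edge meet.
        n1x, n1y, c1 = lines[i - 1]
        n2x, n2y, c2 = lines[i]
        det = n1x * n2y - n1y * n2x   # non-zero for any non-degenerate triangle
        x = (c1 * n2y - n1y * c2) / det
        y = (n1x * c2 - c1 * n2x) / det
        enlarged.append((x, y))
    return enlarged


print(enlarge_triangle([(0.25, 0.25), (7.25, 0.25), (0.25, 7.25)]))
# [(-0.25, -0.25), (8.75, -0.25), (-0.25, 8.75)]
```

Notice how the two sharp corners move out by 1.5 pixels along one axis while the right-angle corner only moves half a pixel each way. That extra area is part of the overhead the quote mentions: more pixels get shaded than strictly needed unless they are clipped away later.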