It could be even fewer cents on a denser node, TSMC for example.

2006 Intel stumbled because a reasonable engineering gamble to leverage an overwhelming process advantage on a radical architecture did not pan out.

2020 Intel:

- Has a process disadvantage and will likely never hold an advantage again

- Sticks to stale design practices and methods that were assets while it had a process advantage, but are now liabilities

- Engaged in a four-year series of politicized layoffs, which in turn drove out even more engineering talent

- Still thinks it can rely on its name to attract talent while paying 30% less than all the other companies, and therefore fails to attract new boots-on-the-ground talent

- Is under attack in every market and is outclassed in most of them

My two cents after 16+ years in the industry... works out to 1/8 cent a year.

Discussion Comet Lake Intel's new Core i9-10900K runs at over 90C, even with liquid cooling TweakTown

I don't even know what there is to discuss about TSMC's 7nm. No one cared about Intel's better node, so why would anyone care about TSMC's better node for AMD? We have what we have.

Because AMD made a big fuss of using "7nm" for their chips, AMD fans followed that and suddenly everyone on PC forums became a semiconductor expert.

In fact until 2017 there weren't that many discussions about CPUs at all. Some PC review websites weren't even reviewing them that often (e.g. TPU).

Intel's 4 cores somehow worked for pretty much everyone. 1-2 years later - despite still gaming up to 4K, using the same OS and software - suddenly 4 cores became good only for basic office work and browsing the web.

I recommend you take a look at benchmarks of modern games, not just GTAV and Witcher 3.

4 cores are indeed becoming useless for modern multiplayer games, and also for more and more single-player games that don't skimp on investing in their engines.

With AMD pushing core counts, a lot of people realized that paying hundreds of extra dollars for the 'luxury' of not having to close every browser tab, app and other program when you want to play a demanding game is NOT normal.

This has nothing to do with brands, it's just what it is.

Taking it personally just because it doesn't fit your agenda, and babbling about AMD fans conspiring against quad-core Intel CPUs, only makes you look silly.

Thunder 57

Platinum Member

- Aug 19, 2007

AMD's 7nm marketing made a big fuss about it. I have rarely cared for their marketing. Four cores started to suck years ago. My 3570K limited my FPS in BF1, and that was 2016. 4C/8T is the minimum now. But Intel was giving you the middle finger for all those years, to put it in your words. Why do you think HT is suddenly coming back to all of Intel's CPUs? In the bad years Intel charged you about a $100 Intel tax for HT. Then they stopped shipping them with coolers. Intel is the one giving us the middle finger. It looks like they may have changed their minds. We shall see.

coercitiv

Diamond Member

- Jan 24, 2014

Lol, what world were you living in?! CPUs were always a hot discussion topic among enthusiasts, and this forum even had continuous talk about nodes too.

Please don't try rewriting history to suit an argument, it lowers the quality of this discussion even further.

Yes, going from 4 to 8 cores gives you... 5-10% more fps? That's what I've seen. Maybe some titles show a larger gain - I don't track games that much anymore.

And I know some people just can't accept the idea that a CPU bottlenecks GPU. Well, it's their choice.

On a cost basis, considering the PC as a whole, this makes very little sense, unless maybe you're really going for the top experience at any cost...

If the difference was significant - for example: spending 20% more on a PC (just CPU-related) gave me 20% more fps - I'd probably agree. But it seldom works like that in gaming.

Of course it's a different story in some other tasks. For example: if you're doing simulations (engineering, financial etc), getting 8 cores instead of 4 means you can perform twice as many runs. So for, say, 30% more money you get 100% more performance.
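The cost argument above boils down to performance per dollar. Here is a minimal Python sketch using only the post's own illustrative figures: +20% cost for +20% fps in gaming, and +30% cost for twice as many simulation runs (which assumes perfect scaling from 4 to 8 cores). The function name `relative_value` is hypothetical.

```python
def relative_value(cost_increase: float, perf_increase: float) -> float:
    """Performance per dollar relative to the baseline build (1.0 = same value).

    Both arguments are fractional increases, e.g. 0.30 for +30%.
    """
    return (1 + perf_increase) / (1 + cost_increase)


# Illustrative figures from the post, not measurements.
scenarios = {
    "gaming, +20% cost for +20% fps": (0.20, 0.20),
    "simulations, +30% cost for 2x runs": (0.30, 1.00),
}
for label, (cost, perf) in scenarios.items():
    print(f"{label}: {relative_value(cost, perf):.2f}x perf per dollar")
```

A result of 1.0 means the extra spend buys proportionally more performance and nothing more. The simulation case comes out around 1.54x, which is the "30% more money, 100% more performance" point expressed as value.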

I still don't understand why you focus on Intel so much. Yes, they sat on up to 4 cores in the consumer lineup. But they worked on other things.

AMD's CPU division didn't give us anything new in that period, on any front. Why don't you criticize them?

Pricing is a separate story. Intel, having no competition in most segments, could ask whatever they wanted. Why would I blame them for that?

Thunder 57

Platinum Member

- Aug 19, 2007

Enough with the stupid emojis. So you say that "Intel worked on other things" but limit AMD to "AMD's CPU division didn't give us anything new..."

AMD probably survived thanks to their video cards and console sales at the time. I am criticizing you for not understanding how things were back then, and for picking and choosing what you think matters.

See, I can emoji too. Let's examine this:

Pricing is a separate story. Intel, having no competition in most segments, could ask whatever they wanted. Why would I blame them for that?

Intel took advantage of their superior products. Good work resulting in a great product should come at a premium. You shouldn't blame them for that, as I (and many others) went with Intel. What you should be praising is AMD's return to competition. Why do you not see that as a good thing? It has benefited us all.

Looking at this "supposedly leaked" review of the 10900K, it seems a fine CPU.

It is compared to the 3900X and 3950X running 1900 FCLK and CL15, so pretty much top-of-the-line Zen 2 performance.

For Intel, it is running abysmal DDR4-4000 18-22-22-42, so there is at least some performance left on the table. I'd have preferred a 3800 CL15 setup with tuned secondaries, but I guess that's life with leaks.

P.S. It's kinda sad that AnandTech will be running these CPUs "stock" with stock memory settings, which are not really relevant to the enthusiasts who read these sites, but are relevant to people who don't.

You may have looked at benchmarks, but I guess you didn't see too much. Comprehension makes all the difference in life when you're not being breastfed anymore.

Going from 4 cores to more cores gives you... guess what... the difference between being playable and unplayable. You won't see a big difference in average fps, but a tremendous difference in minimum fps. If you really insist, we can do this all day - all night.
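The average-vs-minimum distinction is easy to see with frame times. Below is a minimal Python sketch with made-up frame times (not benchmark data). It assumes per-frame render times in milliseconds and treats minimum fps as the rate of the single slowest frame; the post doesn't say how minimum fps was measured, so that definition is an assumption, and `fps_summary` is a hypothetical helper.

```python
def fps_summary(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average fps, minimum fps) from per-frame render times in milliseconds."""
    # Average fps: frames rendered divided by total elapsed seconds.
    avg_fps = 1000 * len(frame_times_ms) / sum(frame_times_ms)
    # Minimum fps: instantaneous rate of the single slowest frame (an assumed definition).
    min_fps = 1000 / max(frame_times_ms)
    return avg_fps, min_fps


# Made-up frame times, not benchmark data: both runs take 1090 ms for 100 frames.
smooth = [10.9] * 100              # every frame takes the same time
stuttery = [10.0] * 99 + [100.0]   # fast frames plus one long stall

for name, times in (("smooth", smooth), ("stuttery", stuttery)):
    avg, low = fps_summary(times)
    print(f"{name}: avg {avg:.1f} fps, min {low:.1f} fps")
```

Both runs average about 91.7 fps, but the single 100 ms stall drags the second run's minimum down to 10 fps. That is the kind of gap an average-only comparison hides.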

DrMrLordX

Lifer

- Apr 27, 2000

They run Matisse with DDR4-3200 CAS/CL16 usually, don't they?

Nah, 15-16-16-38 is slow for Ryzen. Probably didn't even change subtimings either.

coercitiv

Diamond Member

- Jan 24, 2014

DDR4 3800 CL15 is slow for Ryzen?!

Those are decent timings. In fact, not every 39XX will even run 1900 FCLK, but it is way more effort than loading a lazy XMP profile that probably has tRFC at 800.

Gideon

Golden Member

- Nov 27, 2007

4000 CL18 isn't perfect (9 ns), but I wouldn't call it abysmal. It's almost the same as 3600 CL16 (8.89 ns) and better than 3200 CL15 (9.375 ns).

With the data rate in MT/s (the number in the DDR4-XXXX rating), you get the memory access latency in ns with the following calculation:

latency (ns) = 1 / (dataRate / 2) * casLatency * 1000
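The same calculation as a small Python function, assuming the data rate is given in MT/s (the number in the DDR4-XXXX rating). It only covers CAS latency, not tRCD, tRP or the secondary/tertiary timings; `cas_latency_ns` is a hypothetical name.

```python
def cas_latency_ns(data_rate_mts: float, cas_latency: int) -> float:
    """Return CAS latency in nanoseconds for a DDR kit rated at data_rate_mts."""
    # DDR transfers data on both clock edges, so the real clock (MHz) is half the data rate.
    clock_mhz = data_rate_mts / 2
    # One clock period is 1000 / clock_mhz ns; CL is counted in clock cycles.
    return cas_latency * 1000 / clock_mhz


# Kits mentioned in this thread: (data rate in MT/s, CL)
kits = [(4000, 18), (3800, 15), (3600, 16), (3200, 15), (2933, 16)]
for rate, cl in kits:
    print(f"DDR4-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
```

For DDR4-4000 CL18, DDR4-3600 CL16 and DDR4-3200 CL15 the function returns 9.0, about 8.89 and 9.375 ns respectively.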

It's amazing how people can spew BS to support their argument and hope it sticks. Package power in the torture test was just under 105W. Before you complain about chilled water, the 8700K in that same chart drew 50W+ more power.

It's quite obvious from the link you posted that the 2700X is TDP-restricted. I call that going belly up, as Zen+ usually does when it smells a power virus. What I'm trying to say is that you won't see XFR(2) or PBO in a power virus test, whereas an Intel chip will always run everything, apps or power virus, AVX or not, with turbo boost applied until it hits some kind of limit, be it thermal or power.

Do you remember when power consumption tests went from Prime 95 to Blender, Handbrake, or Cinebench? Who do you think was responsible for that?

Let's take some numbers from your link:

Prime 95, Small FFTs, AVX Enabled:

Ryzen R7 2700x = 104.7 watts

Core i5 8400 = 117.5 watts

Compared to this:

Blender Gooseberry: (System Power Consumption)

Ryzen R7 2700x = 205 watts

Core i5 8400 = 117 watts

Intel Core i9-9900K Re-Review

Today we're revisiting our original Core i9-9900K review and updating it with 95 watt TDP limited results, basically results based on the official Intel specification. For better...

www.techspot.com

Obviously, when I said the 2700X consumes 200W+, I meant system power. So here we go:

205 W running Blender at stock, and 246 W running the same at just 4.2 GHz:

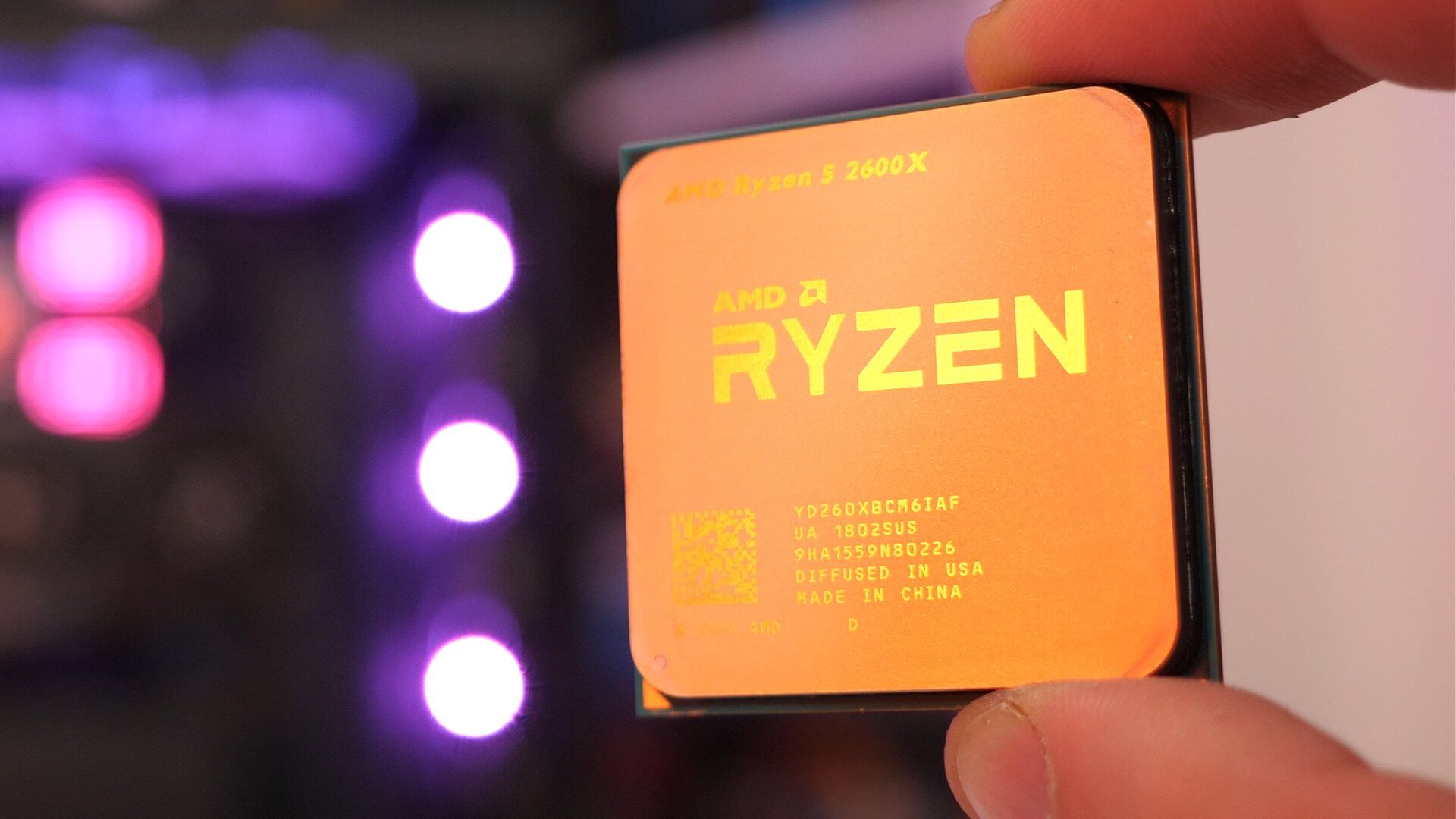

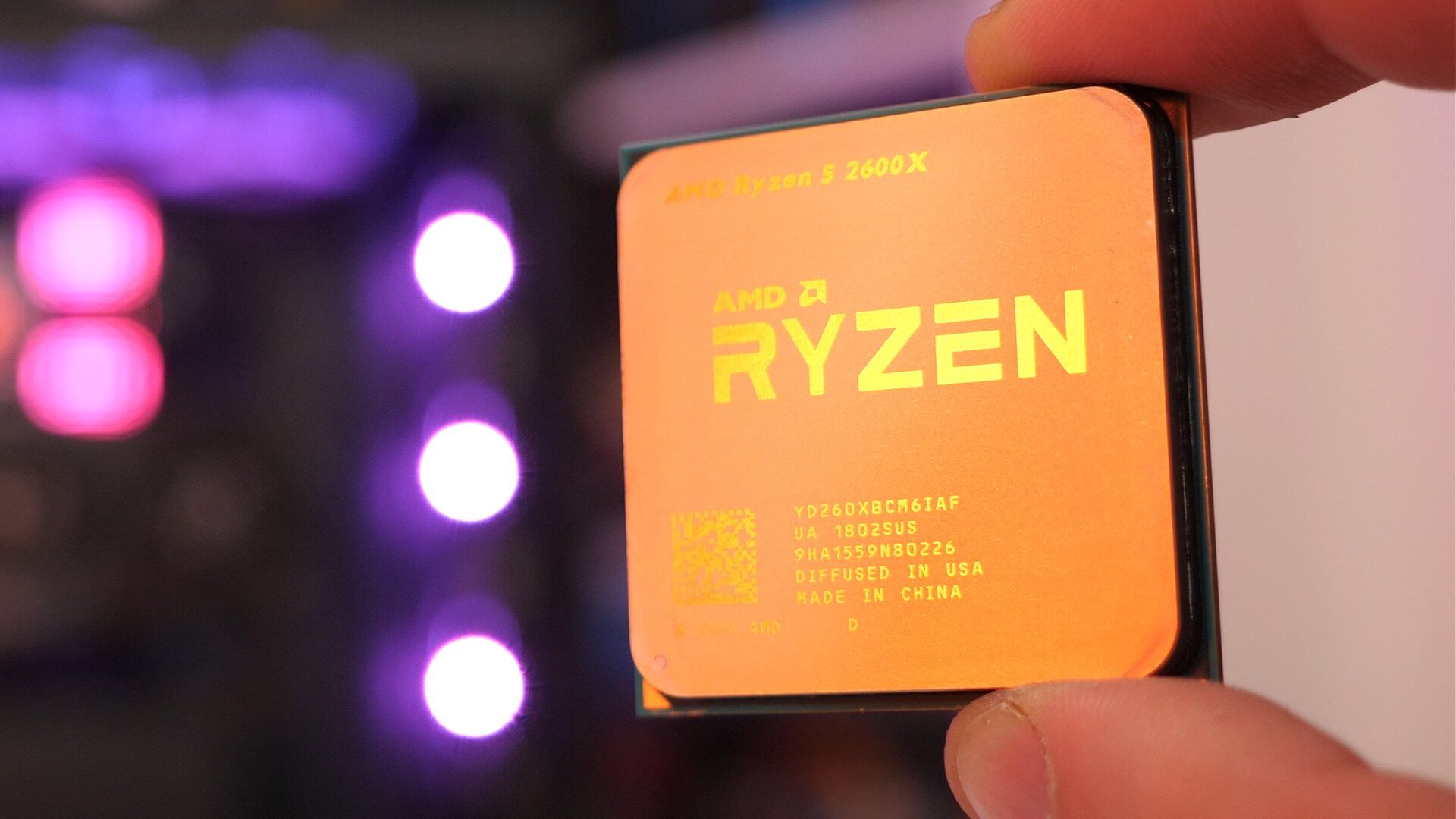

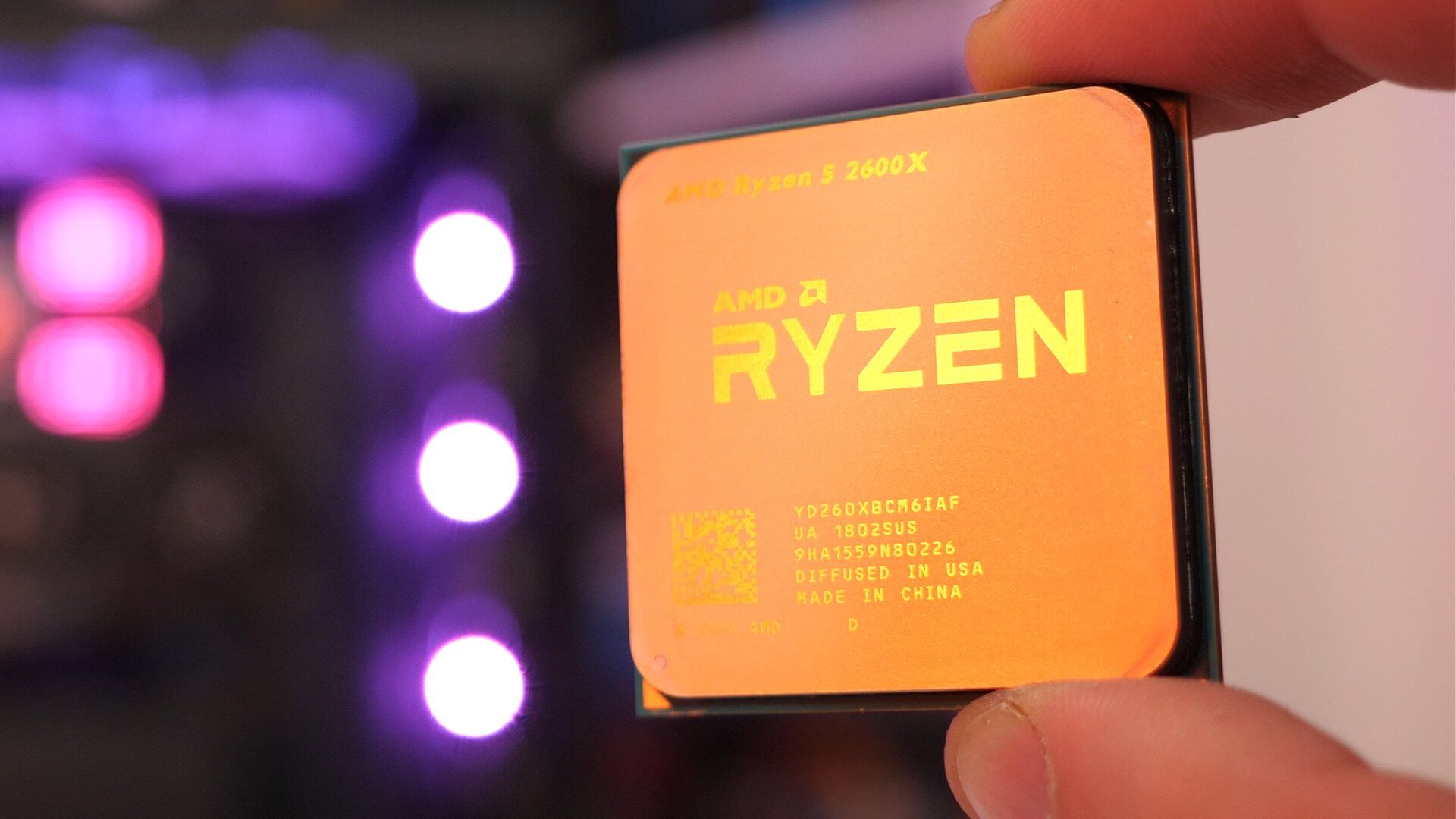

AMD Ryzen 7 2700X & Ryzen 5 2600X Review

Power Consumption, Operating Temperatures. It's been great to have more competition in the CPU sector since Ryzen arrived. Based on a refreshed Zen+ architecture, today we're testing AMD's new X processors:...

www.techspot.com

Now that you've been called out on complete nonsense, of course you say you obviously meant system power consumption... Hilarious

Nevertheless, thanks for this post, as this reminder of older data points makes @piokos's argument (or rather, conspiracy theory) pretty much moot by itself: the 2700X is marginally slower than the 7820X in Blender while consuming 20W less, despite being manufactured on an inferior process node.

Keep on contributing nothing but your usual nonsense, calling out people who are making real arguments. What's your contribution to the thread? Nothing, as usual.

I don't know why you choose to ignore my two arguments in the very same post you've just replied to, but it's mostly a free world, so go for it. Great contribution, to quote a classic.

As to why I formulated my argument in such a pungent way: because I've found what you said about measuring CPU power consumption using system power consumption to be very disingenuous.

What I meant is:

Intel worked on other things for CPUs. They just didn't focus on increasing core count in desktop parts, which is maybe the only thing you care about.

AMD CPU division did pretty much nothing.

When exactly did I say that AMD's comeback is not a good thing?

So games were unplayable until March 2017?

And what about 6 cores? Are games playable or not? Or half of the time?

Markfw

Moderator Emeritus, Elite Member

- May 16, 2002

Why are we looking at power consumption numbers from 1-2 generations back? When the 10900K reviews come out, they will probably include current CPUs from both camps.

4000 CL18 is not as bad as 2933 CL16 or whatever. But CL18 and memory access latency are only part of the story. There are also other primary timings that are bad, and XMP does horrible things to the secondaries and tertiaries to make the memory compatible.

So AMD got 1900 FCLK with fine-tuned latency, pretty much peak performance, while Intel got a horrible XMP job that left performance on the table. Tuning things like tRFC/tREFI/tFAW is mandatory to extract full performance out of these multi-core beasts, and it becomes more important as core counts grow.

Those power consumption numbers would have severely limited Zen 2, and AMD would probably have resorted to what Intel is doing now with Rocket Lake, back-porting the architecture onto GlobalFoundries' 12LP process node.

Gideon

Golden Member

- Nov 27, 2007

That's some utter BS.

It takes 4-5 years to implement a totally new microarchitecture from the ground up (e.g. Zen). AMD was really strapped for cash in 2011-2016, so they focused everything they had on that. Even then, they had to downsize drastically, take on as much debt as they could, and even sell off their campus to generate some cash.

BTW, AMD initially had desktop Bulldozer updates on the roadmap too (a 10-core one, for instance), but as the architecture sucked so much, no server customer was interested in the follow-ups (other than Piledriver), so they canned them. The fact that there was no decent new GloFo node coming after 32nm (28nm bulk was a sidegrade) and before 14nm (which arrived around the time Zen was due) aided their decision.

So they ended up only doing small mobile iterations of Bulldozer (Carrizo was the most important one, validating fully synthesized designs and the power savings that came from them, plus a uop cache and some other minor things).

Your claim pretty much amounts to AMD being lazy because they didn't want to go bankrupt (a problem Intel did not face).

EDIT:

And yes, the 4-5 years means that engineers had already been working on Zen 3 for quite some time before Zen 1 was even released. And yes, Intel's Tick-Tock was also only possible because multiple designs were worked on in parallel.

Thunder 57

Platinum Member

- Aug 19, 2007

You're so full of Intel your eyes are blue. Now you throw in system power when you were clearly wrong? Then why do CAD and gaming also show considerably less power use compared to the 8700K? Clearly those are not power viruses.

When exactly did I say that AMD's comeback is not a good thing?

When you said AMD was giving us the middle finger for 10 years. See @Gideon's response for a better answer.

AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.