AnandTech has had a great article on its front page for a while that I don't see discussed here:

Synopsys is the company that provides design tools to AMD (and AMD themselves say the partnership was essential to delivering Zen 2 as well as they did), so I'm really looking forward to seeing these advances applied to CPU design too.

The most relevant quote (though I really suggest reading the entire article):

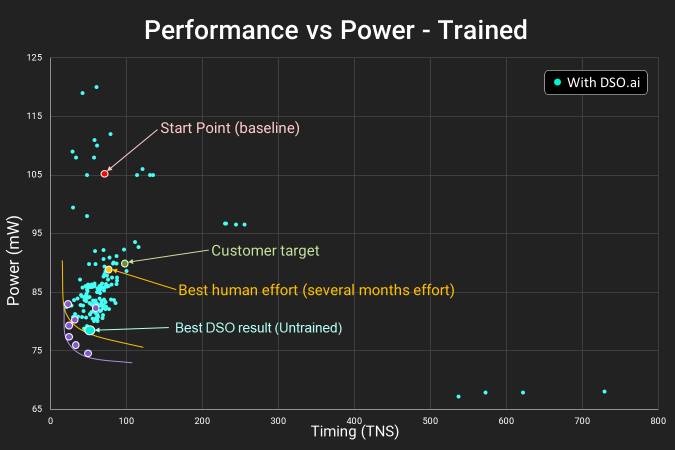

In this graph, we are plotting power against wire delay. The best way to look at this graph is to start at the labeled point at the top, which says Start Point.

- Start Point, where a basic quick layout is achieved

- Customer Target, what the customer would be happy with

- Best Human Effort, where humans get to after several months

- Best DSO result (untrained), where AI can get to in just 24 hours

All of the small blue points indicate one full AI sweep of placing the blocks in the design. Over 24 hours, the resources in this test showcase over 100 different results, with the machine learning algorithm understanding what goes where with each iteration. The end result is something well beyond what the customer requires, giving them a better product.

There is a fifth point here that isn't labeled, and that is the purple dots that represent even better results. This comes from the DSO algorithm on a pre-trained network specifically for this purpose. The benefit here is that in the right circumstances, even a better result can be achieved. But even then, an untrained network can get almost to that point as well, indicated by the best untrained DSO result.

Synopsys has already made some disclosures with customers, such as Samsung. Across four design projects, time to design optimization was reduced by 86%, from a month to days, using up to 80% fewer resources and often beating human-led design targets.
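A quick sanity check on that 86% figure, as a rough sketch: the article only says "a month", so the 30-day baseline below is my own assumption, not a number from Synopsys or AnandTech.

```python
# Assumption: "a month" is taken as 30 days; the article gives no exact figure.
baseline_days = 30
reduction = 0.86  # the 86% reduction quoted above

# Time remaining after the quoted reduction is applied to the assumed baseline.
optimized_days = baseline_days * (1 - reduction)
print(f"{optimized_days:.1f} days")
```

Under that assumption the result lands at a bit over four days, which fits the "from a month to days" wording.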

I could see this shortening the design cycle for a CPU by several months, with huge wins in perf/watt, and in the long run radically changing how we build chips. Well worth a separate discussion thread IMO.