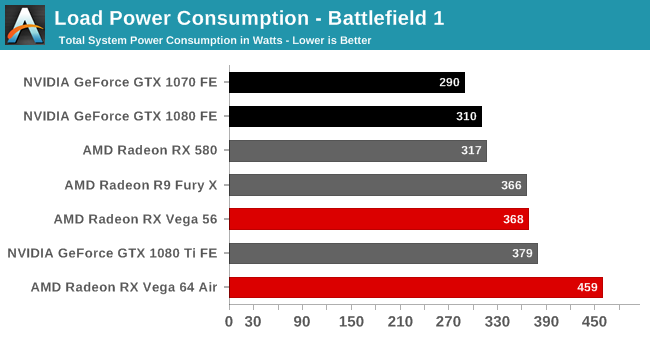

As much as I want to point the finger at Nvidia fanboys and their hypocrisies, I think it's fair to rag on Vega and refuse to upgrade a PSU for it while being willing to upgrade a PSU for the 3080, because RX Vega didn't even take the performance crown when it launched. The GTX 1080 Ti was the performance leader, and it consumed less power than the Vega 64.

The RTX 3080, on the other hand, isn't a revolution in perf/W, but it is the de facto performance leader at the moment. If people want top-of-the-line performance, they will do whatever it takes to get it, including upgrading their PSU.

I think the thing that made Vega particularly bad in this regard was the sheer number of excuses that kept piling up.

You have to remember that Vega arrived 16 to 17 months after the competition, and during that period AMD fans did everything they could to stop people from buying a GTX 1080 or 1080 Ti.

During this time a lot was said about Pascal: how it was not impressive, how Vega was going to beat the 1080 Ti, and how people should just wait for Vega. On top of that, the wait kept getting longer and longer, and people were at their wits' end. This bet that people would keep waiting had a cost: the goodwill and faith people had in AMD and in the fan base that advertised for them.

Then the card came, underperformed, and was priced just like the competition, on top of being loud and hot. People had had enough and just wanted a video card now, which at this point wasn't a Vega 64 anymore but most likely a GTX 1080 Ti.

Then AMD fans made more bets on goodwill to get people to buy Vega anyway: that it could be fixed by underclocking, that the Vega 64 could not be sold for less because of HBM2, and that people should wait for better drivers. What these fans did not realize is that the Vega 64 launch had bankrupted their goodwill. No one wanted to listen to these excuses anymore after waiting 16 months for a product that was so disappointing compared to the hype.

This launch is nothing like that. People really got hyped for Ampere over the last two weeks, and although some of the claims were outlandish (the 1.9x performance-per-watt claim, for example), most people knew what to expect from the Digital Foundry preview, whose numbers turned out to be inflated by only about 10%. There wasn't a huge wait during which expectations kept growing, and the most impressive thing about the RTX 3080, its price, still held true. People knew before launch that it would draw 320 W, so that wasn't an unpleasant surprise. Additionally, unlike AMD, Nvidia gave the cards sufficient coolers, so they don't run hot, throttle, or get loud, which spared them the flak Vega received.

The fact that AMD fans are comparing this launch to Vega is absurd. If Vega had handily captured the performance crown, moved the price-to-performance bar meaningfully forward, and not been late, its reception would have been great even with its power consumption numbers.