igor_kavinski

Lifer

- Jul 27, 2020


I'm more concerned about the poor guy not being able to pay his bills. It was a bad idea to do an experiment on a critical PC.

Ouch, poor Mobo.


My old CLC cooler's pump died, so I replaced it with a Hyper Evo 212+ and haven't looked back. Maybe your cooler wasn't working properly.

Sorry for so many posts, but to add some closure: I found a Haswell rig on FB Marketplace for $140 that had no GPU or hard drive. I put my RAM, SSD, PSU and 1080 in it and I am back in action! I can’t believe the 4770K runs so much cooler on a simple air cooler than my 3770K did on a closed-loop cooler. Maybe I had it hooked up wrong all those years. I set it to auto OC at 4.2 GHz with no issues and updated the chipset, audio and LAN drivers to be safe.


OK, my good man, pay your bills and let's put that 4090 bad boy back in for another trial run.


So at the time my computer died and I called Gigabyte technical support, I was not aware my entire computer had died. I was in the denial stage of grief, and in my mind only the GPU could have failed, not something as drastic as the entire PC. I entered a replacement request with Newegg, which was approved, then drove to the UPS store over my lunch break and mailed the 4090 back. I don't know if it is dead or not. I thought it was at the time, but had no way to check. I don't know yet what Newegg will do or the status of the card. In any event, it will not fit in the new case, and I would not risk putting it in there. I will buy a large case (maybe a Fractal Pop XL Air) whenever the Ryzen 7000 3D V-Cache chips launch, and if I obtain a new 4090 it will go in there.

Best of luck!

It's a cut-down version of AD106.

I take this as a bad sign, in that they felt higher frequencies didn't matter because of the memory bottleneck. It's still probably 3070 to 3070 Ti performance.

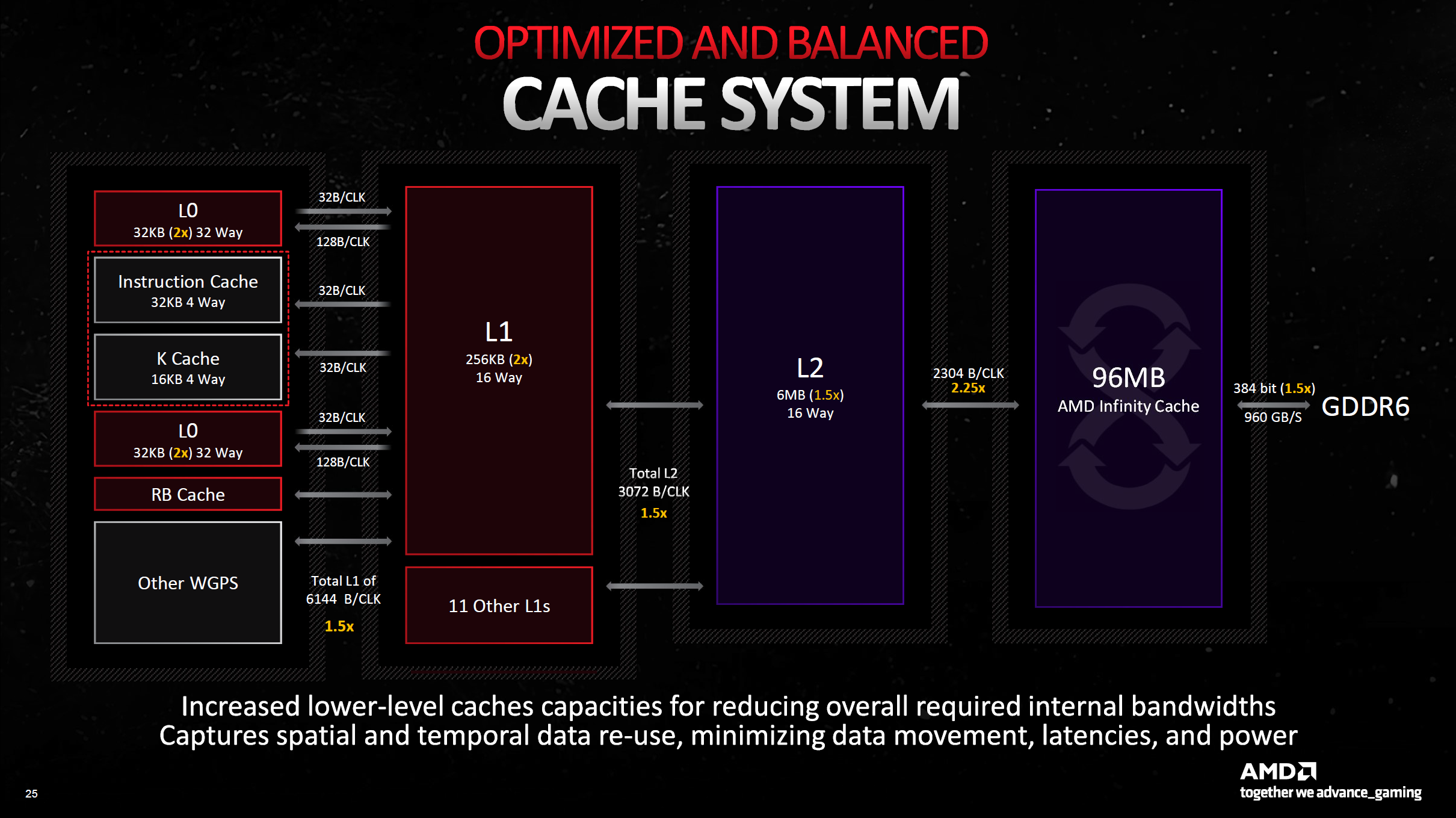

If the 4070 Ti has 76.5% more SMs and its TFLOPS will be over 66% higher, doesn't it also need over 66% more data?

It's probably a little early to say that a 4060 Ti with those specs won't have bandwidth issues. At least looking back at AMD's slides from the introduction of Infinity Cache, hit rates depend primarily on resolution and cache size. The 4070 Ti's lead over the 4060 Ti is about the same in bandwidth as in SM count, but the 4060 Ti, with its smaller cache, might have to go out to GDDR6 more often. If AMD's slide is still accurate, at 1080p 32MB of Infinity Cache has a 55% hit rate and 48MB is more like 66%. That's a miss rate of 45% versus 34%, meaning a 32MB GPU might need roughly 32% more trips to memory than the 48MB one.
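The miss-rate arithmetic above can be sketched in a few lines. This assumes, as the post does, that AMD's Infinity Cache hit rates (55% for 32MB and about 66% for 48MB at 1080p) carry over to NVIDIA's L2, and that DRAM trips scale with the miss rate for a fixed workload; `relative_dram_trips` is a hypothetical helper name.

```python
def relative_dram_trips(hit_small: float, hit_large: float) -> float:
    """Ratio of DRAM trips for the smaller cache vs the larger one.

    Assumes both caches see the same request stream, so only misses
    differ (a simplifying assumption, not a vendor model).
    """
    miss_small = 1.0 - hit_small
    miss_large = 1.0 - hit_large
    return miss_small / miss_large


# 1080p hit rates quoted from AMD's slide: 32MB -> 55%, 48MB -> ~66%
ratio = relative_dram_trips(0.55, 0.66)
print(f"32MB part needs {ratio:.2f}x the DRAM trips ({(ratio - 1) * 100:.0f}% more)")
```

Because only the miss fraction goes off-chip, a hit-rate gap of 11 points turns into a noticeably larger relative gap in memory traffic.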

Maybe it won't be so bad, but 288GB/s is just so low. That's 64% of what the 3060 Ti had and is basically halfway between a 3060 and a 3050.
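The 288GB/s figure follows from the usual peak-bandwidth formula: bus width in bits times per-pin data rate in Gbps, divided by 8. The bus widths and data rates below are the commonly listed specs for each card and are my assumption, not something stated in the thread; `peak_bandwidth_gbs` is a hypothetical helper.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak DRAM bandwidth in GB/s: bus bits * Gbps per pin / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed (bus width in bits, GDDR6 data rate in Gbps) for each card
cards = {
    "RTX 4060 Ti": (128, 18.0),
    "RTX 3060 Ti": (256, 14.0),
    "RTX 3060": (192, 15.0),
    "RTX 3050": (128, 14.0),
}

bw = {name: peak_bandwidth_gbs(*spec) for name, spec in cards.items()}
for name, value in bw.items():
    print(f"{name}: {value:.0f} GB/s")

# Ratio to the 3060 Ti, and the midpoint between the 3060 and 3050
print(f"4060 Ti / 3060 Ti: {bw['RTX 4060 Ti'] / bw['RTX 3060 Ti']:.0%}")
midpoint = (bw["RTX 3060"] + bw["RTX 3050"]) / 2
print(f"3060/3050 midpoint: {midpoint:.0f} GB/s")
```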


What would be the benefit of that? I wouldn't think there would be a use for its non-volatile nature on a GPU, and would there be much latency benefit to onboard Optane vs going over PCIe to main memory? I thought, at least on the CPU side, that the benefit of Optane was providing a layer of NVM between DRAM and SSD, with much larger capacities than you'd have with DRAM but still faster than a traditional NVMe SSD.

64GB or 128GB would be better, but I don't expect Intel to make that economical. It should save time because Optane access times are lower than pulling data off the SSD.

Or do you mean using Optane as a cache for calls to NVMe through DirectStorage or something similar? If that was the case, wouldn't you want something a lot bigger than 32GB?
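The claim that an Optane layer "should save time" is an average-access-time argument: requests that hit the faster tier never reach the slower SSD. A minimal sketch of that calculation follows; the latencies and hit rate are placeholder values made up for illustration, not measured Optane or NVMe numbers, and `average_access_time` is a hypothetical helper.

```python
def average_access_time(tiers):
    """Average access time for a chain of storage tiers.

    `tiers` is a list of (hit_rate, latency) pairs ordered fastest to slowest.
    A request checks each tier in turn; the last tier must always hit
    (hit_rate 1.0). The cost of checking a tier that misses is ignored,
    which is a simplifying assumption.
    """
    total = 0.0
    remaining = 1.0  # fraction of requests not yet served
    for hit_rate, latency in tiers:
        total += remaining * hit_rate * latency
        remaining *= 1.0 - hit_rate
    return total


# Hypothetical latencies in microseconds (placeholders, not measurements)
SSD_ONLY = [(1.0, 100.0)]
WITH_OPTANE_TIER = [(0.8, 10.0), (1.0, 100.0)]

print(f"SSD only:     {average_access_time(SSD_ONLY):.1f} us")
print(f"with Optane:  {average_access_time(WITH_OPTANE_TIER):.1f} us")
```

A bigger Optane tier would show up here as a higher first-tier hit rate, which is the point behind asking for 64GB or 128GB instead of 32GB.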

Ah, yeah. At that point it's almost like the AMD SSG, but with slightly faster memory. It's kind of beside the point now, I guess, since Optane is EOL.

I hope Raja Koduri reads these forums. He can finally find Optane a nice home and purpose.

I think you are right, and hit rate won't be affected by higher FPS.

I don't believe so, but I would definitely cede the point to someone more knowledgeable than I. My basic understanding is that bandwidth requirements increase with framerate, as the GPU needs to go to memory (either cache or external) for every frame. However, the amount of memory required depends on the amount of data that needs to be stored, which is resolution (and game) dependent. If the data needed is in cache, you don't need to go to external memory; otherwise you do. For a given game and resolution, a larger L2 means higher hit rates, so fewer trips to external memory. In the case of the 4070 Ti vs the 4060 Ti, the larger cache means fewer misses, and thus less data is needed from external memory at a given resolution, while the wider bus and faster memory mean that data takes less time to transfer.

This assumes that hit rate doesn't go down at higher framerates versus lower ones, and logically I don't think it does. Scene data is more time-dependent than framerate-dependent: you move from one room to another in X seconds, not in Y frames. That's just speculation on my part, though.
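The reasoning above reduces to a simple model: external-memory traffic per second is the data touched per frame, times the miss rate, times the framerate. If, as speculated, the hit rate depends on resolution and scene rather than framerate, doubling FPS doubles the DRAM bandwidth needed while the hit rate stays put. The 2GB-per-frame figure below is an arbitrary placeholder, and `dram_traffic_gbs` and `fps_ceiling` are hypothetical helpers.

```python
def dram_traffic_gbs(gb_touched_per_frame: float, hit_rate: float, fps: float) -> float:
    """External (DRAM) traffic in GB/s: only the missed fraction of each
    frame's data goes off-chip, and that happens once per frame."""
    return gb_touched_per_frame * (1.0 - hit_rate) * fps


def fps_ceiling(dram_bw_gbs: float, gb_touched_per_frame: float, hit_rate: float) -> float:
    """Highest framerate DRAM bandwidth alone could sustain under this model
    (ignores compute, latency and everything else)."""
    return dram_bw_gbs / (gb_touched_per_frame * (1.0 - hit_rate))


GB_PER_FRAME = 2.0  # placeholder, not a measured value
HIT_RATE = 0.55     # 32MB at 1080p, from AMD's slide as quoted in the thread

for fps in (60, 120):
    print(f"{fps} fps -> {dram_traffic_gbs(GB_PER_FRAME, HIT_RATE, fps):.0f} GB/s")

print(f"ceiling at 288 GB/s: {fps_ceiling(288.0, GB_PER_FRAME, HIT_RATE):.0f} fps")
```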

Both vendors are using big caches to compensate for narrower buses and lower bandwidth, though. Something like the 6750 XT used a big 96MB cache and still had 432GB/s to external memory. It'll be interesting to see how a card that should have similar gaming performance does at higher resolutions with a cache 1/3 the size and 2/3 the memory bandwidth.
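One rough way to frame that comparison is to estimate DRAM time per frame as the missed fraction of requests divided by external bandwidth. This sketch assumes the Infinity Cache hit rates from the hit-rate table in this thread carry over to a 32MB L2 (NVIDIA's L2 is a different design, so this is a big assumption) and that both cards issue the same memory requests per frame; `relative_dram_time` is a hypothetical helper.

```python
def relative_dram_time(miss_a: float, bw_a: float, miss_b: float, bw_b: float) -> float:
    """DRAM time of card A relative to card B for the same request stream.

    Time is modeled as (fraction of data that misses the cache) / bandwidth.
    """
    return (miss_a / bw_a) / (miss_b / bw_b)


# 1440p hit rates from the thread's table: 32MB -> 38%, 96MB -> 69%
hit_32mb, hit_96mb = 0.38, 0.69
bw_4060ti, bw_6750xt = 288.0, 432.0  # GB/s, as quoted in the thread

print(f"cache size ratio: {32 / 96:.2f}")
print(f"bandwidth ratio: {bw_4060ti / bw_6750xt:.2f}")
r = relative_dram_time(1 - hit_32mb, bw_4060ti, 1 - hit_96mb, bw_6750xt)
print(f"relative DRAM time at 1440p: {r:.1f}x")
```

Under these assumptions the smaller cache roughly doubles the misses and the lower bandwidth makes each miss 1.5x slower, so the two effects multiply rather than add.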

| Infinity Cache 2 | 16 MB | 32 MB | 48 MB | 64 MB | 96 MB | 128 MB (doesn't exist) |
|---|---|---|---|---|---|---|
| Bytes/clock | 384 | 768 | 1152 | 1536 | 2304 | 3072 |
| Theoretical BW at 2.5 GHz | 960 GB/s | 1920 GB/s | 2880 GB/s | 3840 GB/s | 5760 GB/s | 7680 GB/s |
| Hit rate at 1080p (hit rate × theoretical BW) | 37% (355 GB/s) | 55% (1056 GB/s) | 65% (1872 GB/s) | 72% (2765 GB/s) | 78% (4493 GB/s) | 80% (6144 GB/s) |
| Hit rate at 1440p (hit rate × theoretical BW) | 23% (221 GB/s) | 38% (730 GB/s) | 48% (1382 GB/s) | 57% (2189 GB/s) | 69% (3974 GB/s) | 74% (5683 GB/s) |
| Hit rate at 4K (hit rate × theoretical BW) | 19% (182 GB/s) | 27% (518 GB/s) | 34% (979 GB/s) | 42% (1613 GB/s) | 53% (3053 GB/s) | 62% (4762 GB/s) |
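The table's columns follow from two multiplications: theoretical bandwidth is bytes per clock times the 2.5GHz clock, and each bandwidth figure in the hit-rate rows is that theoretical bandwidth scaled by the hit rate. A small sketch that regenerates the rows from the table's own inputs (the constant names are mine):

```python
CLOCK_GHZ = 2.5
SIZES_MB = [16, 32, 48, 64, 96, 128]
BYTES_PER_CLK = [384, 768, 1152, 1536, 2304, 3072]
HIT_RATES = {
    "1080p": [0.37, 0.55, 0.65, 0.72, 0.78, 0.80],
    "1440p": [0.23, 0.38, 0.48, 0.57, 0.69, 0.74],
    "4K": [0.19, 0.27, 0.34, 0.42, 0.53, 0.62],
}

# Bytes per clock * clock in GHz = GB/s
theoretical = [b * CLOCK_GHZ for b in BYTES_PER_CLK]
print("Theoretical BW:", [f"{bw:.0f} GB/s" for bw in theoretical])

# Bandwidth figure in each hit-rate row = hit rate * theoretical bandwidth
for res, rates in HIT_RATES.items():
    served = [hit * bw for hit, bw in zip(rates, theoretical)]
    print(f"{res}:", [f"{round(s)} GB/s" for s in served])
```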

The proliferation of open-world game designs is exacerbating the memory pressure issue. If you are in a high-speed vehicle, for instance, the game is constantly streaming data, which introduces more latency because the data needs to be loaded into VRAM and older data evicted to make room for it. Clever design that reuses a lot of textures seamlessly, without making it obvious to the gamer, is one solution, but it requires more creativity and increases development time. That's why more VRAM makes more sense now than a bigger cache. There's a limit to cache size, but it's much easier and more economical to add VRAM, as long as you are not using crazy expensive memory tech like GDDR6X or HBM3.
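The streaming-and-eviction behavior described above can be illustrated with a toy least-recently-used (LRU) model of VRAM residency. This is a hypothetical sketch, not how any particular engine or driver manages memory: the world is split into numbered tiles, the player drives a loop that touches each tile in order, and VRAM holds a fixed number of tiles. When the loop does not fit, LRU evicts each tile shortly before it is needed again, so every lap reloads everything; when it fits, only the first lap streams data.

```python
from collections import OrderedDict


def count_loads(route, vram_tiles: int) -> int:
    """Count tile loads (streaming events) for a route under LRU eviction."""
    resident = OrderedDict()  # tile id -> None, ordered oldest to most recently used
    loads = 0
    for tile in route:
        if tile in resident:
            resident.move_to_end(tile)  # hit: mark as most recently used
            continue
        loads += 1  # miss: tile must be streamed into VRAM
        if len(resident) >= vram_tiles:
            resident.popitem(last=False)  # evict the least recently used tile
        resident[tile] = None
    return loads


# A loop of 100 tiles driven three times (hypothetical sizes)
route = list(range(100)) * 3
print(count_loads(route, vram_tiles=80))   # loop doesn't fit: 300 loads
print(count_loads(route, vram_tiles=120))  # loop fits: 100 loads
```

The cliff between the two results is the argument for more VRAM in miniature: once the working set fits, repeated traversal stops generating streaming traffic.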

I also think that 32GB of onboard Optane on a graphics card, as an additional cache layer, could reduce the latency issue further.

Are any of the 4000-series cards released so far full dies?

Isn't the 4070 Ti all 60 SMs of AD104?