You'll just have to believe me. I've been reading reviews since the late '90s, and with the big reviews I'd run my own analysis on the results to see how performance scaled.

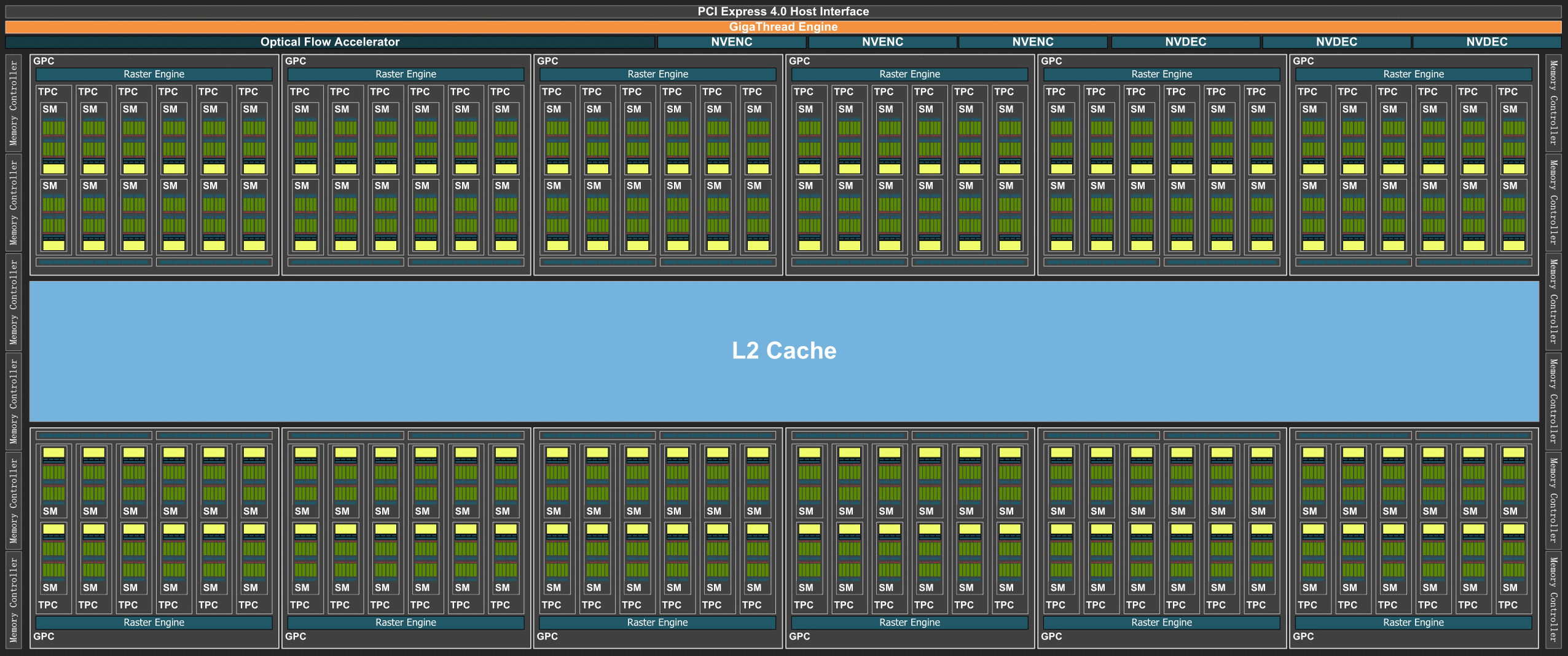

Approach it logically. A balanced GPU and game take advantage of each major feature equally, so doubling memory bandwidth gets you roughly a 20-30% gain. The same goes for fillrate and for shader firepower.
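One way to put a number on this rule of thumb is to treat performance as a power of the resource, perf ∝ resource^k, and estimate k from two benchmark runs. That model is my own framing, not something taken from any particular review, and the function name and fps figures below are hypothetical. Under it, a 20-30% gain from doubling a resource works out to k ≈ 0.26-0.38.

```python
import math


def scaling_exponent(perf_before: float, perf_after: float, resource_ratio: float = 2.0) -> float:
    """Estimate k in perf ~ resource**k from two benchmark results.

    Assumes a simple power-law model; resource_ratio is how much the
    resource (e.g. memory bandwidth) grew between the two runs.
    """
    return math.log(perf_after / perf_before) / math.log(resource_ratio)


# Hypothetical example: doubling bandwidth takes a game from 60 fps to 75 fps.
k = scaling_exponent(60, 75)
print(f"k = {k:.3f}")
```

A 25% gain from doubling gives k ≈ 0.32, which sits inside the balanced range.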

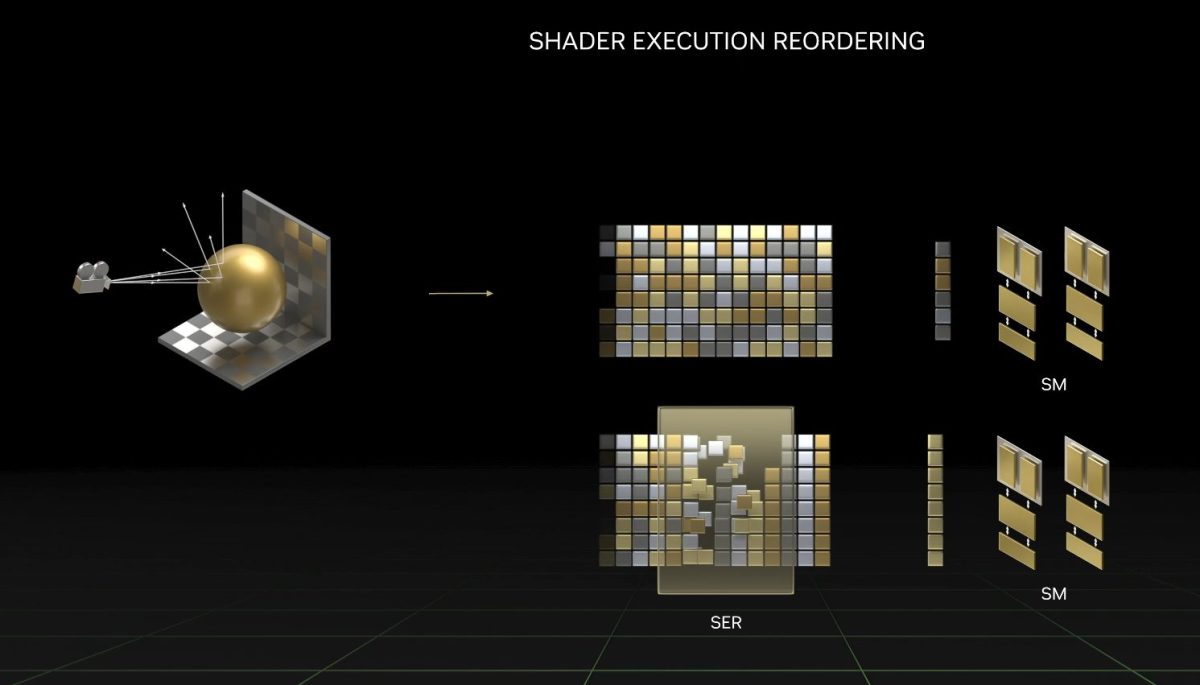

If a generation shifts the balance too far, that means there's optimization to be had. Imagine a game and video card combination where doubling memory bandwidth increased performance by 60-80%. Then you know there's a serious memory bottleneck somewhere in the game and/or the GPU. It also means you're wasting resources on excess shader and fillrate capacity, because you could have had a much better perf/$, perf/mm², and perf/W.
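A rough version of that check, assuming the 20-30% gain from doubling is the "balanced" band (a rule of thumb, not a measured constant): sort each resource's observed gain into bottleneck, balanced, or over-provisioned. The `diagnose` name, the band, and the example gains are all hypothetical.

```python
# Assumed rule-of-thumb band: gain from doubling a resource in a balanced design.
BALANCED_BAND = (0.20, 0.30)


def diagnose(gains: dict[str, float], band: tuple[float, float] = BALANCED_BAND) -> dict[str, str]:
    """Label each resource by how much doubling it improved performance.

    gains maps a resource name to its fractional gain (0.70 means +70%).
    Above the band suggests a bottleneck; below it suggests that resource
    is over-provisioned relative to the rest of the chip.
    """
    low, high = band
    result = {}
    for resource, gain in gains.items():
        if gain > high:
            result[resource] = "bottleneck"
        elif gain < low:
            result[resource] = "over-provisioned"
        else:
            result[resource] = "balanced"
    return result


# Hypothetical card where memory is starved and shaders/fillrate sit idle.
print(diagnose({"memory_bandwidth": 0.70, "fillrate": 0.10, "shader": 0.08}))
```

In the example, memory bandwidth gets flagged as the bottleneck while fillrate and shader come out over-provisioned, which is exactly the case where perf/$, perf/mm², and perf/W suffer.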

That's why it's called a rule of thumb, though. Getting the actual value takes all sorts of real-world analysis, since game development changes, and so does the quality of management on the GPU team.

Efficiency is still a good thing. Fewer shader units mean less die space and less heat.

There's no such thing as free. Getting more performance out of the shader units generally costs more resources. Doing better than that takes optimization, which requires innovation, ideas, and time, and those don't always materialize.