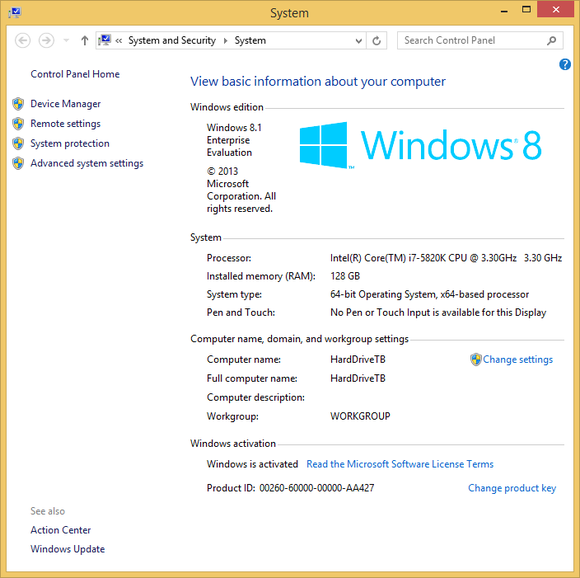

Hi, as the post says, I'm running a 5960X with an H110 cooler on an MSI Krait SLI motherboard. RAM is 32 GB of Corsair Dominator 3000; I've kept that at 2133 for now.

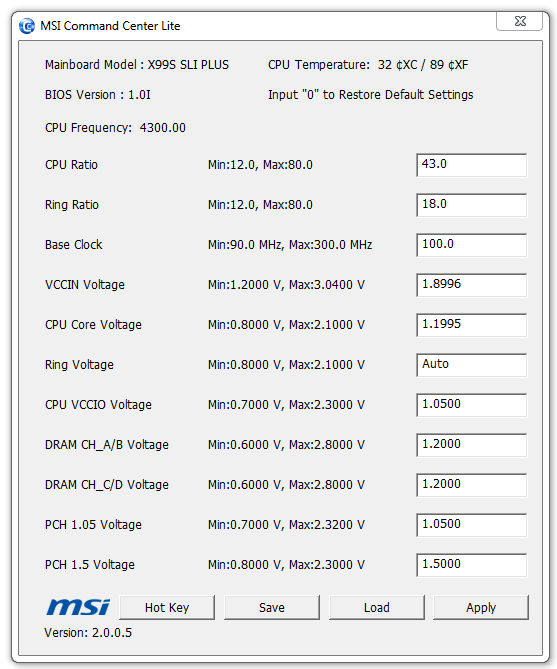

I've been playing around with overclock settings and I think I've managed to get it to 3.9 GHz stable(ish...). But people seem to be getting to 4.3-4.5 GHz without any trouble.

I was wondering if anyone could share information, or any sites that list BIOS parameters, that I could try in order to reach a higher stable overclock. 4.1 or 4.2 GHz would be very nice if I could get there and still have a machine that I only need to reboot once a month or less (I'm running Linux).

Any advice would be appreciated.

Also, is anyone running very large amounts of RAM? I'm looking at getting 128 GB for this machine, but I'm worried that without ECC it'll be practically unusable because of bit errors.

Interestingly, for the first 2-3 months after I built this box I would get a ton of memory errors, but now I don't see any noticeable amount. I wonder if RAM needs burn-in or something.
