NVIDIA France Accidentally Reveals GeForce RTX 4070 Ti Specs

With less than a week to go until the official launch of the GeForce RTX 4070 Ti, NVIDIA France has gone and spoiled things by revealing the official specs of the upcoming GPU. The French division of NVIDIA appears to have posted the full product page, but it has since then been pulled. That...

First official performance leak. A perfect opportunity to highlight the fraud pushed by nVidia with DLSS.

Performance looks fantastic, right? Look again at the small print: the 4070 Ti is using frame generation.

I said exactly this would happen as soon as DLSS 3.0 was announced. The "2560x1440" label is already a lie, given the game is actually rendered at ~1080p and upscaled by legacy DLSS. Since nVidia got away with that, the next step is frame interpolation lies. It's "faster", yo!
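To put rough numbers on the resolution point, here is a minimal sketch of the internal render resolution behind a "2560x1440" DLSS result. The per-axis scale factors are the commonly cited values for the Quality and Performance presets and are an assumption here; the leaked slide does not say which preset was used, and `internal_resolution` is just an illustrative helper name.

```python
def internal_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Return the resolution actually rendered before upscaling.

    `scale` is the per-axis render scale of the upscaler preset.
    """
    return round(width * scale), round(height * scale)


# Assumed per-axis scale factors (commonly cited, not taken from the leaked slide).
PRESETS = {"Quality": 2 / 3, "Performance": 0.5}

for name, scale in PRESETS.items():
    w, h = internal_resolution(2560, 1440, scale)
    print(f"{name}: {w}x{h} ({w * h:,} pixels rendered vs {2560 * 1440:,} displayed)")
```

Under these assumptions the Quality preset renders 1707x960, which is in the neighbourhood of 1080p rather than true 1440p.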

Adjusting for frame generation (i.e. halve the 4070 Ti's bars), it's actually barely faster than the 3080 in MFS, and slower in Warhammer 40K. All this from a card that will likely cost more.
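The halving can be written out as a quick sketch. It assumes frame generation inserts exactly one interpolated frame per rendered frame, so half of the displayed frames are generated; real-world scaling may differ. The fps figures below are hypothetical placeholders, not values read off NVIDIA's chart, and `without_frame_generation` is an illustrative name.

```python
def without_frame_generation(reported_fps: float, generated_share: float = 0.5) -> float:
    """Estimate rendered fps when `generated_share` of displayed frames are interpolated."""
    return reported_fps * (1.0 - generated_share)


# Hypothetical bar heights for illustration only.
reported_4070_ti = 120.0  # shown with DLSS 3 frame generation
reported_3080 = 58.0      # shown without frame generation

adjusted = without_frame_generation(reported_4070_ti)
print(f"4070 Ti adjusted: {adjusted:.0f} fps vs 3080: {reported_3080:.0f} fps")
print(f"Advertised lead: {reported_4070_ti / reported_3080:.2f}x, adjusted lead: {adjusted / reported_3080:.2f}x")
```

With these made-up inputs an advertised lead of roughly 2x shrinks to a few percent once the interpolated frames are taken out.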

Customers who don't see the small print and/or don't understand DLSS are having systematic fraud perpetrated on them.

Reviews

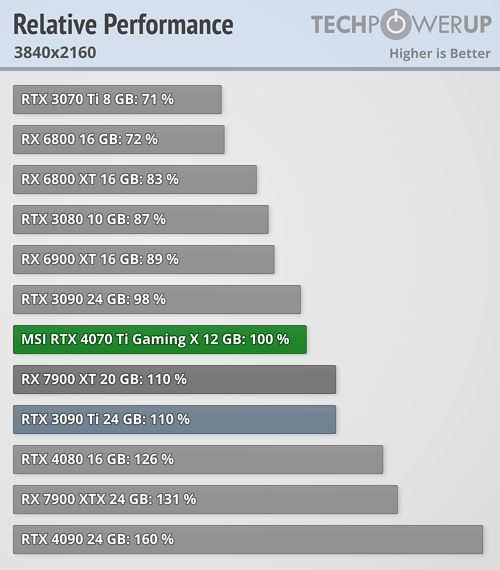

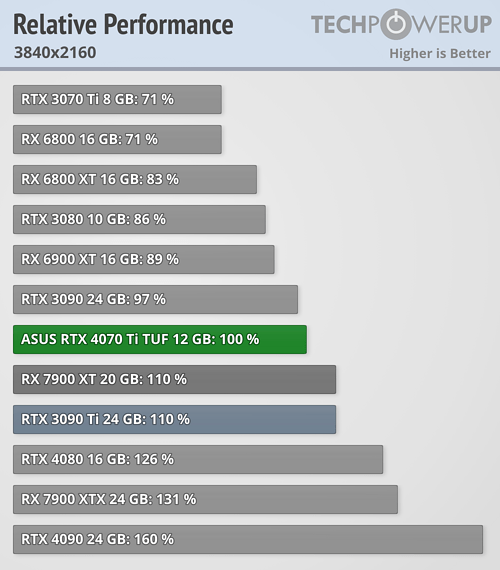

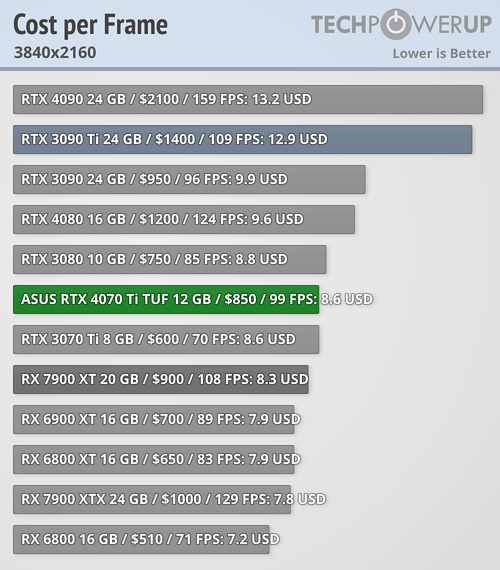

MSI GeForce RTX 4070 Ti Gaming X Review

MSI's GeForce RTX 4070 Ti Gaming X comes with a large triple-fan, triple-slot thermal solution that runs at whisper-quiet noise levels with low temperatures. In terms of performance the new card is able to match last generation's flagship, the RTX 3090 Ti, and offers new features like DLSS 3...
